
Overclocking, Consumption and Thermals

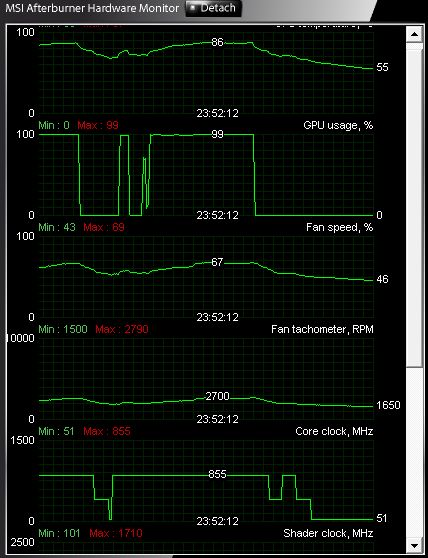

Our results confirm that the GTX 580 packs plenty of overclocking potential. We managed to hit 855MHz on the GPU and 1190MHz (4760MHz effective) on the memory, all without touching voltages or changing the fan from AUTO mode. Reference clocks for this card are 772MHz for the GPU and 4008MHz (effective) for the memory. We have heard that most GTX 580 cards can reach 890MHz on the GPU with added voltage, but we will check this later on.
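To put the headroom in relative terms, the short Python sketch below converts the clocks quoted above into percentage gains over reference. The only assumption is the GDDR5 factor of four between the memory clock and its effective rate, which is exactly the 1190MHz to 4760MHz relationship given in the text.

```python
# Clocks reported in this review, in MHz.
# Memory figures are effective GDDR5 rates (4 transfers per clock).
REF_GPU = 772
OC_GPU = 855
REF_MEM_EFFECTIVE = 4008
OC_MEM_CLOCK = 1190


def gddr5_effective(clock_mhz: float) -> float:
    """Convert a GDDR5 memory clock to its effective data rate (x4)."""
    return clock_mhz * 4


def gain_percent(reference: float, overclocked: float) -> float:
    """Relative clock increase over the reference value, in percent."""
    return (overclocked - reference) / reference * 100


oc_mem_effective = gddr5_effective(OC_MEM_CLOCK)
gpu_gain = gain_percent(REF_GPU, OC_GPU)
mem_gain = gain_percent(REF_MEM_EFFECTIVE, oc_mem_effective)

print(f"GPU:    +{gpu_gain:.1f}%")
print(f"Memory: +{mem_gain:.1f}% ({oc_mem_effective:.0f} MHz effective)")
```

Run as-is, this reports roughly +10.8% on the GPU and +18.8% on the memory, so the memory gained noticeably more headroom than the core.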

The fan isn’t too loud during intensive gaming, and we can finally say that we’re pleased with the noise levels. Speeding up the fan didn’t contribute much to overclocking headroom: MSI’s Afterburner v.2.0 let us set the fan to its maximum of 85%, at which point we were able to push the GPU to 860MHz, only 5MHz more than in AUTO mode. The beta version of MSI Afterburner supports voltage adjustment, so we plan to experiment with it later.

Nvidia uses a new technology on the GTX 580, dubbed Advanced Power Management, which monitors power consumption and applies power capping to protect the card from excessive power draw.

Dedicated hardware circuitry on the GTX 580 monitors current and voltage in real time. The graphics driver tracks these power levels and dynamically adjusts performance in certain stress applications, such as FurMark and OCCT, if power levels exceed the card’s specification.

Power monitoring adjusts performance only if power specs are exceeded and the application is one of those Nvidia’s driver is set to monitor, such as FurMark and OCCT. This should not significantly affect gaming performance, and Nvidia claims that no game has so far managed to wake this mechanism from its slumber. For now, power capping cannot be turned off.

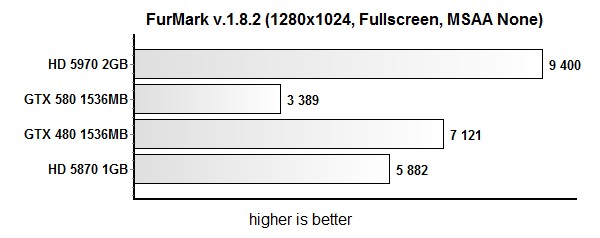

The GTX 580’s power caps are set close to the PCI Express spec for each 12V rail (6-pin, 8-pin and PCI Express slot). Once power capping kicks in, chip clocks drop by 50%. This seems to be why the GTX 580 scores poorly compared to the GTX 480 in FurMark.
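The throttling rule described above (act only when a listed application pushes a 12V rail past its limit, then halve the clocks) can be modelled roughly as follows. This is a hypothetical sketch for illustration, not Nvidia’s driver code: the application names, rail keys and data structures are our own assumptions. Because Nvidia only says its caps sit close to spec, the limits used here are the PCI Express ceilings: 66W on the slot’s 12V pins (5.5A at 12V), 75W for a 6-pin and 150W for an 8-pin connector.

```python
from dataclasses import dataclass

# Hypothetical per-rail limits in watts, based on PCI Express ceilings.
# Nvidia's real thresholds are only described as "close to spec".
RAIL_LIMITS_W = {"pcie_slot_12v": 66.0, "six_pin": 75.0, "eight_pin": 150.0}

# Hypothetical watch list; the driver's actual application profile is not public.
WATCHED_APPS = {"furmark.exe", "occt.exe"}


@dataclass
class Sample:
    app: str                         # executable currently running
    rail_power_w: dict[str, float]   # measured power per 12V rail, in watts


def over_spec(sample: Sample) -> bool:
    """True if any monitored rail draws more than its limit."""
    return any(sample.rail_power_w.get(rail, 0.0) > limit
               for rail, limit in RAIL_LIMITS_W.items())


def target_clock(sample: Sample, base_clock_mhz: float) -> float:
    """Halve the clock only for watched apps that exceed a rail limit."""
    if sample.app.lower() in WATCHED_APPS and over_spec(sample):
        return base_clock_mhz * 0.5
    return base_clock_mhz


# A game over the limit is not on the watch list, so it keeps full clocks;
# FurMark over the 8-pin limit gets cut to half speed.
game = Sample("game.exe", {"pcie_slot_12v": 70.0, "six_pin": 80.0, "eight_pin": 160.0})
stress = Sample("FurMark.exe", {"pcie_slot_12v": 60.0, "six_pin": 70.0, "eight_pin": 160.0})
print(target_clock(game, 772))
print(target_clock(stress, 772))
```

The two conditions are combined with a logical AND, which mirrors the article’s point that games are left alone even if they exceed spec, while listed stress tools are throttled as soon as any single rail goes over its limit.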

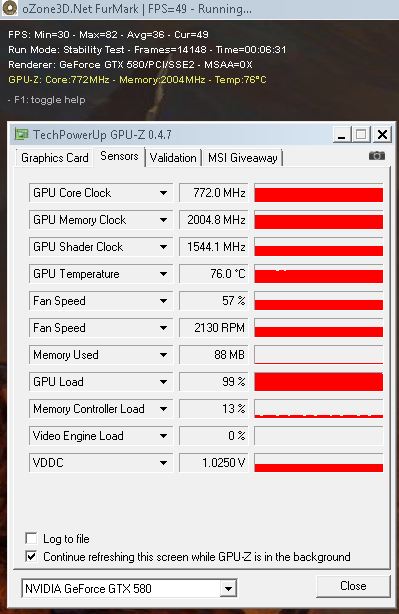

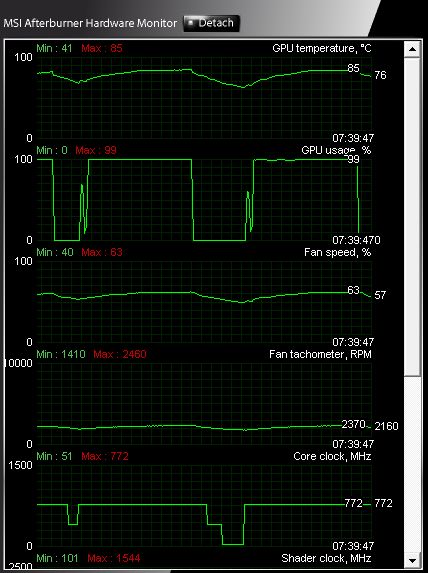

FurMark temperatures didn’t exceed 76°C, which isn’t very realistic: in gaming tests we measured up to 85°C, which suggests that Nvidia overdid the preventive measures.

GPU-Z 0.4.7 doesn’t show the downclocking during FurMark, but the new version is set to change that. Below is the GPU temperature graph we captured during our Aliens vs. Predator tests.

After overclocking, temperatures were at the same level as before. The fan was a bit louder, but still not too loud.

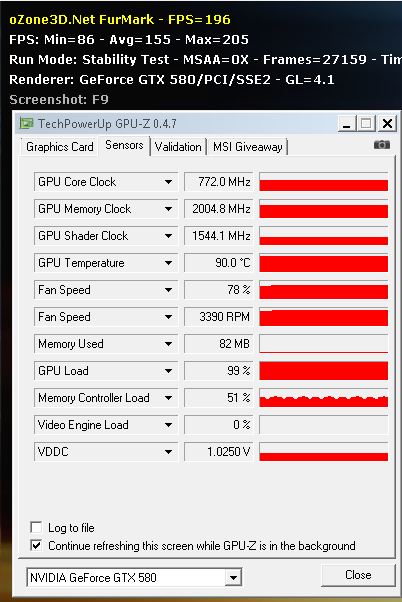

The older FurMark version 1.6 shows that the GPU can hit 90°C.

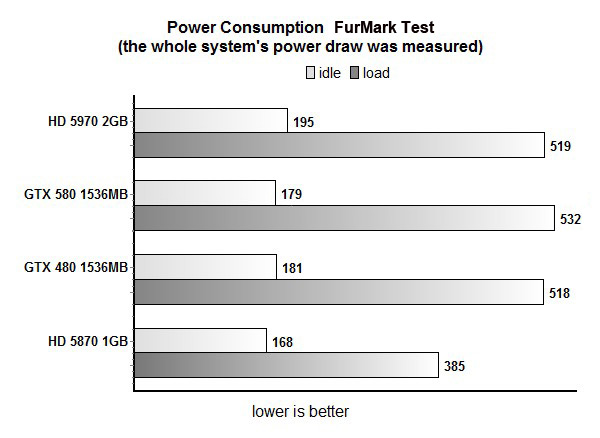

Power consumption is on par with the GTX 480. We show results from the older FurMark, because with the new FurMark our rig never drew more than 367W. During gaming, we measured total system consumption of about 450W.