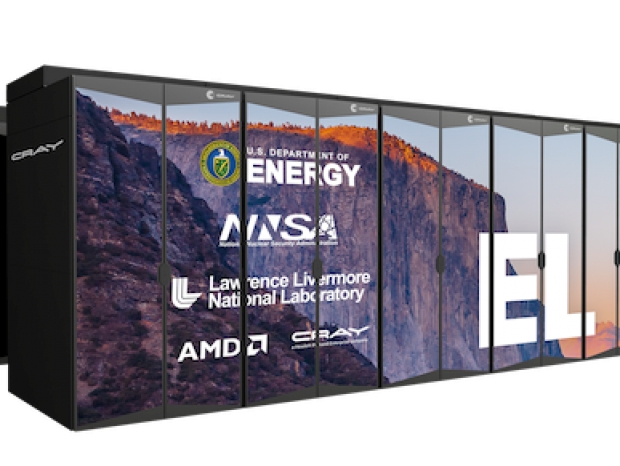

El Capitan will be the US’s second exascale system powered by AMD CPUs and GPUs. It is expected to be more powerful than today’s 200 fastest supercomputers combined and 10 times faster than the world’s current fastest supercomputer.

El Capitan will support National Nuclear Security Administration (NNSA) requirements for its primary mission of ensuring the safety, security and reliability of the nation’s nuclear stockpile. The AMD-based nodes will be optimised to accelerate artificial intelligence and machine learning workloads that benefit NNSA missions.

The system features next-generation AMD EPYC processors, codenamed “Genoa” and featuring the “Zen 4” processor core; next-generation AMD Radeon Instinct GPUs based on a new compute-optimised architecture; 3rd Gen AMD Infinity Architecture; and open-source AMD ROCm heterogeneous computing software.

With delivery projected for early 2023, the El Capitan system will follow the 2021 delivery of the AMD-powered Frontier exascale-class supercomputer, which was announced in May 2019 with 1.5 exaflops of expected processing performance.

LLNL Lab Director Bill Goldstein said: “This unprecedented computing capability, powered by advanced CPU and GPU technology from AMD, will sustain America’s position on the global stage in high performance computing and provide an observable example of the commitment of the country to maintaining an unparalleled nuclear deterrent.”

The GPUs will provide the majority of El Capitan’s peak floating-point performance. This will enable LLNL scientists to run high-resolution 3D models more quickly and to increase the fidelity and repeatability of calculations, making those simulations truer to life.

Anticipated to be one of the most capable supercomputers in the world, El Capitan will have significantly greater per-node capability than any current system, LLNL researchers said.

El Capitan’s graphics processors will be amenable to AI and machine learning-assisted data analysis, further propelling LLNL’s sizable investment in AI-driven scientific workloads. These workloads will supplement scientific models that researchers hope will be faster, more accurate and intrinsically capable of quantifying uncertainty in their predictions, and will be increasingly used for stockpile stewardship applications. The use of AMD’s GPUs is also anticipated to dramatically increase El Capitan’s energy efficiency compared with systems using today’s graphics processors.

Forrest Norrod, senior vice president and general manager, Datacenter and Embedded Systems Group, AMD said: “AMD is enabling the NNSA Tri-Lab community — LLNL, Los Alamos and Sandia national laboratories — to achieve their mission-critical objectives and contribute new AI advancements to the industry.

“We are extremely proud to continue our exascale work with HPE and NNSA and look forward to the delivery of the most powerful supercomputer in the world, expected in early 2023.”

El Capitan will also integrate many advanced features that are not yet widely deployed, including HPE’s advanced Cray Slingshot interconnect network, which will enable large calculations across many nodes, an essential requirement for the NNSA laboratories’ simulation workloads.

In addition to the capabilities that Cray Slingshot provides, HPE and LLNL are partnering to explore new HPE optics technologies that integrate electrical-to-optical interfaces and could transmit more data at faster speeds with improved power efficiency and reliability. El Capitan will also feature the new Cray Shasta software platform, whose new container-based architecture is intended to make administrators and developers more productive and to orchestrate LLNL’s complex new converged HPC and AI workflows at scale.