Index

- Nvidia Geforce RTX-series is born

- Turing architecture and RTX series

- The new Turing architecture in more detail

- Shader improvements and GDDR6 memory

- Nvidia RTX Ray Tracing and DLSS

- The Geforce RTX 2080 Ti and RTX 2080 graphics cards

- Test Setup

- First performance details, UL 3DMark

- Shadow of the Tomb Raider, Assassin’s Creed: Origins

- The Witcher 3, Battlefield 1

- F1 2018, Wolfenstein II: The New Colossus

- Power consumption, temperatures and overclocking

- Conclusion


Review: Ray-tracing, plenty of performance and bright future for gaming

At a dedicated event during Gamescom 2018 in Cologne, Germany, Nvidia officially unveiled the new Geforce RTX series and the Turing GPU. It only gave a glimpse of what is coming and what it will mean for future gaming, but in our book, that future is bright.

In case you missed it, when Jensen Huang took the stage, he revealed that the Nvidia RTX series is based on a brand new Turing architecture which, in Nvidia’s words, “reinvents graphics”. Turing adds new parts to the GPU, like the RT and Tensor Cores, along with new AI features and advanced shading, and it pushes game development in a new direction, one that is not only exciting for gamers but also seems to be pulling a lot of game developers to its side.

Despite the lack of competition, which is to blame for most of the shortcomings of the new Nvidia Geforce RTX series, Nvidia is pushing hard: it is raising the performance level, bringing new features (some of which will take time to actually become viable), and pushing game development toward a new level of graphics and realism in PC games.

Turing architecture and RTX series

Before we get into deeper details, in case you missed the original announcement, the Nvidia Geforce RTX series is based on the new Turing GPU, a gaming-oriented GPU which has elements of Nvidia’s Volta GPU.

Built on a 12nm manufacturing process, the Turing TU102 GPU is the second biggest chip Nvidia has ever made, at 18.6 billion transistors, just behind the Volta GV100.

As noted earlier, the Turing GPU has a complex design that combines streaming multiprocessors (SMs), completely redesigned for the RTX series, with specialized RT and Tensor Cores, which handle ray tracing and AI-oriented tasks, respectively. We will get to those a bit later.

Nvidia has announced a trio of RTX-series graphics cards, some of which will be available as of tomorrow, September 20th, including the Geforce RTX 2070, Geforce RTX 2080, and the Geforce RTX 2080 Ti.

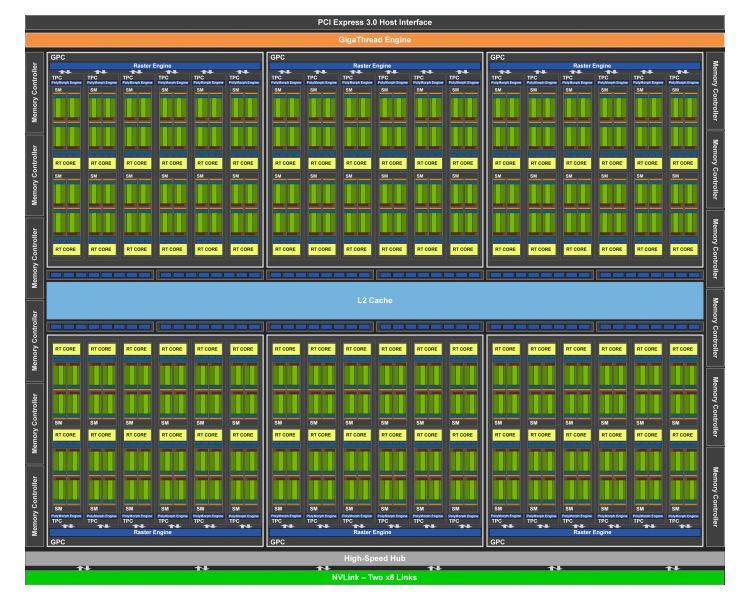

The flagship Geforce RTX 2080 Ti is based on the Turing TU102 GPU and packs a total of 4,352 CUDA cores (in six graphics processing clusters (GPCs), 34 TPCs and 68 streaming multiprocessors (SMs)), 68 RT Cores, and 544 Tensor Cores (eight per SM).

It packs 11GB (11264MB) of 14Gbps GDDR6 memory on a 352-bit memory interface, for a total memory bandwidth of 616GB/s. Bear in mind that the RTX 2080 Ti uses a cut-down TU102 GPU, while the fully-enabled one, with 4,608 CUDA cores, 576 Tensor Cores and 72 RT Cores, is reserved for the Quadro RTX 6000.
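The bandwidth figures quoted for these cards follow directly from the memory interface width and the per-pin data rate. A minimal Python sketch of that arithmetic, using only the bus widths and the 14Gbps GDDR6 rate given in this review:

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface (e.g. 352 bits on the RTX 2080 Ti)
    data_rate_gbps: per-pin data rate in Gbps (14Gbps for the GDDR6 used here)
    """
    # Every pin moves data_rate_gbps gigabits per second; divide by 8 for bytes.
    return bus_width_bits * data_rate_gbps / 8


for name, bus, rate in [("RTX 2080 Ti", 352, 14), ("RTX 2080", 256, 14), ("RTX 2070", 256, 14)]:
    print(f"{name}: {memory_bandwidth_gb_s(bus, rate):.0f} GB/s")
```

This gives 616GB/s for the 352-bit RTX 2080 Ti and 448GB/s for the 256-bit RTX 2080 and RTX 2070, which is why the latter two end up with identical bandwidth.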

The Nvidia Geforce RTX 2080 is based on the Turing TU104 GPU, and it is not a fully-enabled TU104 either, which leaves a bit of room for a possible different SKU based on the same GPU. The TU104 on the Geforce RTX 2080 still features six GPCs, but two of its 48 SMs are disabled, leaving it with a total of 2,944 CUDA cores, 46 RT Cores, and 368 Tensor Cores. It comes with 8GB of 14Gbps GDDR6 memory on a 256-bit memory interface, for a memory bandwidth of 448GB/s.

The RTX 2070, which comes at a later date, is based on the smaller TU106 GPU. Since there was still room to cut down the TU104 GPU further, it appears that Nvidia is leaving room for a possible RTX 2070 Ti as well.

The TU106 GPU is pretty much half of a TU102: it has three GPCs, each with 12 SMs, which gives the RTX 2070 2,304 CUDA cores, 36 RT Cores, and 288 Tensor Cores. It is also worth noting that TU106 lacks the NVLink interface, the new higher-bandwidth interface for connecting multiple (read: two) graphics cards.
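All of the headline unit counts above scale with the number of enabled SMs. The sketch below assumes the per-SM resources described in the architecture section (64 CUDA cores, eight Tensor Cores and one RT Core per SM) and derives each configuration from its SM count:

```python
from typing import NamedTuple

# Per-SM resources on Turing (assumed from the SM description in this review).
CUDA_PER_SM = 64
TENSOR_PER_SM = 8
RT_PER_SM = 1


class UnitCounts(NamedTuple):
    cuda_cores: int
    tensor_cores: int
    rt_cores: int


def units_from_sms(enabled_sms: int) -> UnitCounts:
    """Derive CUDA, Tensor and RT Core counts from the number of enabled SMs."""
    return UnitCounts(
        enabled_sms * CUDA_PER_SM,
        enabled_sms * TENSOR_PER_SM,
        enabled_sms * RT_PER_SM,
    )


# Enabled SM counts for the configurations discussed in this section.
configs = {
    "TU102 full (Quadro RTX 6000)": 72,
    "RTX 2080 Ti (TU102)": 68,
    "RTX 2080 (TU104)": 46,
    "RTX 2070 (TU106)": 36,
}
for name, sms in configs.items():
    print(name, units_from_sms(sms))
```

The 68 SMs of the RTX 2080 Ti, for example, yield 4,352 CUDA cores, 544 Tensor Cores and 68 RT Cores. The full 72-SM TU102 gives the 4,608/576/72 split of the Quadro RTX 6000.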

The new Turing architecture in more detail

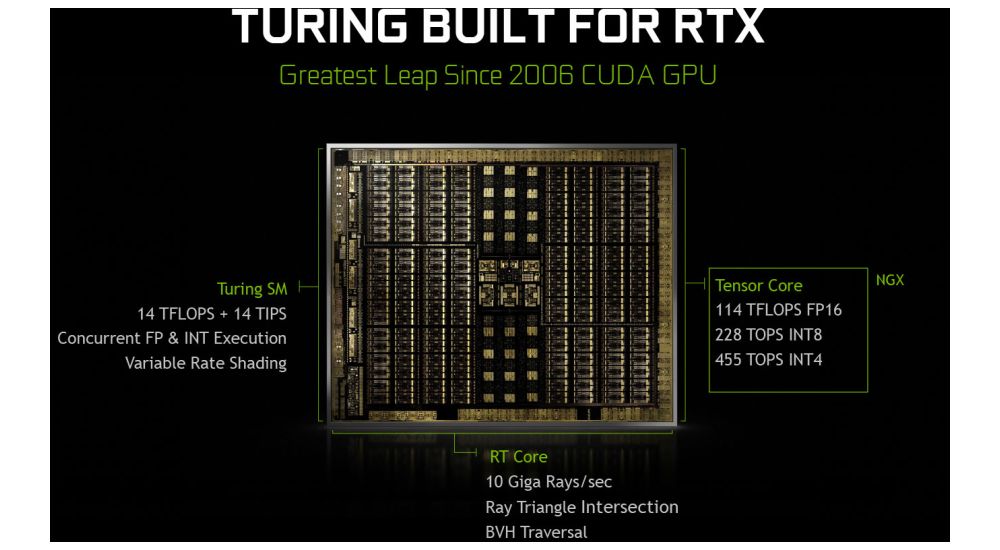

The Turing GPU has a complex design, which brings a new core architecture, Tensor and RT Cores, as well as advanced shading, Nvidia NGX for deep learning, NVLink, USB-C and VirtualLink, and more.

The Turing GPU is based on the 12nm FFN manufacturing process, and at 18.6 billion transistors, the TU102 is the second biggest GPU Nvidia has made.

The fully-enabled TU102 GPU features a total of six Graphics Processing Clusters (GPCs), 36 Texture Processing Clusters (TPCs), and 72 Streaming Multiprocessors (SMs). Each GPC comes with its dedicated raster engine and six TPCs.

Each TPC contains two SMs, and each SM has 64 CUDA cores, eight Tensor Cores, a 256KB register file, four texture units, 96KB of L1/shared memory, and one RT Core. Compared to Pascal, Nvidia raised the L1 cache bandwidth and lowered the L1 latency by using the unified 96KB L1/shared memory, and it also doubled the L2 cache to 6MB on the full TU102.

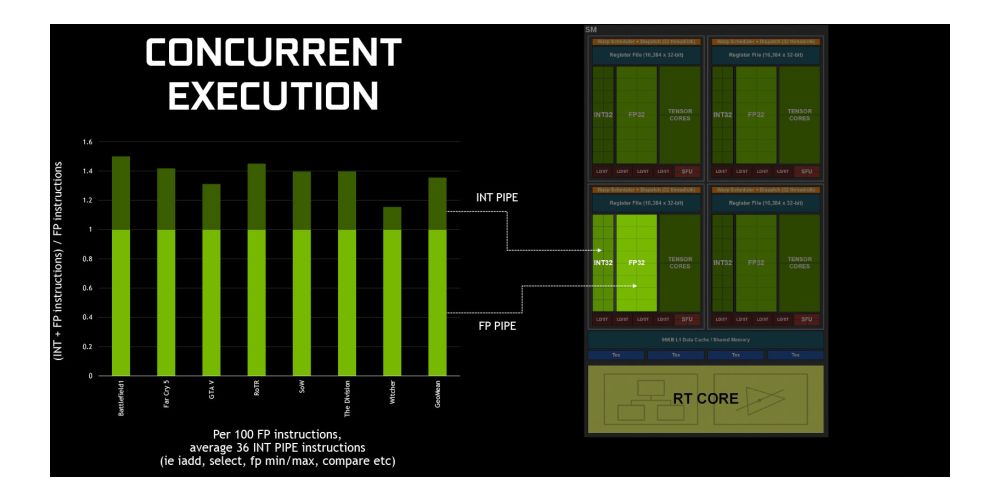

The new Turing Streaming Multiprocessor (SM) borrows some features from the Volta architecture: each SM is split into four processing blocks, each with 16 FP32 cores, 16 INT32 cores, two Tensor Cores, a warp scheduler, a dispatch unit, an L0 instruction cache, and a 64KB register file.

While Pascal stuck with one SM per TPC and 128 FP32 cores per SM, the Turing SM supports concurrent execution of FP32 and INT32 instructions. According to Nvidia, modern applications usually issue about 36 integer (INT) instructions for every 100 floating point (FP) instructions, so moving them to a separate pipe translates into an effective 36 percent additional throughput for floating point.
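The 36 percent figure can be reproduced with a deliberately simple issue model. This is an assumption for illustration, not Nvidia’s methodology: every instruction takes one issue slot, a shared pipe runs FP and INT work back to back, and separate pipes let them overlap.

```python
def fp_throughput_gain(int_per_100_fp: float) -> float:
    """Idealised speed-up from giving INT32 instructions their own pipe.

    Simplified model (assumption): one issue slot per instruction.
    On a shared pipe, INT instructions take slots away from FP work;
    with separate pipes, the longer of the two streams sets the time.
    """
    fp = 100.0
    integer = int_per_100_fp
    shared_pipe_time = fp + integer      # Pascal-style: INT and FP share the pipe
    split_pipe_time = max(fp, integer)   # Turing-style: INT runs alongside FP
    return shared_pipe_time / split_pipe_time


gain = fp_throughput_gain(36)
print(f"{(gain - 1) * 100:.0f}% more effective FP throughput")
```

With Nvidia’s 36:100 mix, the shared pipe needs 136 slots while the split pipes need 100, hence the 36 percent gain. Real shaders also stall on memory, so this is an upper bound.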

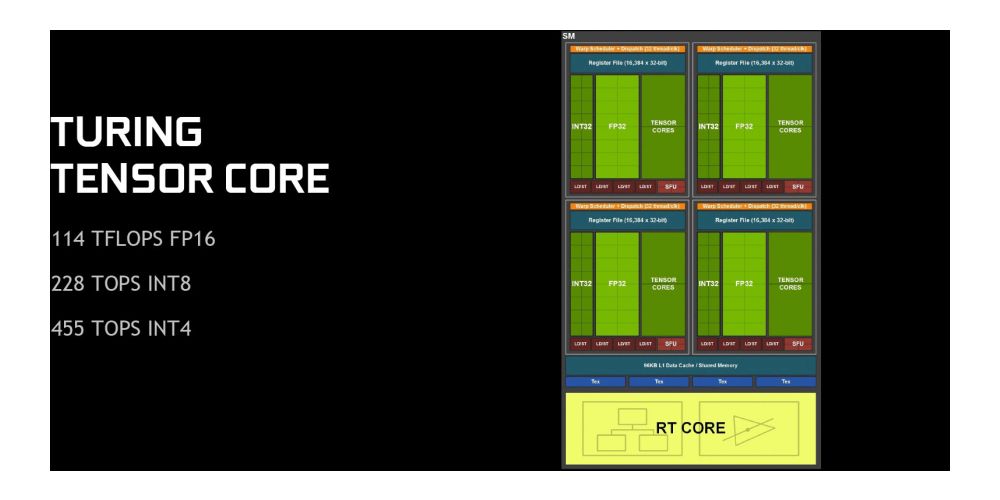

Nvidia has brought the Tensor Cores from its Volta architecture to Turing and added INT8 and INT4 precision modes for inference workloads, while FP16 remains fully supported for workloads that need higher precision. More importantly, the Tensor Cores are what allow the Turing GPU to take advantage of the AI-based features in Nvidia’s NGX Neural Services, which include Deep Learning Super Sampling (DLSS), AI InPainting, AI Super Rez, and AI Slow-Mo.

In terms of numbers, the 544 Tensor Cores of the Geforce RTX 2080 Ti Founders Edition deliver a combined 455 TOPS of INT4, 228 TOPS of INT8, and 114 TFLOPS of FP16 compute performance.
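These peak numbers are simply core count times per-core rate times clock. The sketch below assumes two things not stated in this review: each Turing Tensor Core performs 64 FP16 fused multiply-adds (128 operations) per clock, with INT8 and INT4 doubling and quadrupling that rate, and the figures use the 1635MHz Founders Edition boost clock.

```python
def tensor_peak_tops(tensor_cores: int, boost_mhz: float, ops_per_core_per_clock: int) -> float:
    """Peak Tensor Core throughput in tera-operations per second."""
    return tensor_cores * ops_per_core_per_clock * boost_mhz * 1e6 / 1e12


# Assumed per-core rates: 64 FP16 FMAs per clock (2 ops each) = 128 ops,
# doubled for INT8 and doubled again for INT4.
FP16_OPS = 64 * 2
INT8_OPS = FP16_OPS * 2
INT4_OPS = FP16_OPS * 4

CORES, BOOST_MHZ = 544, 1635  # RTX 2080 Ti Founders Edition
for label, ops in [("FP16", FP16_OPS), ("INT8", INT8_OPS), ("INT4", INT4_OPS)]:
    print(f"{label}: {tensor_peak_tops(CORES, BOOST_MHZ, ops):.1f}")
```

This works out to roughly 113.8 TFLOPS FP16, 227.7 TOPS INT8 and 455.4 TOPS INT4, which round to the figures above.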

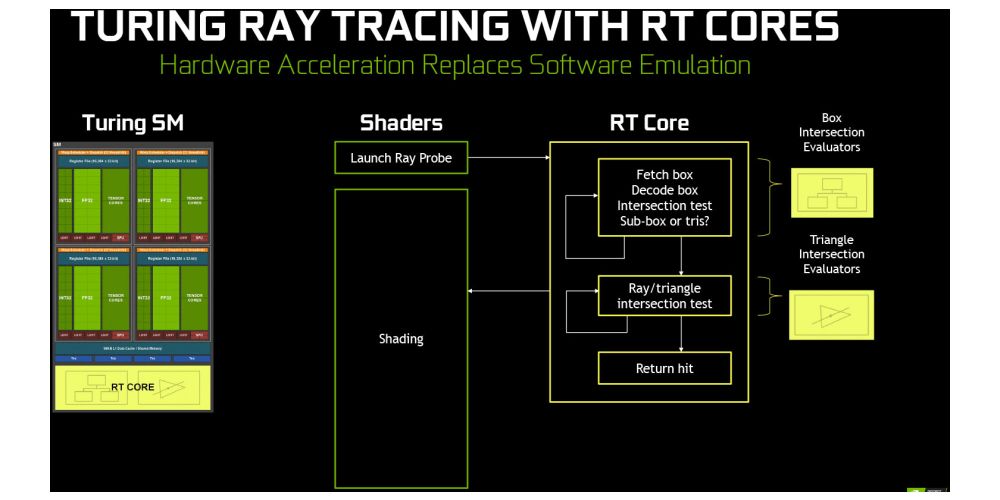

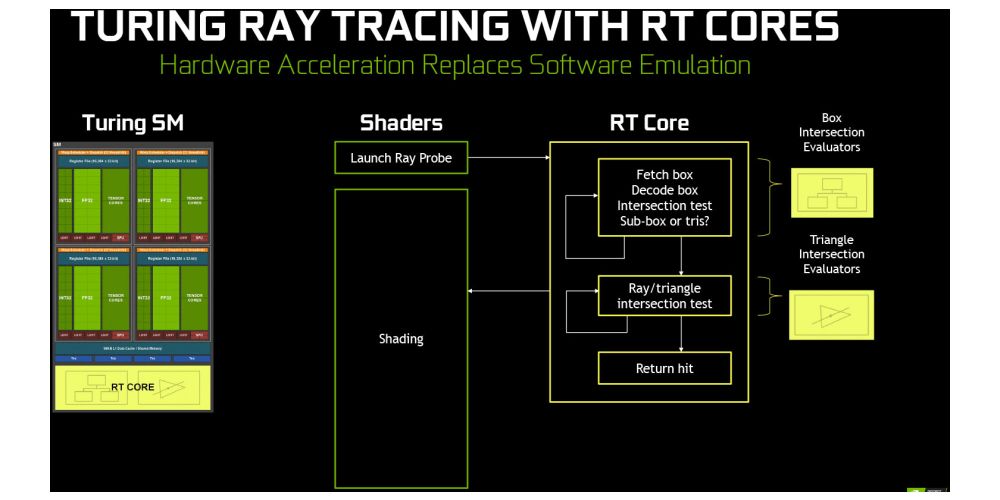

Each SM inside the Turing GPU also comes with an RT Core, a dedicated unit that should bring the holy grail of graphics, ray tracing, to the gaming market. Of course, even dedicated RT Cores cannot render a fully ray traced scene in real time, as that takes a lot of GPU power. Instead, Nvidia uses what it calls hybrid rendering: standard rasterization combined with ray tracing for specific effects, like global illumination, ambient occlusion, shadows, reflections, and refractions.

While we are yet to see an actual implementation of Nvidia RTX Ray Tracing beyond tech demos, the upcoming UL ray tracing benchmark, and the demos we saw during Gamescom 2018 with Battlefield V, Shadow of the Tomb Raider, Metro Exodus, and some other games, these demos, together with the excitement from developers, suggest that it should bring both incredible eye-candy and a new level of realism to future games.

Shader improvements and GDDR6 memory

In addition to the architectural improvements, Nvidia also brought several new shading features with the Turing GPU, including Mesh Shading, Variable Rate Shading (VRS), Texture-Space Shading, Multi-View Rendering, and more.

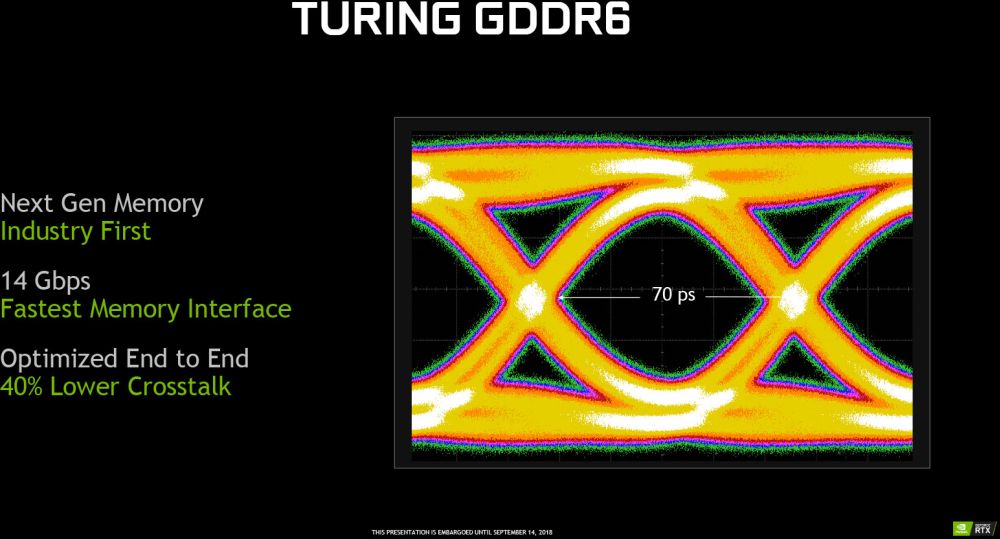

The Turing-based graphics cards will also be the first, and currently the only, gaming graphics cards with GDDR6 memory, which brings not only higher bandwidth but also other improvements, like better power efficiency and reduced signal crosstalk.

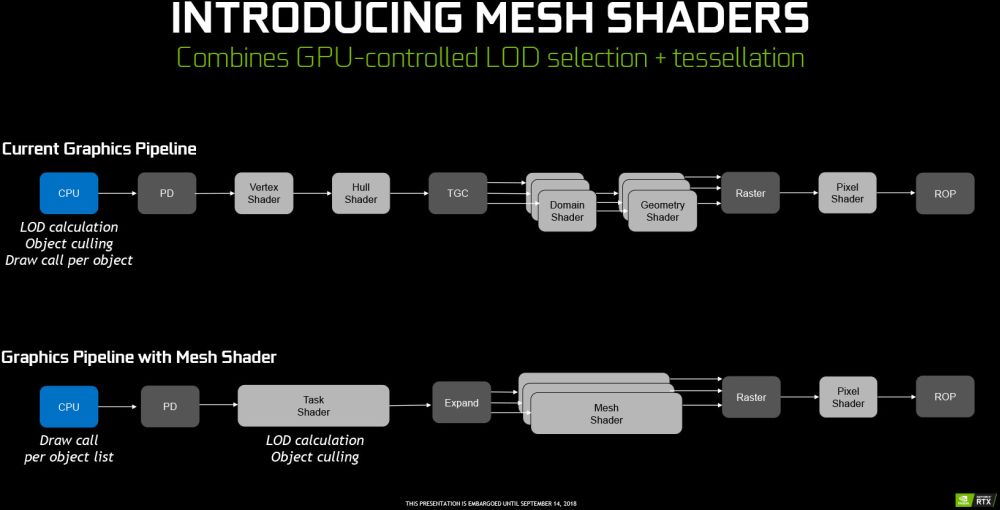

The most noticeable improvement in shading is Mesh Shading, a new shader model for the vertex, tessellation, and geometry shading stages that provides a more flexible and efficient approach. It offloads object list processing from the CPU to the GPU and enables new algorithms for advanced geometric synthesis and object LOD management.
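Mesh shaders typically work on small clusters of triangles, often called meshlets, so that the GPU can cull or pick LODs per cluster rather than per object. This review does not describe how meshes are prepared. The sketch below shows one common approach, a greedy split of a triangle list into meshlets. The 64-vertex and 126-triangle limits are illustrative assumptions.

```python
from dataclasses import dataclass, field

# Illustrative per-meshlet limits (assumed values; engines tune these).
MAX_VERTICES = 64
MAX_TRIANGLES = 126


@dataclass
class Meshlet:
    vertices: list[int] = field(default_factory=list)  # global vertex indices used
    triangles: list[tuple[int, int, int]] = field(default_factory=list)  # local indices


def build_meshlets(indices: list[int]) -> list[Meshlet]:
    """Greedily pack a triangle index list into meshlets within the limits."""
    meshlets: list[Meshlet] = []
    current = Meshlet()
    local: dict[int, int] = {}  # global vertex index -> index inside current meshlet

    for t in range(0, len(indices), 3):
        tri = indices[t:t + 3]
        new_verts = [v for v in dict.fromkeys(tri) if v not in local]
        # Close the current meshlet if this triangle would overflow either limit.
        if (len(current.vertices) + len(new_verts) > MAX_VERTICES
                or len(current.triangles) + 1 > MAX_TRIANGLES):
            meshlets.append(current)
            current, local = Meshlet(), {}
            new_verts = list(dict.fromkeys(tri))
        for v in new_verts:
            local[v] = len(current.vertices)
            current.vertices.append(v)
        current.triangles.append((local[tri[0]], local[tri[1]], local[tri[2]]))

    if current.triangles:
        meshlets.append(current)
    return meshlets
```

Each meshlet stores its own small vertex list plus compact local indices, which keeps the data a single mesh shader workgroup needs small and self-contained.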

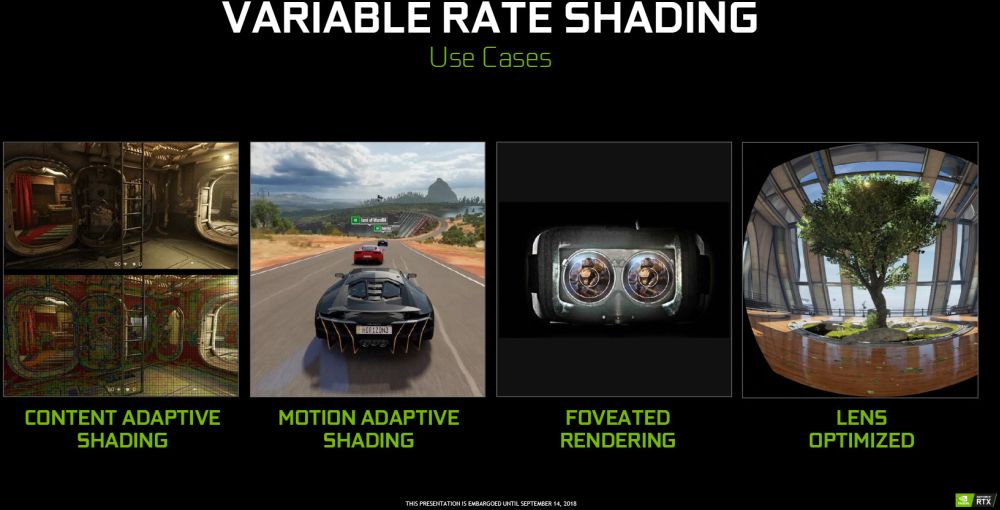

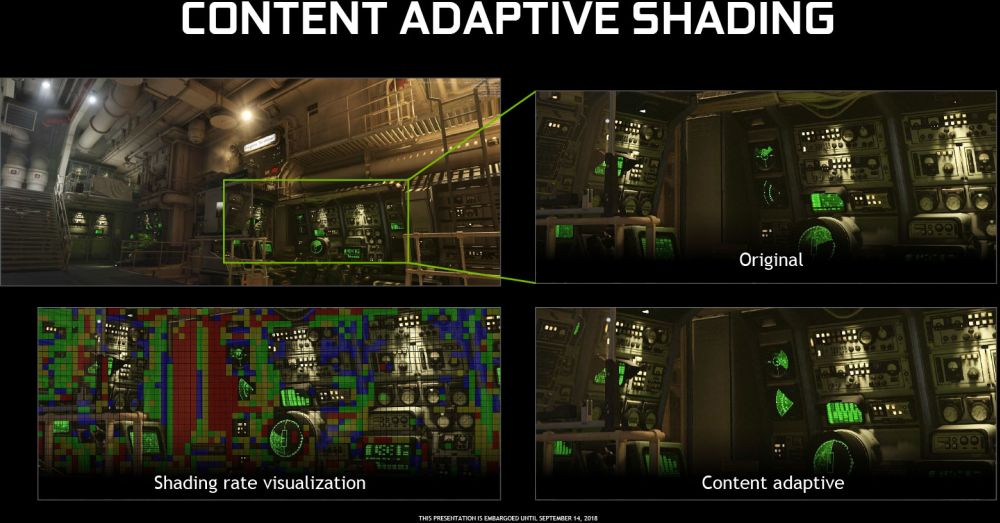

Variable Rate Shading (VRS) allows developers to control the shading rate dynamically, shading as little as once per sixteen pixels or as often as eight times per pixel. It is an evolution of earlier techniques like Multi-Resolution Shading (MRS) and Lens-Matched Shading (LMS). Nvidia has also brought three new VRS-based algorithms: Content Adaptive Shading, which reduces the shading rate in regions of slowly changing color; Motion Adaptive Shading, which decreases the shading rate on moving objects; and Foveated Rendering, which reduces the shading rate in areas outside the viewer’s focus, which is quite important in VR.
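Content Adaptive Shading boils down to choosing a coarser rate wherever a screen tile barely changes color. Nvidia has not published its exact heuristic, so the sketch below is a hypothetical version. It measures luminance variance over a tile from the previous frame (assumed to be 16x16 pixels) and picks a 1x1, 2x2 or 4x4 rate using made-up thresholds.

```python
import statistics

# Coarse shading rates as (width, height) in pixels per shading sample;
# 4x4 is the "once per sixteen pixels" case.
RATES = [(1, 1), (2, 2), (4, 4)]


def pick_shading_rate(tile_luma: list[float], low: float = 0.002, high: float = 0.01) -> tuple[int, int]:
    """Choose a coarser shading rate when a tile's color barely changes.

    tile_luma: luminance values (0..1) of one tile from the previous frame.
    low/high:  hypothetical variance thresholds chosen for illustration.
    """
    variance = statistics.pvariance(tile_luma)
    if variance < low:
        return RATES[2]  # flat region: shade once per 4x4 block
    if variance < high:
        return RATES[1]  # gentle gradient: shade once per 2x2 block
    return RATES[0]      # detailed region: full-rate shading


sky = [0.80] * 256                                        # uniform 16x16 tile
checker = [float((i + i // 16) % 2) for i in range(256)]  # high-contrast 16x16 tile
print(pick_shading_rate(sky), pick_shading_rate(checker))
```

The uniform sky tile gets the coarsest 4x4 rate, while the high-contrast checkerboard keeps full-rate shading.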

With texture-space shading (TSS), objects are shaded in a private coordinate space (a texture space) that is saved to memory, and pixel shaders sample from that space rather than evaluating results directly. With the ability to cache shading results in memory and reuse/resample them, developers can eliminate duplicate shading work or use different sampling approaches that improve quality.
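The core idea of texture-space shading is “shade each texel once, sample it many times”. The sketch below models that with a memoized shading function keyed by integer texel coordinates. It is a simplification: real TSS writes results into a texture that pixel shaders filter-sample. The two views stand in for the left and right eye in VR, and the lighting function is a placeholder.

```python
from functools import lru_cache

shade_calls = 0  # counts how many expensive shading evaluations actually run


@lru_cache(maxsize=None)
def shade_texel(u: int, v: int) -> float:
    """Stand-in for an expensive lighting computation done in texture space."""
    global shade_calls
    shade_calls += 1
    return (u * 31 + v * 17) % 256 / 255.0  # placeholder "lighting" result


def shade_pixel(u: int, v: int) -> float:
    """Pixel shader that reuses the cached texture-space result."""
    return shade_texel(u, v)


# Two views that see the same 32x32 block of texels.
visible_texels = [(u, v) for u in range(32) for v in range(32)]
left = [shade_pixel(u, v) for u, v in visible_texels]
right = [shade_pixel(u, v) for u, v in visible_texels]
print(len(left) + len(right), "pixels shaded with", shade_calls, "texel evaluations")
```

Both views together produce 2,048 pixels, but the expensive shading runs only 1,024 times because the second view hits the cache. That is the duplicate work TSS lets developers eliminate.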

Multi-View Rendering (MVR) extends the Single Pass Stereo feature, allowing multiple views to be rendered in a single pass even if they have totally different origin positions or view directions, which is especially important for accelerating Virtual Reality (VR) rendering.

The Nvidia Turing GPU is the first to use GDDR6 memory, which brings improvements in speed, power efficiency, and signal crosstalk. Running at a 14Gbps signaling rate, GDDR6, which was developed in close cooperation with the DRAM industry, should bring higher performance while improving power efficiency by 20 percent and reducing signal crosstalk by 40 percent, at least compared to the GDDR5X memory used with Pascal GPUs.

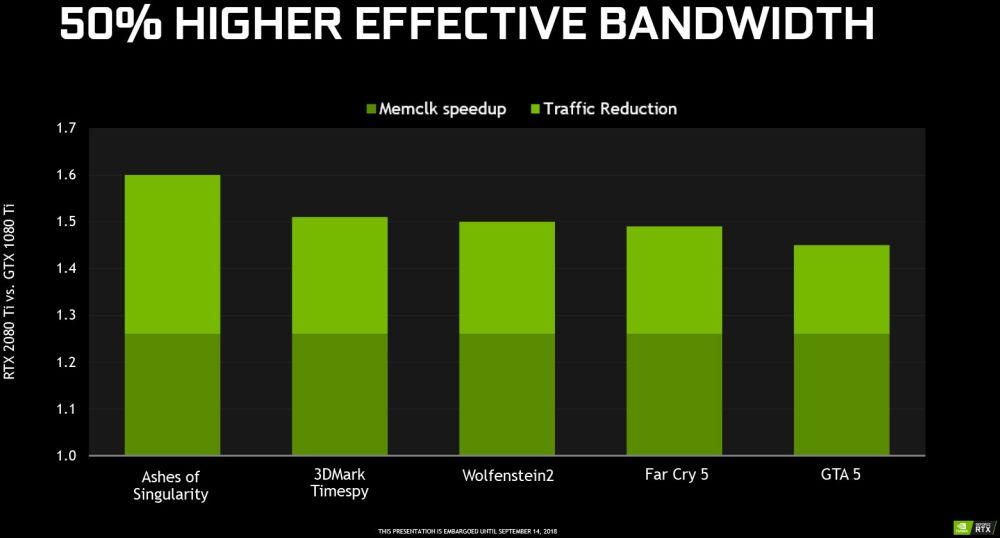

Nvidia also improved memory compression with an updated compression engine that uses algorithms to pick the most efficient way to compress data based on its characteristics. In short, this reduces the amount of data written to memory, transferred from memory to the L2 cache, and moved between clients and the frame buffer, which, according to Nvidia, brings a 50 percent increase in effective bandwidth compared to the Pascal architecture.
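Nvidia has not detailed its compression algorithms, but the idea of picking an encoding per block based on the data can be illustrated with a hypothetical lossless scheme. In this sketch a 16-pixel tile of 8-bit values is stored as a single constant, as an anchor plus 4-bit deltas, or raw, whichever fits. The modes and bit costs are assumptions for illustration only.

```python
def compress_tile(tile: list[int]) -> tuple[str, list[int]]:
    """Pick the cheapest lossless encoding for a tile of 8-bit values (hypothetical scheme)."""
    if all(p == tile[0] for p in tile):
        return "constant", [tile[0]]
    deltas = [b - a for a, b in zip(tile, tile[1:])]
    if all(-8 <= d <= 7 for d in deltas):  # every delta fits in a signed 4-bit value
        return "delta", [tile[0], *deltas]
    return "raw", list(tile)


def encoded_bits(mode: str, payload: list[int]) -> int:
    """Approximate storage cost, ignoring the few bits needed to store the mode."""
    if mode == "constant":
        return 8
    if mode == "delta":
        return 8 + 4 * (len(payload) - 1)  # 8-bit anchor plus 4-bit deltas
    return 8 * len(payload)


def decompress_tile(mode: str, payload: list[int], size: int) -> list[int]:
    """Reverse compress_tile exactly (the scheme is lossless)."""
    if mode == "constant":
        return payload * size
    if mode == "delta":
        out = [payload[0]]
        for d in payload[1:]:
            out.append(out[-1] + d)
        return out
    return list(payload)


gradient = [100 + i for i in range(16)]
mode, data = compress_tile(gradient)
print(mode, encoded_bits(mode, data), "bits instead of", 8 * len(gradient))
```

A smooth gradient tile compresses to 68 bits instead of 128. Every byte saved this way is one that never crosses the memory bus, which is how compression raises effective bandwidth.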

Nvidia RTX Ray Tracing and DLSS

What makes the Nvidia Geforce RTX series so interesting is not only the jump in raw GPU performance but also new features like Ray Tracing and Deep Learning Super-Sampling (DLSS). Both should push game development in a different direction, bringing much more realistic graphics to games and, in the case of DLSS, a significant performance uplift at little cost, all thanks to the RT and Tensor Cores.

Ray tracing has been the holy grail of graphics for quite some time, and while Nvidia’s implementation in the RTX series might not be “real-time ray tracing” in the full sense, it pushes game developers in the right direction. Thanks to the RT Cores, it accelerates key ray tracing operations, especially Bounding Volume Hierarchy (BVH) traversal and ray-triangle intersection testing.
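The two operations the RT Cores accelerate in hardware can be sketched in software to show what they do. This is a minimal Python illustration, not Nvidia’s implementation: it walks a BVH with an explicit stack, skips any bounding box the ray misses using the slab test, and tests leaf triangles with the Möller-Trumbore algorithm. The `Node` layout and helper names are hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass
from typing import Optional

Vec = tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


@dataclass
class Node:
    lo: Vec                     # bounding box minimum corner
    hi: Vec                     # bounding box maximum corner
    left: Optional[Node] = None
    right: Optional[Node] = None
    triangles: tuple = ()       # leaf payload: triangles as three vertices each


def hit_box(orig: Vec, inv_dir: Vec, lo: Vec, hi: Vec, t_max: float) -> bool:
    """Slab test: does the ray overlap the box somewhere in [0, t_max]?"""
    t0, t1 = 0.0, t_max
    for a in range(3):
        near = (lo[a] - orig[a]) * inv_dir[a]
        far = (hi[a] - orig[a]) * inv_dir[a]
        if near > far:
            near, far = far, near
        t0, t1 = max(t0, near), min(t1, far)
        if t0 > t1:
            return False
    return True


def hit_triangle(orig: Vec, d: Vec, tri, eps: float = 1e-9) -> Optional[float]:
    """Möller-Trumbore ray/triangle test; returns hit distance t or None."""
    v0, v1, v2 = tri
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return None  # ray is parallel to the triangle plane
    inv = 1.0 / det
    s = sub(orig, v0)
    u = dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(d, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv
    return t if t > eps else None


def closest_hit(root: Node, orig: Vec, d: Vec) -> Optional[float]:
    """Traverse the BVH, pruning subtrees whose boxes the ray misses."""
    inv_dir = tuple(1.0 / c if c != 0 else float("inf") for c in d)
    best = float("inf")
    stack = [root]
    while stack:
        node = stack.pop()
        if not hit_box(orig, inv_dir, node.lo, node.hi, best):
            continue
        for tri in node.triangles:
            t = hit_triangle(orig, d, tri)
            if t is not None and t < best:
                best = t
        stack.extend(c for c in (node.left, node.right) if c is not None)
    return None if best == float("inf") else best


# Two stacked triangles in separate leaves under one root box.
tri_a = ((0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (0.0, 1.0, 5.0))
tri_b = ((0.0, 0.0, 9.0), (1.0, 0.0, 9.0), (0.0, 1.0, 9.0))
root = Node((0, 0, 5), (1, 1, 9),
            Node((0, 0, 5), (1, 1, 5), triangles=(tri_a,)),
            Node((0, 0, 9), (1, 1, 9), triangles=(tri_b,)))
print(closest_hit(root, (0.25, 0.25, 0.0), (0.0, 0.0, 1.0)))
```

The ray reports the nearer triangle at distance 5.0. Shrinking `best` as hits are found lets later box tests reject more of the tree, which is where BVH traversal saves most of its work.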

Games will still use rasterization, and we won’t see a fully ray traced game for quite some time. Instead, RTX Ray Tracing will bring what Nvidia calls “hybrid rendering”, where ray tracing is used only for specific effects, like global illumination, ambient occlusion, realistic shadows, reflections, and refractions, without the performance hit of ray tracing the entire scene.

Some developers are quite excited, and probably the best use case will be seen in 4A Games’ Metro Exodus, as the studio released a neat video showing what can be done with Nvidia RTX Ray Tracing. EA/DICE will also implement some elements of RTX Ray Tracing in Battlefield V and has shown some of those effects in a video as well, while Shadow of the Tomb Raider uses RTX Ray Tracing effects to render realistic shadows.

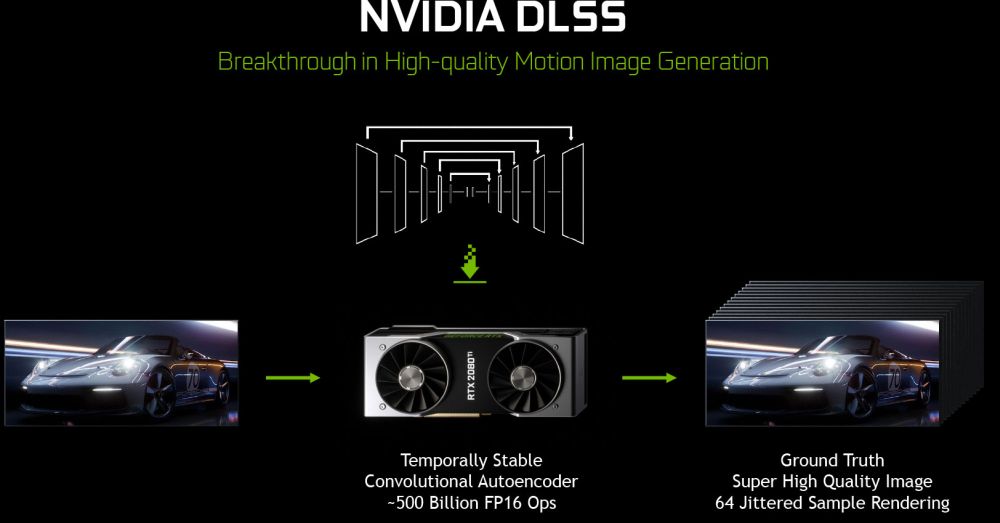

Deep Learning Super-Sampling (DLSS) is an RTX-exclusive anti-aliasing technique. Nvidia trains a DLSS model on “ground truth” images it generates, and the resulting model is delivered through the driver and run on the Tensor Cores, providing better image quality than some anti-aliasing techniques (like TAA) without their performance impact.

According to Nvidia, standard DLSS should deliver the image quality of Temporal Anti-Aliasing (TAA) at a much lower performance cost, while DLSS 2X should offer higher quality, approaching that of 64x super-sampled images.

You could argue that DLSS simply upscales the image, but it is more of a combination of upscaling, super-sampling, and some other techniques that should bring much better quality without the performance impact.
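Much of the performance argument for rendering at a lower resolution and reconstructing the output is plain pixel arithmetic. The sketch below assumes a 2560x1440 internal resolution for a 3840x2160 output. This review does not state the internal resolution DLSS uses, so treat that pair as an example only.

```python
def shaded_pixel_fraction(internal: tuple[int, int], output: tuple[int, int]) -> float:
    """Fraction of output pixels that are actually rendered before reconstruction."""
    return (internal[0] * internal[1]) / (output[0] * output[1])


# Assumed example: render at 2560x1440, reconstruct a 3840x2160 frame.
frac = shaded_pixel_fraction((2560, 1440), (3840, 2160))
print(f"{frac:.1%} of the 4K pixels are shaded")
```

In that case only 4/9 (about 44 percent) of the output pixels go through the full shading pipeline, and the Tensor Cores fill in the rest. The open question the demos leave is how close that reconstruction gets to native-resolution quality.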

The demos we saw do look quite impressive, but even Nvidia was still vague about what happens at different resolutions, so we will have to wait and see how well DLSS works in games, each of which essentially needs to go through Nvidia in order to get DLSS support.

The Geforce RTX 2080 Ti and RTX 2080 graphics cards

The first SKUs based on Nvidia’s new Turing architecture to hit the market are the Geforce RTX 2080 Ti and the RTX 2080, both of which have been available for pre-order and should start shipping on September 20th. The third SKU, the Geforce RTX 2070, is coming in October.

As noted at the beginning, the flagship Geforce RTX 2080 Ti is based on the Turing TU102 GPU and packs a total of 4,352 CUDA cores, 544 Tensor Cores, and 68 RT Cores.

The GPU runs at a 1350MHz base and 1545MHz GPU Boost frequency (1635MHz on the Founders Edition), and the card packs 11GB of GDDR6 memory, clocked at 14Gbps, on a 352-bit memory interface, for a total memory bandwidth of 616GB/s.

The TDP for the RTX 2080 Ti is set at 250W and it draws power from two 8-pin PCIe power connectors.

The RTX 2080 Ti Founders Edition comes with a special dual axial-fan cooler with vapor chamber technology, which should keep the TU102 GPU well cooled. It also features a full-cover backplate.

Display connectivity includes three DisplayPort 1.4a ports, HDMI 2.0b, and a special VirtualLink port, a dedicated USB-C port with DisplayPort routing that lets a single cable carry both power and the display signal to a Virtual Reality HMD.

The Nvidia Geforce RTX 2080 Ti comes with a $999 price tag for the reference version and $1199 for the Founders Edition, which should have higher overclocking potential as well as a better cooler than the reference version.

The Geforce RTX 2080 is based on the Turing TU104 GPU and packs a total of 2,944 CUDA cores, 368 Tensor Cores, and 46 RT Cores.

The GPU runs at a 1515MHz base and 1710MHz GPU Boost frequency (1800MHz on the Founders Edition), and the card packs 8GB of GDDR6 memory, clocked at 14Gbps, on a 256-bit memory interface, for a total memory bandwidth of 448GB/s.

The TDP for the RTX 2080 is set at 215W, and it draws power from 8-pin and 6-pin PCIe power connectors.

The RTX 2080 Founders Edition uses the same cooler as the RTX 2080 Ti FE and comes with the same display outputs.

The Nvidia Geforce RTX 2080 has a $699 price tag for the reference and $799 price tag for the Founders Edition.

The Nvidia Geforce RTX 2070, coming in October, will be based on the TU106 GPU with 2,304 CUDA cores, 288 Tensor Cores, and 36 RT Cores. It runs at a 1410MHz base and 1620MHz GPU Boost clock (1710MHz on the FE) and comes with 8GB of 14Gbps GDDR6 memory on a 256-bit memory interface, giving it the same memory bandwidth as the RTX 2080.

Thanks to the smaller TU106 GPU, the RTX 2070 has a 175W TDP and needs a single 8-pin PCIe power connector. It will start at $499 for the reference version and $599 for the Founders Edition. The RTX 2070 FE should have a dual axial-fan cooler similar to the one on the RTX 2080 and 2080 Ti.

Bear in mind that the RTX 2070 does not support NVLink, so it will not be possible to pair two of these in a multi-GPU configuration.

Test Setup

We are in the process of changing our test system, and retesting took a lot of time, which is why we did not have a chance to test as many games or look at some of the other features in detail. Hopefully, we will get to that eventually.

The Nvidia Geforce RTX 2080/2080 Ti benchmarks were done with Nvidia's 411.51 press driver, which should be the same as the new Geforce 411.63 WHQL driver released by Nvidia.

Processor

- AMD Ryzen 7 2700 8-core/16-thread AM4 at stock 3.2GHz base - provided by AMD

Motherboard

- MSI X470 Gaming M7 AC - provided by AMD

Memory

- Corsair Vengeance LPX DDR4 16GB (2x8GB) 3000MHz @ 15-17-17-35 at 1.35V

Storage

- ADATA XPG SX8200 M.2 480GB SSD

Case

- Cooler Master MasterCase H500P - provided by Cooler Master

Cooling

- Cooler Master MasterLiquid ML240L RGB - provided by Cooler Master

Power Supply

- Cooler Master V750 - provided by Cooler Master

Graphics cards

- Nvidia RTX 2080 FE and RTX 2080 Ti FE - provided by Nvidia

- Nvidia 10-series Geforce GTX 1080 Ti FE, GTX 1080 FE, GTX 1070 Ti and GTX 1070

- Sapphire Radeon RX Vega 64 8G

Drivers

- Nvidia RTX 2080 and RTX 2080 Ti - Nvidia Geforce 411.51 press driver

- Nvidia GTX 1080 Ti, GTX 1080, GTX 1070 Ti, GTX 1070 - Geforce 399.24 WHQL

- AMD Radeon Vega 64 - 18.8.2 WHQL

First performance details, UL 3DMark

Due to a tight schedule and the logistical nightmare that usually comes with a hardware launch, we did not manage to check out everything, so we will keep updating the article over the next couple of days and add a couple of other articles focused on Nvidia’s Geforce RTX series.

The sheer complexity of the Turing GPU and its new features, which are not yet available, is simply overwhelming, and we are quite sure there will be a lot of talk about the Nvidia RTX series in the days to come, especially as future games with support for some of those RTX features start to appear.

We also focused our testing on higher resolutions only, as it is highly unlikely that anyone would buy a high-end RTX-series card for lower resolution gaming; even the RTX 2080 has enough power to come close to 60fps at 4K/UHD resolution in most games at high graphics settings. Bear in mind that the performance difference grows at higher resolutions, so it could be smaller at 1080p.

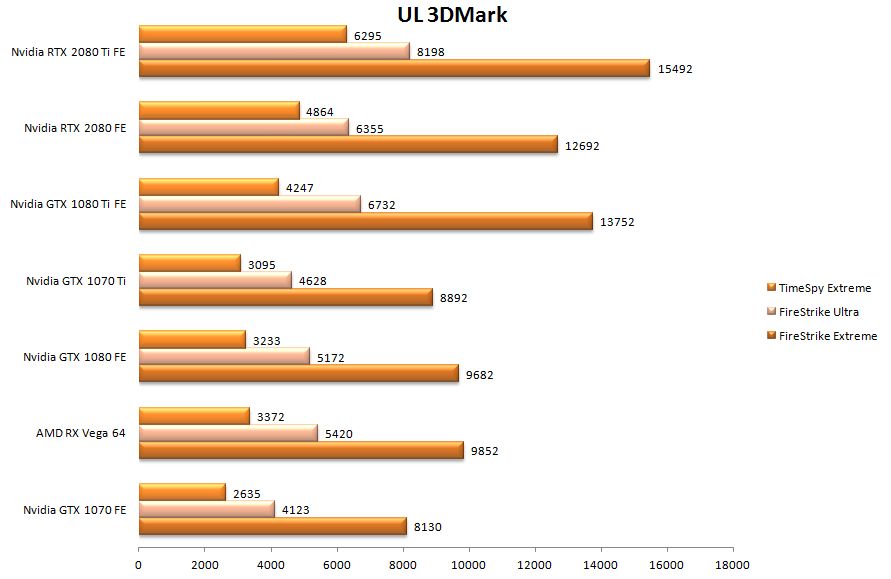

As far as UL 3DMark performance goes, the RTX 2080 Ti and the RTX 2080 Founders Edition are showing the power of a new architecture, and even without fancy new features, they outperform everything launched so far. Although not on the list, even the mighty Titan V is left behind by the new king of the hill, the Geforce RTX 2080 Ti.

The performance uplift compared to the previous Geforce GTX 10-series graphics cards is obvious and you can expect a performance gain of anywhere between 9 and 30 percent, depending on the game, and especially the resolution, at least when you compare the RTX 20-series to corresponding GTX 10-series graphics cards.

Shadow of the Tomb Raider, Assassin’s Creed: Origins

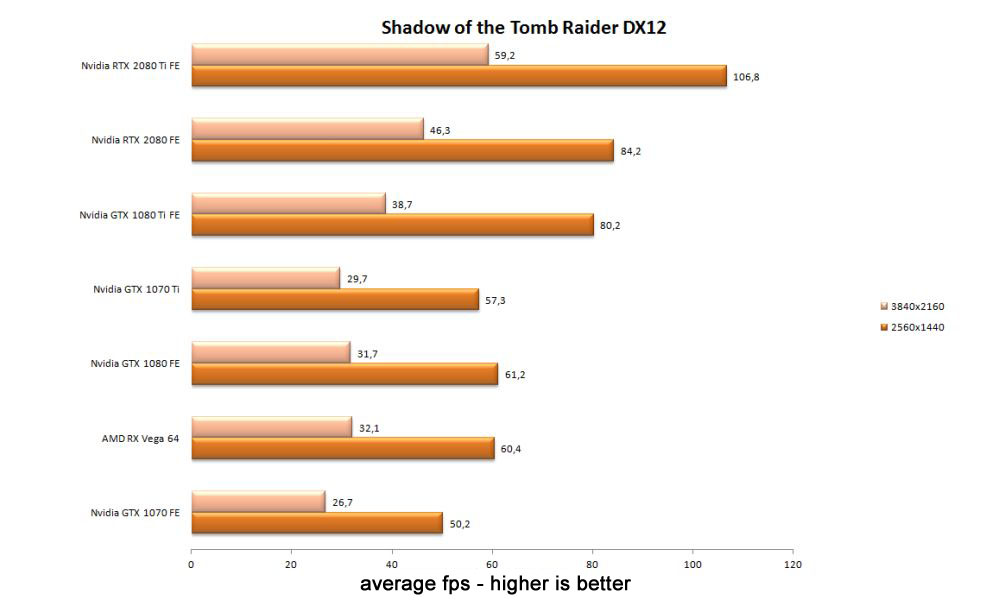

Shadow of the Tomb Raider is one of the games that will eventually use Nvidia RTX Ray Tracing for shadows as well as bring support for DLSS, both coming at a later date.

As you can see from the results, the Geforce RTX 2080 hits 84fps at 1440p and a solid 46fps average at 2160p resolution. The Geforce RTX 2080 Ti easily averages well over 100fps at 1440p and just under 60fps at 2160p. Of course, you can further tweak the graphics settings, but for comparison’s sake, we stuck to the highest settings in DirectX 12 mode.

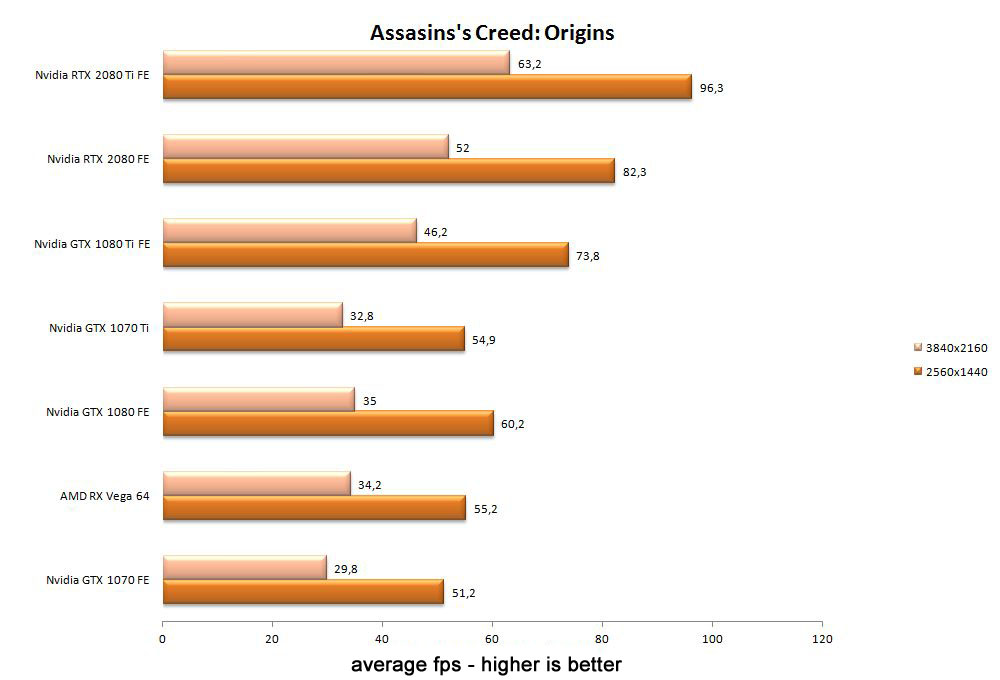

Ubisoft’s Assassin’s Creed: Origins is quite heavy on the GPU: the previous generation was far from delivering over 60fps at 2160p resolution, and the Geforce GTX 1080 could barely hit 60fps at 1440p.

The Geforce RTX 2080 Ti hits over 90fps at 1440p and averages over 60fps at 2160p resolution. With fewer CUDA cores, the Geforce RTX 2080 still managed 52fps at 2160p and 82fps at 1440p.

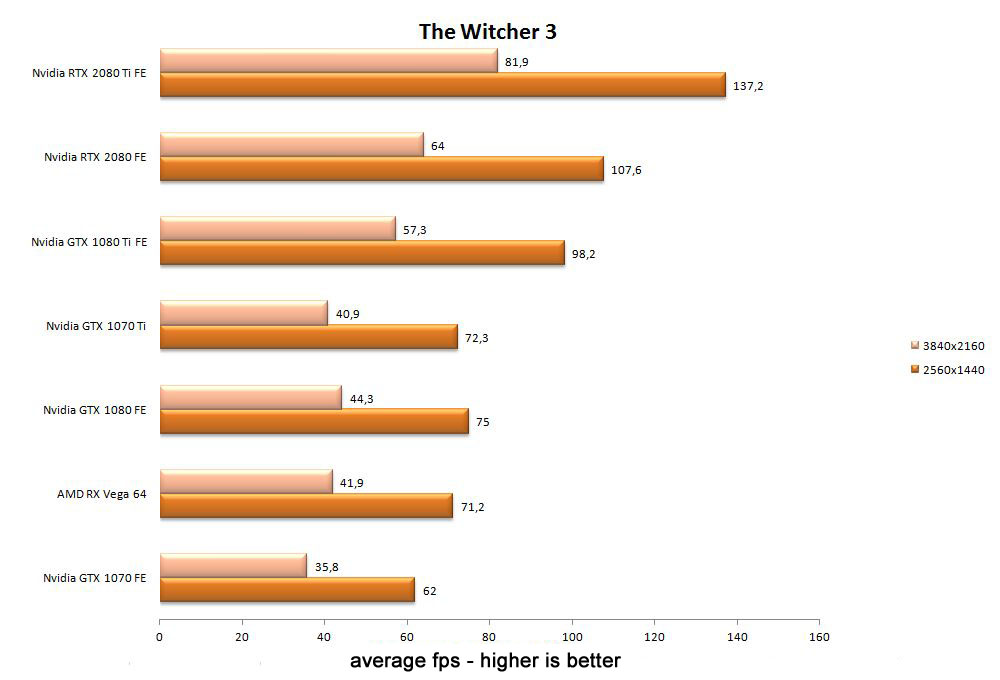

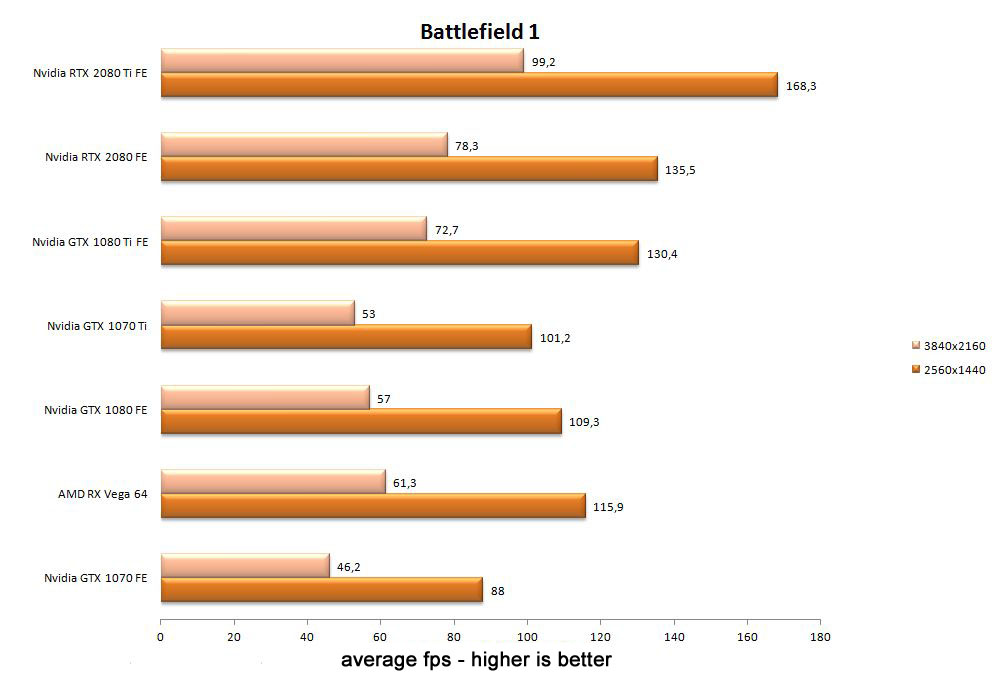

The Witcher 3, Battlefield 1

The Witcher 3 is still one of the most beautiful games we have played recently, and it does not come as a surprise that it can put a lot of pressure on even the new RTX series, but these new graphics cards managed decent scores, averaging over 60fps at 2160p resolution. Of course, Nvidia HairWorks was disabled.

The RTX 2080 Ti hit over 80fps at 2160p resolution, and over 130fps at 1440p. We did try 1080p as well and we were getting well over 170fps. The RTX 2080 is enough for just above 60fps at 2160p and for over 100fps at 1440p.

As we wait for Battlefield V, Battlefield 1 is the next best thing from EA/DICE, and the RTX series had no trouble handling this DirectX 12 title, especially at higher resolutions.

The RTX 2080 Ti hits 99.2fps at 2160p resolution and 168.3fps at 1440p. Even the RTX 2080 does well at 78.3fps at 2160p and 135.5fps at 1440p resolution. The Radeon RX Vega 64 does well in Battlefield 1, even beating the GTX 1080.
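The gap between the two new cards can be expressed as a relative uplift. This short sketch uses only the Battlefield 1 averages reported above.

```python
def percent_gain(new_fps: float, old_fps: float) -> float:
    """Relative performance uplift of new_fps over old_fps, in percent."""
    return (new_fps / old_fps - 1.0) * 100.0


# Battlefield 1 averages from this review: RTX 2080 Ti vs RTX 2080.
for res, ti, non_ti in [("2160p", 99.2, 78.3), ("1440p", 168.3, 135.5)]:
    print(f"{res}: RTX 2080 Ti is {percent_gain(ti, non_ti):.1f}% faster")
```

That puts the RTX 2080 Ti roughly 27 percent ahead at 2160p and about 24 percent ahead at 1440p, consistent with the gap widening at higher resolutions.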

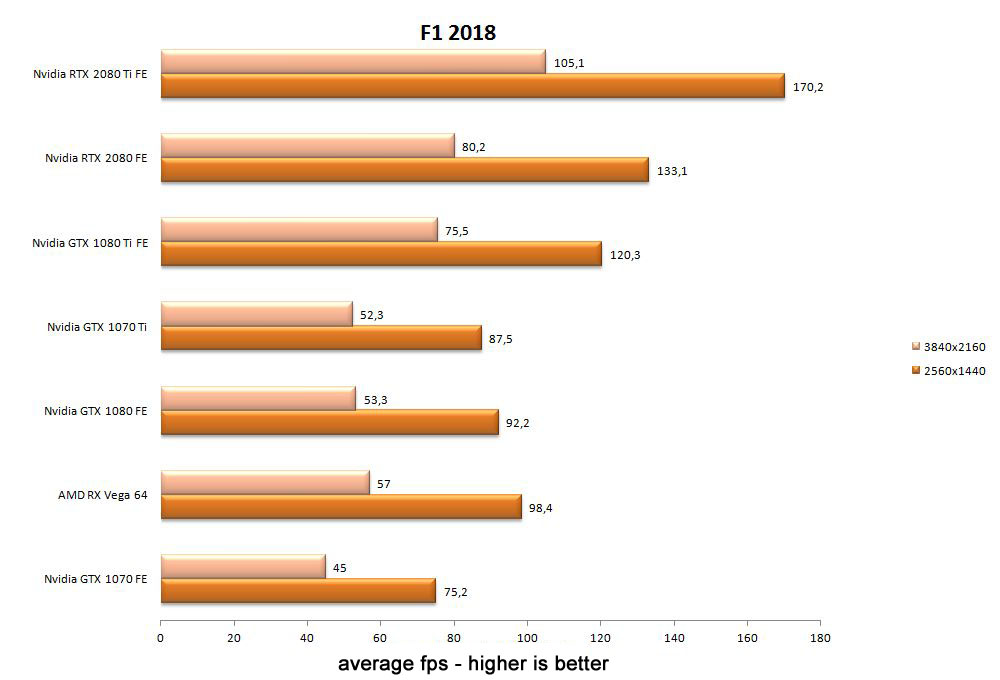

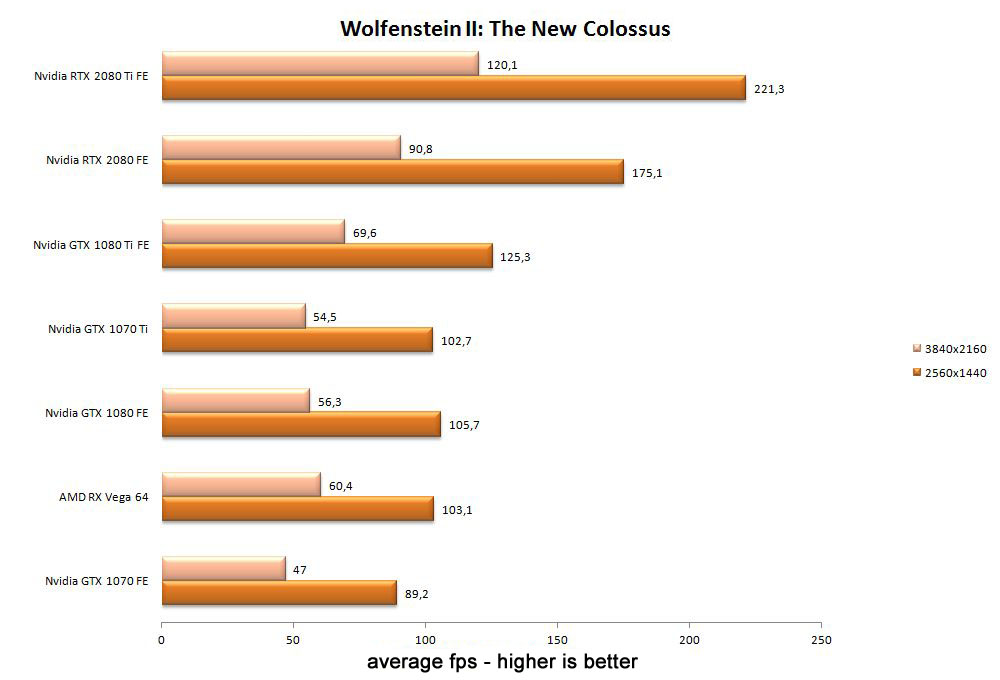

F1 2018, Wolfenstein II: The New Colossus

Codemasters’ F1 2018 is probably one of the best racing simulations around, at least if you are into Formula 1, and while it still sticks to DirectX 11, it puts decent pressure on the hardware.

The RTX 2080 Ti hit over 100fps at 2160p and over 170fps at 1440p resolution, while the RTX 2080 was good for just over 80fps at 2160p and over 130fps at 1440p.

Wolfenstein II: The New Colossus is mostly on our list because it supports the Vulkan API, and it usually runs quite well on AMD Radeon graphics cards, but the RTX series brings a whole new level of performance, leaving everything in the dust.

The RTX 2080 Ti hits well over 120fps at 2160p resolution and over 220fps at 1440p, which is an incredible result, especially considering that the GTX 1080 Ti was hitting just under 70fps at 2160p and a bit over 120fps at 1440p.

The RTX 2080 also does well, pushing over 90fps at 2160p and over 170fps at 1440p.

Power consumption, temperatures and overclocking

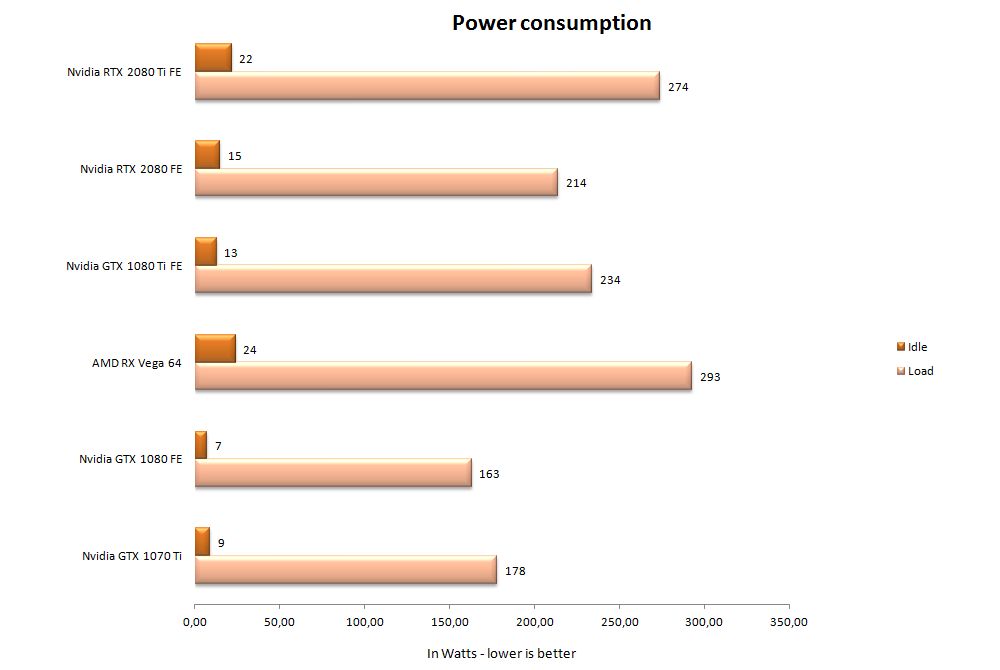

Even before we got to test the power consumption of the RTX-series, we were notified that there could be some issues with idle power consumption so we weren’t surprised when we hit those, but thankfully, Nvidia is promising a quick fix via driver update soon.

As you can see from the results, the RTX 2080 Ti pulls around 20W at idle, while the RTX 2080 is somewhat better, but not ideal, at just above 14W. In normal gaming, you can see a power draw of anywhere between 270W and 290W for the RTX 2080 Ti, and between 210W and 230W for the RTX 2080.

This is a bit more than the GTX 1080/1080 Ti but when you consider the performance per Watt, both the RTX 2080 Ti and the RTX 2080 are doing quite well, better than both the competition and the previous high-end GTX 10-series.

We did not get to check out temperatures in detail, but a quick look showed that the RTX 2080 Ti sits at around 37°C at idle and around 82°C under normal load in a closed case. The RTX 2080 idles at pretty much the same 36°C but has a lower load temperature of around 73°C.

This did not come as a big surprise, considering the new dual axial-fan cooling solution with a big vapor chamber. While we did not have time to take the RTX series apart, plenty of sites did, showing a well-built cooler on the Founders Edition RTX 2080/2080 Ti.

We also did not do a lot of overclocking due to time constraints, so we will look at it more closely in the future, especially the new one-click overclocking that Nvidia was so proud of when the RTX series was announced.

We will update the article as we get more detailed results but so far, automatic overclocking works well.

Conclusion

Now we get to the hard part, as the RTX-series and the Turing architecture can be seen from a few different angles, but all leading to the same bottom line.

With ray tracing, or hybrid rendering as Nvidia calls it, the Turing architecture is pushing game developers in the right direction, promising big advancements in graphics, in both visuals and performance.

Ray Tracing and Deep Learning aside, the RTX-series pushes the performance bar to a whole new level, with the RTX 2080 Ti easily outperforming the Titan V.

Both the RTX 2080 Ti and the RTX 2080 can be considered as true single-GPU 4K/UHD (2160p) gaming graphics cards. Of course, the RTX 2080 Ti will probably be closer to that title than the RTX 2080, but the RTX 2080 holds its ground well, especially considering the difference in price. The RTX 2080 has enough power to outperform the GTX 1080 Ti, and we do not even want

Of course, even the RTX 2080 Ti won’t get you 60fps+ at the highest possible settings in every game, as some titles are less optimized or simply too demanding, but it is certainly the first card to come close to running some games at over 60fps at 2160p with such graphics settings.

For those looking for higher frame rates on high refresh rate monitors, the RTX 2080 Ti is the first card that will deliver them easily, with plenty of eye-candy enabled, even at 4K/UHD.

As we have said multiple times, there is always an ugly side to every story, and the new Nvidia RTX series is no exception.

The biggest one is the price, and while Nvidia is partly to blame, it is rather understandable, as the company currently competes only with its own products. The lack of competition has led to this and, unfortunately, we doubt it will get better anytime soon. At $999 for the reference and $1199 for the Founders Edition, the RTX 2080 Ti is significantly more expensive than the GTX 1080 Ti, and even the RTX 2080, which comes with an MSRP of $699 for the reference version and $799 for the Founders Edition, is not on the cheap side.

The new features introduced with the Turing architecture, like RTX Ray Tracing and DLSS, take time to implement, and while they sound great, for now we are limited to a few demos. Don’t get us wrong, this is only a current drawback, as plenty of developers are eagerly hopping on both the RTX Ray Tracing and the DLSS trains, so the RTX series should only get better, both visually and performance-wise.

Nvidia also did a great job with its Founders Edition series, and while it did put some pressure on Nvidia AIC partners, the Founders Edition has never been better, even with a significant price premium compared to the reference version. The temperature is incredibly low, the build quality is exceptional, and it is very quiet, at least as far as we could tell without detailed testing.

Those looking to buy a premium product do not mind paying a higher price, and while we would have liked it to be lower, that is just how the market works. Apple has been doing it for years, and at least Nvidia offers a decent set of features as well as the highest performance money can buy.

Hopefully, the pricing will come down a bit and in the meantime, Nvidia is still the performance king and it is pushing the gaming developers in the right direction, so the future is definitely bright on that side of things. If you are looking for the best money can buy, the RTX series is definitely the way to go.