The most advanced consumer GPU gets over a 40 percent price cut

Nvidia is one of those companies in Silicon Valley that always has one more trick up its sleeve prior to announcing a new roadmap for its consumer and enterprise product lines. In the case of Geforce Pascal, it has now managed to introduce a new flagship that not only surpasses the previous flagship’s performance in every category except for compute, but also lowers the price by just over 40 percent.

During the company’s Editors Day event in San Francisco in late February, press and industry representatives were given an inside look into some further architectural improvements and performance tweaks that the company was able to bring to its Geforce GTX 1080, which was previously priced between $599 and $699 depending on the model. Up until this week, that card sat right beneath the $1,200 Titan X Pascal, but it has now been preempted by a new contender in town called the Geforce GTX 1080 Ti. As a result of the new flagship product launch, along with incoming competition from AMD in the form of a new 14-nanometer Vega RX series lineup, Nvidia has now decided that the best approach for attracting professional gamers is not one where the higher-priced product has more performance “weight” than cheaper alternatives, but rather one that adopts supply-side economics, that is, lowering barriers to the purchase of more premium hardware options. It has cut the price of the GTX 1080 to $499 in an effort to prepare more PCs for content-rich, VR-ready experiences with framerates more closely resembling the panel refresh rates of modern 4K and 5K displays.

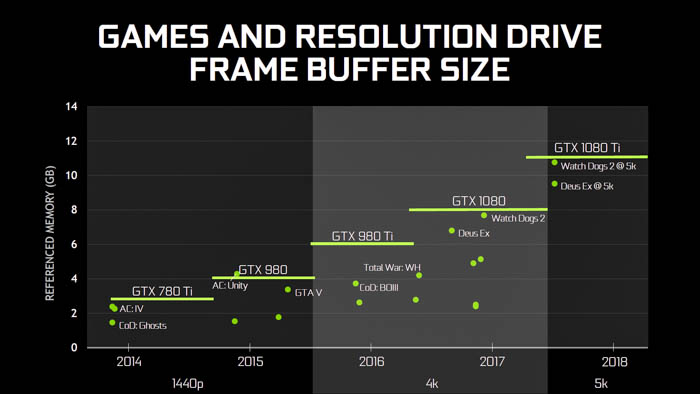

As many investors in the industry note, the biggest driver of gross profit margins is usually the competitiveness of products in the marketplace. According to analyst Handel Jones, the cost of producing Nvidia’s 16-nanometer wafers in 2016 was roughly 19 percent lower than the cost of producing 28-nanometer wafers two years prior. This is likely one reason the company chose to discontinue its Geforce GTX 980 Ti in favor of the GTX 1080 last June. While the Titan X led the GTX 1080 in most benchmarks by a margin of at least 15 percent, there was at least a $500 price gap between the two cards that was not easily justified for users who did not need framebuffers above 8GB for their display setups. This gap was also larger than the $350 price gap between the previous GTX 980 Ti and first-gen GTX Titan X in 2014 and 2015. But sure enough, over the course of the past twelve months the gaming industry saw the release of at least two major titles that required framebuffers above this threshold: Watch Dogs 2 and the latest Deus Ex installment. Until now, playing these and similar games at 2160p and higher resolutions has only been possible for those brave enough to invest in one or two Titan X Pascal cards.

Geforce GTX 1080 Ti

With the announcement of AMD’s new Vega series on the horizon for Q1 2017, it was only a matter of time before Nvidia was willing to close the price gap between its two topmost cards and bring enthusiast-grade 4K/5K performance to the masses. So in late February, it announced the Geforce GTX 1080 Ti at $699, while simultaneously slashing the price of the current GTX 1080 to $499. In addition, it decided to reintroduce the GTX 1080 with an improved 11Gbps GDDR5X memory speed, and the GTX 1060 with an improved 9Gbps GDDR5 memory speed. These models will be available within the next couple of weeks.
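For readers who want to check the headline figure, here is a minimal sketch of the price arithmetic using only the prices quoted in this review. The function name and variable names are ours, chosen for illustration.

```python
def percent_cut(old_price: float, new_price: float) -> float:
    """Return the price reduction as a percentage of the old price."""
    return (old_price - new_price) / old_price * 100.0


# Prices in USD as quoted in this review.
TITAN_X_PASCAL = 1200   # previous flagship
GTX_1080_TI = 699       # new flagship
GTX_1080_OLD = 599      # low end of the previous GTX 1080 price range
GTX_1080_NEW = 499      # GTX 1080 after the price cut

flagship_cut = percent_cut(TITAN_X_PASCAL, GTX_1080_TI)
gtx_1080_cut = percent_cut(GTX_1080_OLD, GTX_1080_NEW)

print(f"Flagship tier price cut: {flagship_cut:.2f}%")
print(f"GTX 1080 price cut:      {gtx_1080_cut:.2f}%")
```

The flagship comparison works out to a cut of a little over 40 percent, which is where the headline number comes from.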

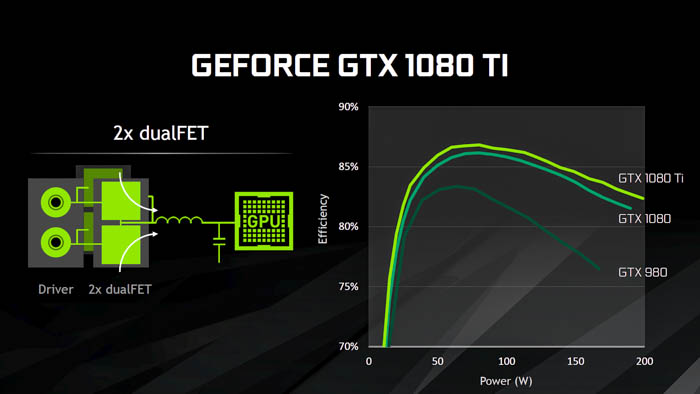

Since the introduction of Pascal back in April 2016, Nvidia has put improvements to power design and efficiency at the forefront of this generation’s product releases. The architecture has brought many performance optimizations, including an improved delta color compression engine that not only improves efficiency but also increases effective memory bandwidth. And with the GTX 1080 Ti, the company was able to add even more efficiency using a dualFET power design for even lower noise distortion. The result is a card that is not only 35 percent faster than the GTX 1080, but over 47 percent faster than the previous generation GTX 980 Ti.

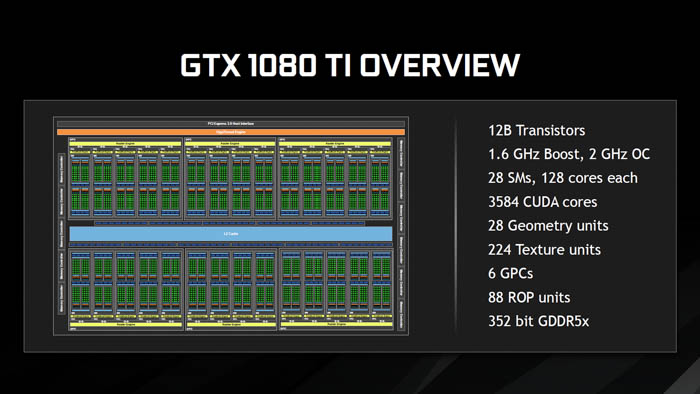

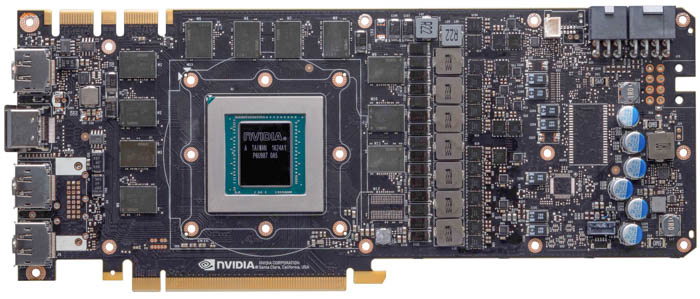

GP102-350

The Geforce GTX 1080 Ti ships with a GP102-350 under the hood, rather than the GP102-400 on the Titan X, but features the same number of cores (3,584) and the same number of texture units (224). However, the number of ROPs is decreased from 96 to 88. The GPU contains 28 streaming multiprocessors (SMs), and each SM contains four double-precision (DP) units. To account for the decrease in ROPs, the core clock has been increased to 1480MHz versus 1417MHz on the Titan X, yielding a texture fill rate of 354.4 gigatexels per second, or about a 62 percent increase over the previous flagship card and 85 percent higher than the GTX 980.
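As a rough sketch of where a texture fill rate comes from, the usual approximation is texture units multiplied by clock speed. The helper names below are ours, and the observation that the quoted 354.4 figure implies a clock above the 1480MHz base is our own inference from the arithmetic, not something Nvidia stated in the briefing.

```python
def texture_fill_rate_gtexels(texture_units: int, clock_mhz: float) -> float:
    """Approximate texture fill rate in gigatexels per second."""
    return texture_units * clock_mhz / 1000.0


def implied_clock_mhz(fill_rate_gtexels: float, texture_units: int) -> float:
    """Clock speed (MHz) that a quoted fill rate would require."""
    return fill_rate_gtexels * 1000.0 / texture_units


TEXTURE_UNITS = 224       # from the text
BASE_CLOCK_MHZ = 1480     # from the text
QUOTED_FILL_RATE = 354.4  # gigatexels per second, from the text

rate_at_base = texture_fill_rate_gtexels(TEXTURE_UNITS, BASE_CLOCK_MHZ)
clock_for_quoted = implied_clock_mhz(QUOTED_FILL_RATE, TEXTURE_UNITS)

print(f"Fill rate at base clock: {rate_at_base:.2f} GT/s")
print(f"Clock implied by {QUOTED_FILL_RATE} GT/s: {clock_for_quoted:.0f} MHz")
```

At the base clock the result lands near 332 GT/s, so the higher quoted figure most likely reflects the card running at its boost clock.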

Improved memory architecture

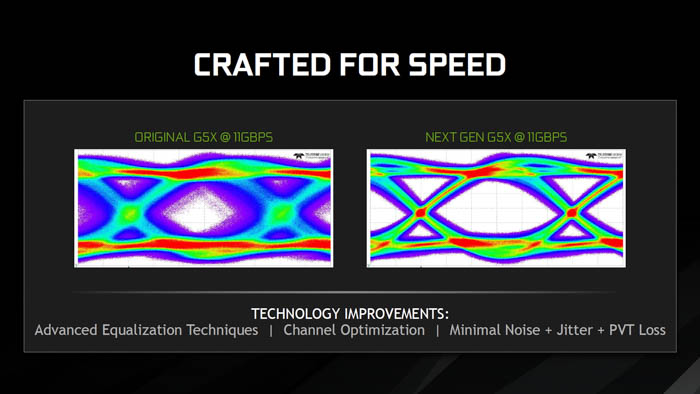

During the company’s press briefing, senior GPU architect Jonah Alben went on stage to discuss the GTX 1080 Ti’s improved memory architecture and how it now uses next-generation GDDR5X modules from Micron running at 11Gbps. This has been achieved using a technique called “advanced equalization” that allows for a “much cleaner signal” with minimal noise, less jitter and less power, temperature and voltage (PVT) loss.

The above image shows two sampled data eye diagrams of the memory interface, where the left image shows the original GDDR5X running at 11Gbps and the right shows the redesigned GDDR5X modules with a much cleaner “data eye.” At this data rate, the original memory design was not able to separate data values reliably in the center of the eye, which is where the values are sampled. With the cleaner signal, the company was able to decrease the bus width from 384 bits on the Titan X Pascal to 352 bits while raising the data rate from 10Gbps to 11Gbps, increasing peak memory bandwidth from 480GB/s to 484GB/s.
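The bandwidth figures above follow from a simple relationship: bus width in bytes multiplied by the per-pin data rate. A minimal sketch (the function name is ours):

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory interface in bits.
    data_rate_gbps: effective data rate per pin in gigabits per second.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_gbps


titan_x_pascal = peak_bandwidth_gbs(384, 10.0)  # 384-bit bus at 10Gbps
gtx_1080_ti = peak_bandwidth_gbs(352, 11.0)     # 352-bit bus at 11Gbps

print(f"Titan X Pascal: {titan_x_pascal:.0f} GB/s")
print(f"GTX 1080 Ti:    {gtx_1080_ti:.0f} GB/s")
```

This shows why the narrower bus does not cost the GTX 1080 Ti any bandwidth: the 10 percent higher data rate slightly more than offsets the loss of 32 bits of bus width.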

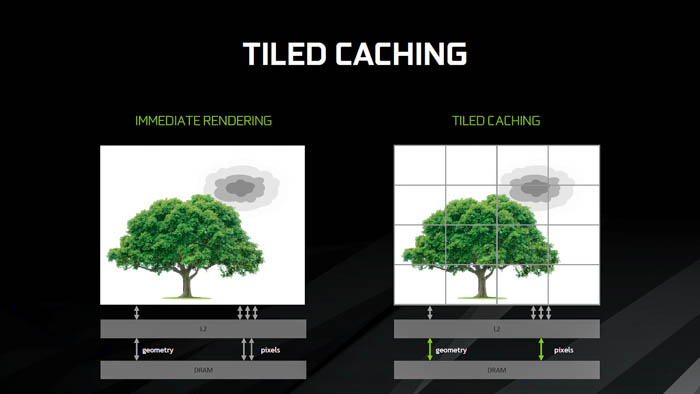

Alben explained that most modern GPUs use a technique called “immediate rendering”: triangles come into the scene, are transformed, and are immediately pushed to the back end of the chip. In terms of memory bandwidth, geometry is immediately read through the L2 cache and then processed in DRAM. Alternatively, there is a technique called “tiled rendering” that divides the triangles into large individual tiles and substitutes a “tile buffer” for the L2 cache, sending several squares at a time in parallel directly to DRAM. The drawback of this approach for high-performance gaming is that geometry is read, sent back to memory, and then read again. With the goal of reducing bandwidth as much as possible, the tiling approach cannot stand up to the pixel-dense bandwidth requirements of modern content.

In the previous Maxwell generation, the company’s engineers designed a technique called “tiled caching” to improve cache locality and reduce memory traffic. This approach still renders a single portion of the screen at a time, but now it sends triangles through an on-chip geometry queue called a “binner.” Once this queue fills up, the contents are sent out to the Rasterizer in a tiled way. “In the case where tiling is a bad idea, we don’t use it. When tiling is a good idea, we use it.”

Nvidia says that tiled caching can be described as an enhanced “intermediate architecture” that doesn’t pay the latency tax of having to go through memory multiple times in a single rendering process. The technique uses L2 cache coherency, which is another way of saying that the contents of multiple caches remain together and consistent. On the front-end, there is no difference in the geometry processing. All of the reductions in pixel bandwidth are now done on the back-end, and this is where pixels will stay until the rendering is complete.

In terms of raw memory performance with a redesigned GDDR5X controller, the GTX 1080 Ti is able to surpass HBM2’s peak bandwidth-per-package rate of 256GB/s by about 56 percent, at 400GB/s. Using compression techniques alone, Nvidia is able to increase the effective memory bandwidth from 400GB/s to 900GB/s, or about 2.25 times. By combining compression with tiled caching, it can now bring that 900GB/s number all the way up to 1200GB/s, or roughly another third.
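The relationships between these bandwidth figures can be checked with a short sketch. The four GB/s values are taken from the paragraph above; the variable names are ours.

```python
def ratio(new: float, old: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


def percent_increase(new: float, old: float) -> float:
    """Increase of `new` over `old`, as a percentage of `old`."""
    return (new - old) / old * 100.0


# Bandwidth figures in GB/s as quoted in the text.
HBM2_PER_PACKAGE = 256
RAW_GDDR5X = 400
WITH_COMPRESSION = 900
WITH_TILED_CACHING = 1200

over_hbm2 = percent_increase(RAW_GDDR5X, HBM2_PER_PACKAGE)
compression_gain = ratio(WITH_COMPRESSION, RAW_GDDR5X)
tiled_caching_gain = percent_increase(WITH_TILED_CACHING, WITH_COMPRESSION)

print(f"Raw GDDR5X over HBM2 package: +{over_hbm2:.2f}%")
print(f"Compression multiplier:       {compression_gain:.2f}x")
print(f"Tiled caching on top:         +{tiled_caching_gain:.1f}%")
```

Note that the compressed and tiled-caching figures are effective bandwidth numbers, that is, how much uncompressed traffic the memory system behaves as if it were moving, rather than physical transfer rates.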

Framebuffer requirements now being met for most premium displays

In 2014, the average size of the VRAM framebuffer at the introduction of some of the first 4K-ready graphics cards was between 3.5GB and 4GB. Most 1080p titles only required 2GB at most, while titles such as GTA V running at 1440p required around 3.5GB. As ultra-wide 21:9 monitors came into mainstream retail channels, this requirement soon increased to 5.27GB, while 4K and 5K have pushed the numbers up to 7.91GB and 14.06GB, respectively.

Two years ago, the Maxwell-based Titan X became the first consumer card to feature a 12GB framebuffer, meeting the requirements for most displays in UHD resolution and above. AMD soon realized the importance of meeting these framebuffer requirements and introduced the Radeon R9 390 and 390X the following summer with 8GB of GDDR5 memory each. Nvidia’s lineup was refreshed by the Pascal-based Titan X with 12GB of GDDR5X, along with the GTX 1070 and GTX 1080 with 8GB of GDDR5 and GDDR5X, respectively, while AMD followed up with the Polaris-based Radeon RX 470 and RX 480 featuring up to 8GB of GDDR5 each.

Now in Q1 2017, the company has decided to take the large GDDR5X framebuffer previously only available in its $999 to $1200 flagship Titan X series, trim it slightly from 12GB to 11GB, and offer it to a larger customer base thanks to the lower $699 price point of the Geforce GTX 1080 Ti. Not only is there more capacity than on the GTX 1080, but the memory data rate has also been raised to 11Gbps at the lower price point, making this card a more affordable match for these new premium UHD displays.

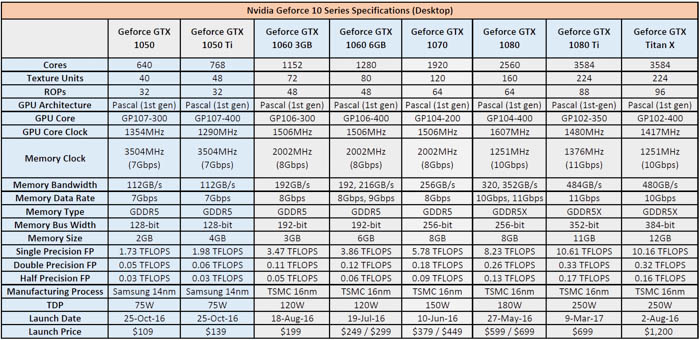

GTX 1080 Ti specifications

Here is a quick look at how the Geforce GTX 1080 Ti stacks up against Nvidia’s existing Pascal-based graphics card lineup. Where the Geforce GTX 1080 features 2,560 cores, 160 texture units and 64 ROPs, the GTX 1080 Ti increases these to the equivalent of the Titan X (3,584 cores and 224 texture units), with the exception of 88 ROPs instead of 96. The core clock has also been increased to 1,480MHz, the Boost clock is now 1,582MHz, and the memory clock is 1,376MHz (11Gbps effective).

Geforce 10 Series desktop specifications

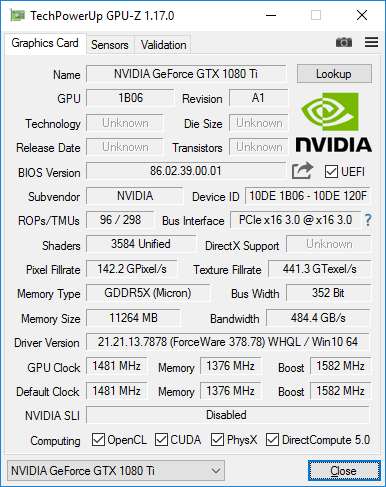

According to GPU-Z, the card features a pixel rate of 130.2GP/s, a texture rate of 332GT/s, and single-precision floating point performance just above the Titan X Pascal at 10.61 TFLOPS.
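These readouts can be approximated from the specifications listed above. The sketch below assumes the common convention of one pixel per ROP per clock and two floating-point operations per core per clock (one fused multiply-add); those conventions and the function names are our assumptions, not something GPU-Z documents here.

```python
def pixel_rate_gpixels(rops: int, clock_mhz: float) -> float:
    """Approximate pixel fill rate in gigapixels per second."""
    return rops * clock_mhz / 1000.0


def fp32_tflops(cores: int, clock_mhz: float, flops_per_clock: int = 2) -> float:
    """Approximate single-precision throughput in TFLOPS.

    flops_per_clock defaults to 2, assuming one fused multiply-add
    per core per clock cycle.
    """
    return cores * clock_mhz * 1e6 * flops_per_clock / 1e12


ROPS = 88
CORES = 3584
BASE_CLOCK_MHZ = 1480

pixel_rate = pixel_rate_gpixels(ROPS, BASE_CLOCK_MHZ)
single_precision = fp32_tflops(CORES, BASE_CLOCK_MHZ)

print(f"Pixel rate: {pixel_rate:.2f} GP/s")
print(f"FP32:       {single_precision:.2f} TFLOPS")
```

Both results line up with the GPU-Z values at the base clock, which suggests the utility reports base-clock rather than boost-clock throughput.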

The card itself measures 10.5 inches long by 4.38 inches tall and comes in a dual-slot configuration similar to the GTX 1070 and GTX 1080. The card draws a maximum of 250 watts of power over a 6-pin PCI-E and an 8-pin PCI-E power connector and connects to a PCI-E 3.0 slot on a motherboard with room for a dual-slot configuration.

Packaging and hardware overview

The GTX 1080 Ti Founders Edition box features the same rectangular style used for all of Nvidia’s performance cards, including the GTX 1060, 1070 and 1080. Upon opening the package, the card is neatly fitted inside a foam cutout that props it up to display its side profile.

Behind the GPU is a smaller rectangular package featuring a heavy-duty Nvidia Simula DisplayPort 1.2 to Single-Link DVI Adapter (PN: 030-0851-000).

Behind the rectangular package is an envelope labeled "Welcome to GeForce GTX Gaming," which features the GeForce GTX 1080 Ti Support Guide, the Quick Start Guide, and a Special-Edition "GeForce GTX" case badge that the company has advised can only be applied once - so pick a good spot on the external part of your case for best results.

The Quick Start guide essentially advises users of minimum system requirements - 8GB of system memory (16GB recommended), a motherboard with at least one dual-width PCI-E 3.0 x16 slot available, and a 600W or higher power supply with one 8-pin and one 6-pin connector. More importantly, the Support Guide encourages users to register their card online through the company's website to gain priority access to Customer Care in the event that warranty service needs to be accessed.

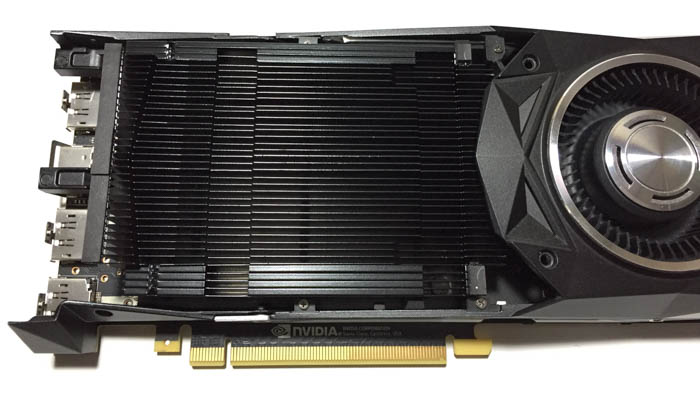

The card itself looks nearly identical to the GTX 1070 and GTX 1080 Founders Edition, with the exception of one small detail in display connectivity ports (more below).

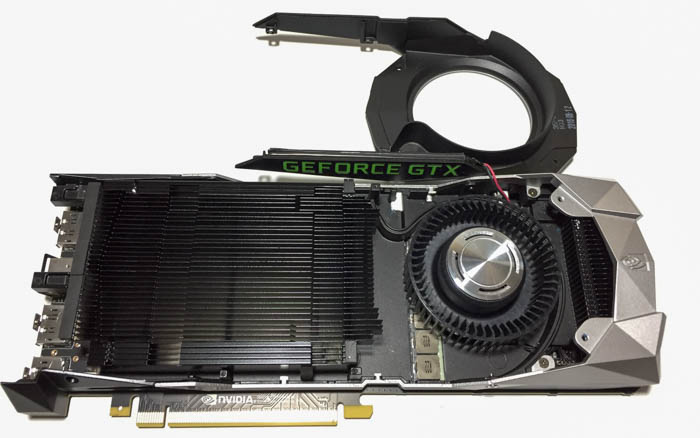

Under the hood of the Founders Edition shroud is a sight very similar to what we discovered with the GTX 1070 Founders Edition last year, including a metal shroud design with vapor chamber cooling, a VRM blower fan, and a large alloy heatsink. Removing the shroud is a two-step process that requires unscrewing the polygon-shaped aluminum housing and then carefully lifting out a plastic cover plate from the center. Beneath the heatpipe is the GP102-350 GPU, along with a total of eleven Micron GDDR5X memory modules placed around it in a circular manner. Of course, the back of the card also includes a backplate for additional heat dissipation, something Nvidia has been doing since its Founders Edition debut last summer.

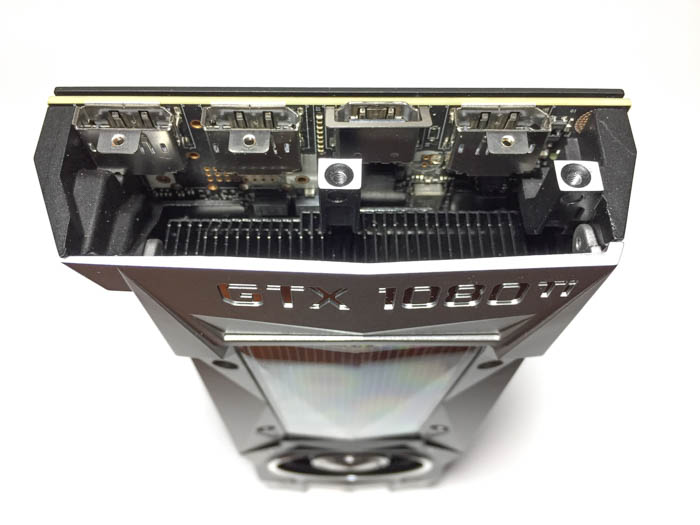

Away with the DVI-D port, only DP and HDMI now

As for connectivity ports, Nvidia has finally done away with the DVI-D connection that has been prevalent in computer graphics cards and displays since 1999, replacing it with a total of three DisplayPort 1.3 (1.4 Ready) ports and a single HDMI 2.0 port. This is essentially the same configuration as the GTX 1060, 1070, 1080 and Titan X Pascal, minus the DVI-D port. It is for this reason that the company now recommends using the Simula DP 1.2 to DVI adapter included in the box.

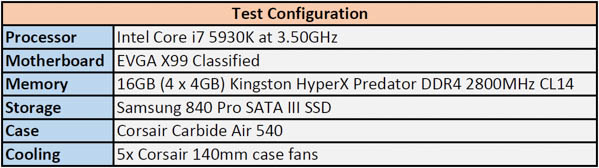

Test setup

For this particular review, we are testing the Geforce GTX 1080 Ti on a system running an Intel Core i7 5930K at 3.70GHz, an EVGA X99 Classified motherboard, 16GB of Kingston HyperX Predator DDR4 2800MHz memory, an EVGA SuperNOVA 750 G2 PSU, and a Corsair Carbide Air 540 gaming case. The system was operating in a room temperature environment with the side panels off. The CPU was cooled using a Thermalright TRUE copper heatsink with two Noctua fans, while the case was cooled with five Corsair case fans.

All benchmarks were completed using Geforce 378.78 drivers on Windows 10 Pro x64 version 1607 with an LG 27UD68 IPS 3840x2160p monitor. Aside from the GTX 1080 Ti Founders Edition that Nvidia sent for review, we have included three other cards in the mix for comparison: an EVGA Geforce GTX 1080 FTW Hybrid Gaming, a Geforce GTX 1070 Founders Edition, and an EVGA Geforce GTX 970 SC ACX 2.0.

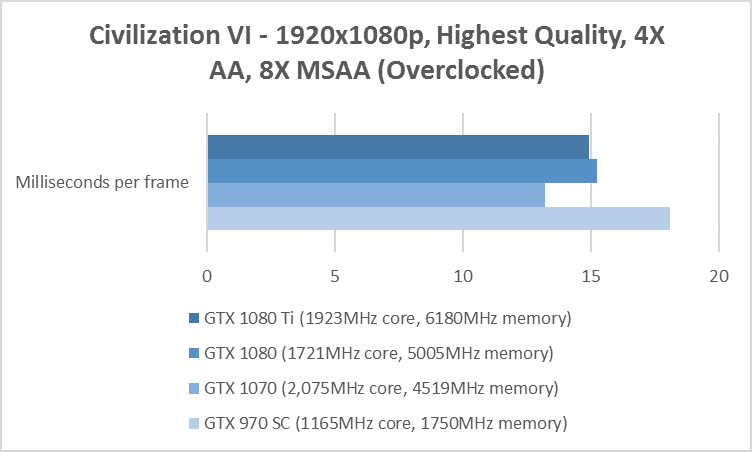

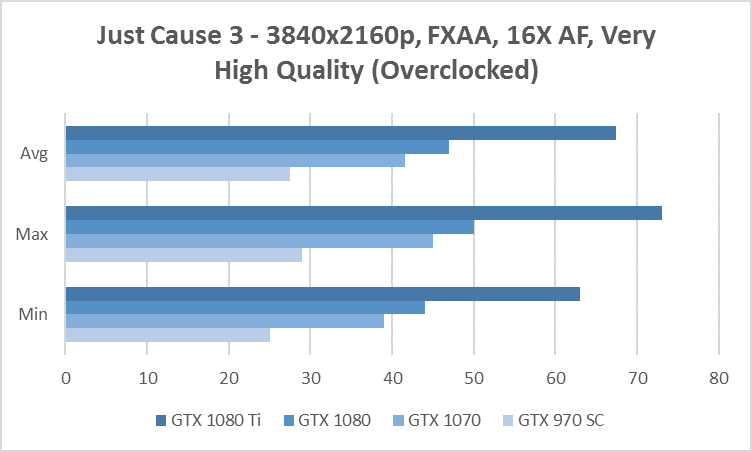

Two passes – base clock vs overclocked specs

In the first run, all cards in the Geforce 10 series were clocked down to default specifications, while the Geforce 900 series card (GTX 970 SC) remained at a slight 11 percent overclock. In the second run, all cards were overclocked: the GTX 970 SC at 11 percent, the GTX 1070 at 38 percent (2075MHz core, 4519MHz memory), the GTX 1080 FTW Hybrid at 7 percent (1721MHz core, 1860MHz memory), and the GTX 1080 Ti at 30 percent (1923MHz core, 6180MHz memory).
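The overclock percentages are expressed relative to each card's base core clock. As a minimal sketch, here is the calculation for the GTX 1080 Ti, the only card whose base clock (1480MHz) is stated in this review; the function name is ours.

```python
def overclock_percent(base_mhz: float, overclocked_mhz: float) -> float:
    """Core clock increase as a percentage of the base clock."""
    return (overclocked_mhz - base_mhz) / base_mhz * 100.0


GTX_1080_TI_BASE_MHZ = 1480  # base core clock from the specifications
GTX_1080_TI_OC_MHZ = 1923    # overclocked core clock from the second run

ti_overclock = overclock_percent(GTX_1080_TI_BASE_MHZ, GTX_1080_TI_OC_MHZ)
print(f"GTX 1080 Ti overclock: {ti_overclock:.1f}%")
```

The same function applies to the other cards if their reference base clocks are substituted in.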

For our software benchmarking tests, we used the Nvidia Vulkan API demo called ThreadedRenderingVk that simulates an aquarium full of schools of fish, along with Cinebench R15.038 (OpenGL), Unigine Heaven Benchmark (DX11), and Futuremark’s 3DMark with Time Spy (DX12).

For our gaming benchmark tests, we tried to pick a decent selection of DirectX 12 titles to fully make use of the asynchronous compute capabilities of these cards. These include Civilization VI, Battlefield 1 and Just Cause 3 (certain features operating under DX 11.3). For comparison, our two reference titles include Far Cry 4 (DirectX 11) and No Man’s Sky (OpenGL).

Nvidia Vulkan API demo – Fishsploshion (ThreadedRenderingVk)

Last June, Lars Bishop from the Nvidia GameWorks developer community released a threaded rendering sample using the Vulkan 1.0 API that demonstrates how to render enormous amounts of animation data in multiple threads. In this case, the ThreadedRenderingVk demo consists of an aquarium filled with fish that can be sorted by number of schools and roam distance, allowing a user to render a high number of moving objects with minimal draw calls. A number of buffers handle fish location and direction, making this a fantastic display that really ought to be included as a Windows desktop screensaver sometime soon.

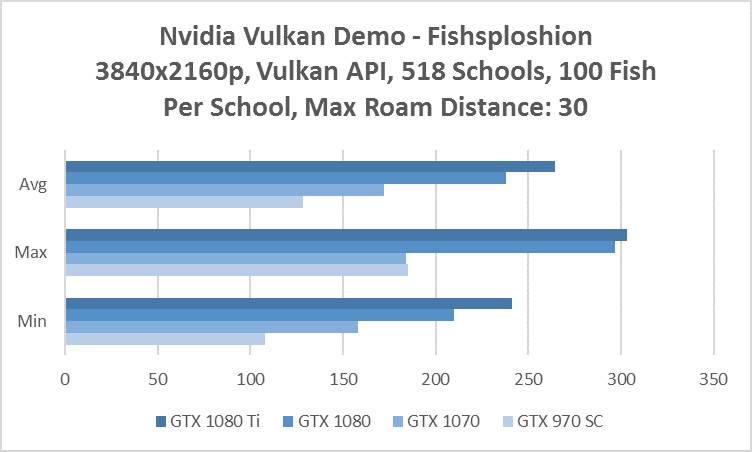

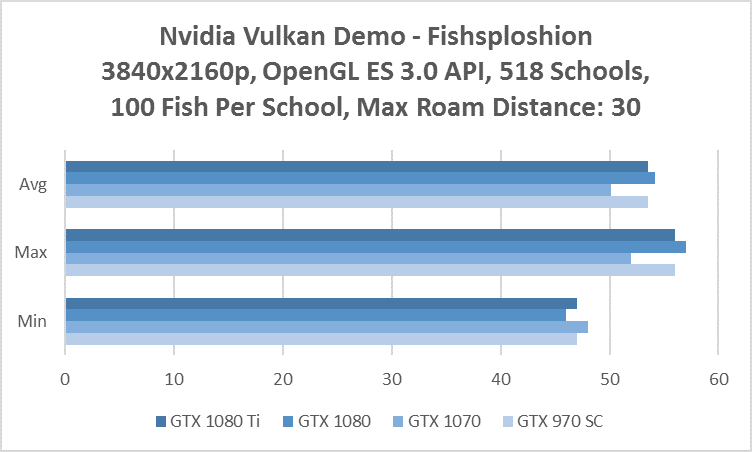

The demo allows operation in both Vulkan mode and OpenGL ES 3.0 mode, making it a great performance comparison between the newer Vulkan API and its predecessor. For our purposes, we ran this test in a maximized window at 3840x2160p (4K) resolution, using a sizable 518 schools of fish with 100 fish per school, and a maximum roam distance of 30.

Based on our results, the Vulkan API was able to score surprisingly high with an average of 264.2 frames per second on the Geforce GTX 1080 Ti, followed by 237.85 on the GTX 1080, 172.3 on the GTX 1070, and 128.4 on the GTX 970 SC.

Meanwhile, the OpenGL ES 3.0 API has begun to show its age in this multi-threaded demo, with an average of 53.6 frames per second on the GTX 1080 Ti, followed by 54.2 on the GTX 1080, 50.1 on the GTX 1070, and 50.6 on the GTX 970 SC.
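To put the gap between the two APIs in perspective, the following sketch computes the Vulkan-over-OpenGL ES speedup for each card from the averages reported above. The dictionary layout and names are ours.

```python
# Average frames per second at 3840x2160, as reported in this review.
vulkan_fps = {
    "GTX 1080 Ti": 264.2,
    "GTX 1080": 237.85,
    "GTX 1070": 172.3,
    "GTX 970 SC": 128.4,
}
gles_fps = {
    "GTX 1080 Ti": 53.6,
    "GTX 1080": 54.2,
    "GTX 1070": 50.1,
    "GTX 970 SC": 50.6,
}

# Speedup = Vulkan frame rate divided by OpenGL ES frame rate.
speedups = {card: vulkan_fps[card] / gles_fps[card] for card in vulkan_fps}

for card, factor in speedups.items():
    print(f"{card:12s} Vulkan is {factor:.2f}x faster than OpenGL ES 3.0")
```

The OpenGL ES results sit in a narrow band of roughly 50 to 54fps regardless of GPU, which suggests that path is limited by something other than the graphics card in this demo, while the Vulkan path scales with the hardware.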

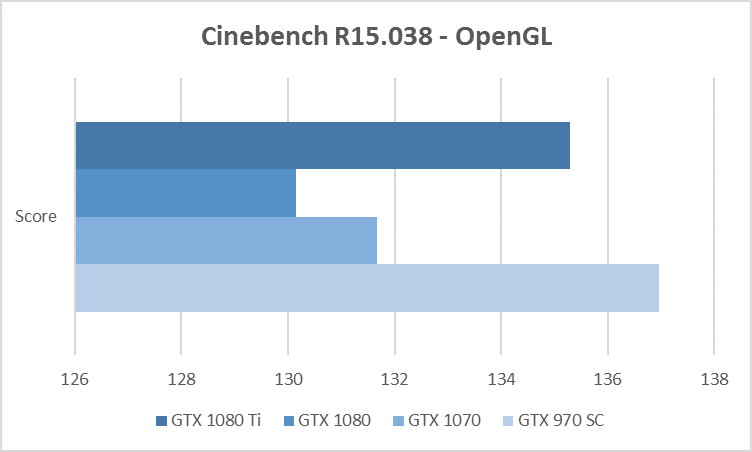

Cinebench R15.038 – OpenGL

Cinebench is a popular synthetic benchmark that measures CPU and GPU performance, with the GPU test using OpenGL. The program uses several algorithms to render a photorealistic 3D scene containing over 300,000 polygons and then displays the results based on a point system.

Based on our results, the GTX 970 SC scores 136.97 points, followed by the Geforce GTX 1080 Ti at 135.29 points, the GTX 1070 at 131.68 points, and the GTX 1080 at 130.15 points. For this test, it should be noted that there can be some variability with each run, and all four cards score consistently above the 130-point mark. This is more of a suggestion to Cinebench to include a more comprehensive benchmark that makes use of more robust shading algorithms.

Civilization VI

Sid Meier’s turn-based strategy games have been around since 1991 and have set a great example for the “4X” game genre: “Explore, Expand, Exploit and Exterminate.” Just like its predecessors, the game centers around building a custom civilization that can scale from prehistory to near-future empires, encouraging the player to become a world power and achieve one of several victory outcomes through military, technological, or diplomatic leadership, among others. Civilization: Beyond Earth supported AMD’s Mantle, the predecessor to Vulkan and DirectX 12, while the latest Civilization VI update from November now brings support for DirectX 12. New capabilities include asynchronous compute, explicit multi-adapter (multi-GPU rendering using a split-frame technique), and of course new multiplayer map types including a 50-turn scenario called “Cavalry and Cannonades.”

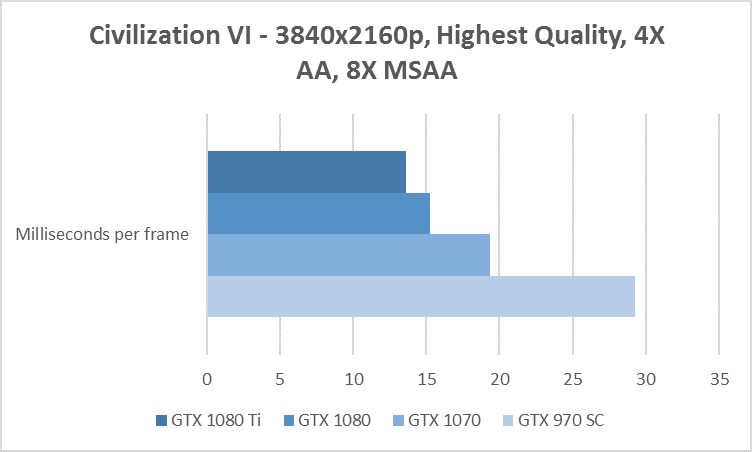

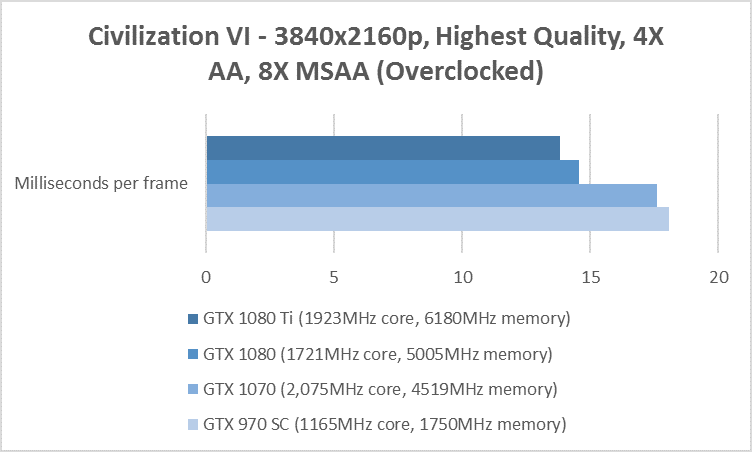

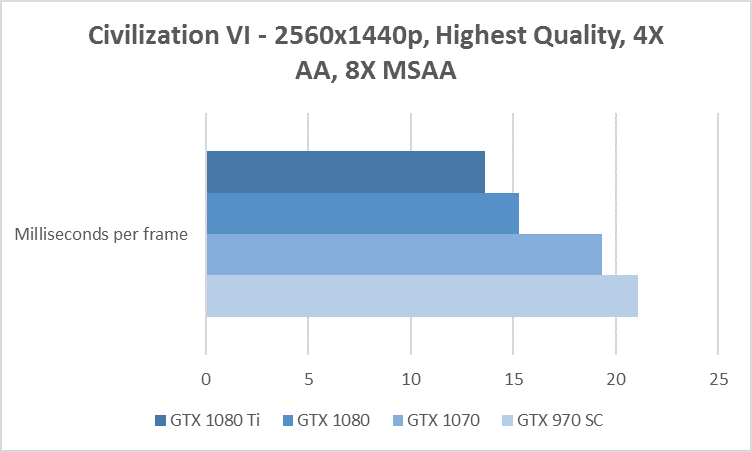

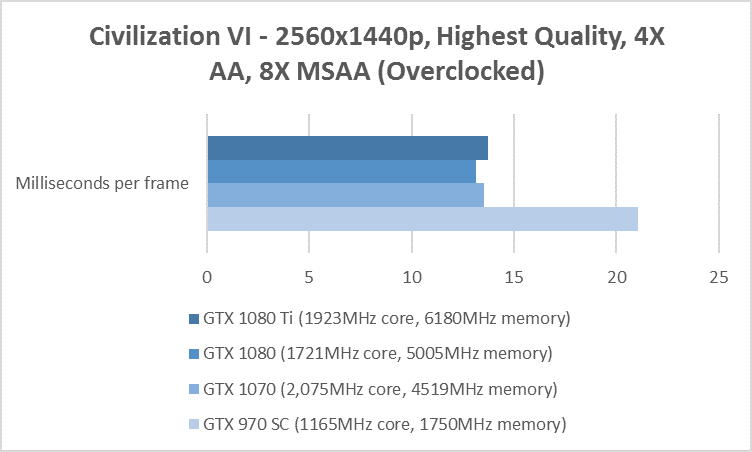

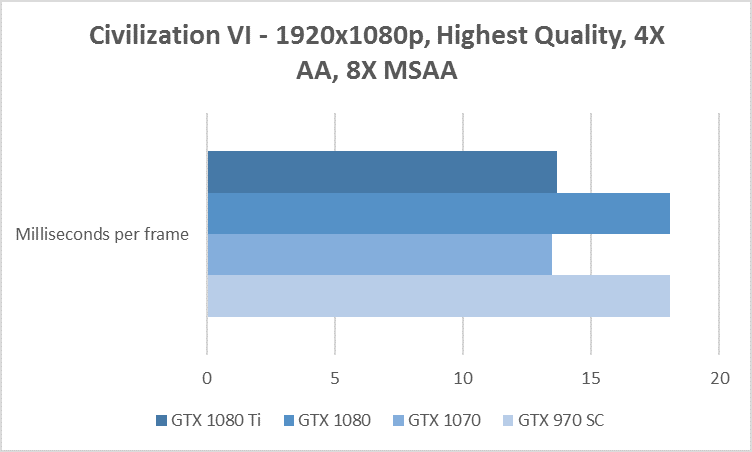

For this test, we are using the game’s built-in Benchmark utility, which outputs the results as milliseconds that pass between each frame. The lower number here is the better result.
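Since the other game benchmarks in this review report frames per second, it can help to convert frame times into an equivalent frame rate. A minimal sketch, using the 4K default-clock frame times from this review as sample inputs (the function name is ours):

```python
def frame_time_to_fps(frame_time_ms: float) -> float:
    """Convert an average frame time in milliseconds to frames per second."""
    if frame_time_ms <= 0:
        raise ValueError("frame time must be positive")
    return 1000.0 / frame_time_ms


# Civilization VI average frame times (ms) at 3840x2160, default clocks.
civ6_4k_default_ms = {
    "GTX 1080 Ti": 13.641,
    "GTX 1080": 15.284,
    "GTX 1070": 19.335,
    "GTX 970 SC": 29.265,
}

civ6_4k_default_fps = {
    card: frame_time_to_fps(ms) for card, ms in civ6_4k_default_ms.items()
}

for card, fps in civ6_4k_default_fps.items():
    print(f"{card:12s} {fps:6.1f} fps")
```

For reference, a 60fps target corresponds to a frame time of about 16.7ms, so any result below that figure clears it.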

Results – 3840x2160p

In the default clock results at 4K, the Geforce GTX 1080 Ti scores 13.641ms, followed by the GTX 1080 at 15.284ms, the GTX 1070 at 19.335ms, and the GTX 970 SC at 29.265ms.

In the overclocked results at 4K, the Geforce GTX 1080 Ti scores 13.82ms, followed by the GTX 1080 at 14.556ms, the GTX 1070 at 17.617ms, and the GTX 970 SC at 29.265ms.

Results – 2560x1440p

In the default clock results at 1440p, the Geforce GTX 1080 Ti scores 13.565ms, followed by the GTX 1080 at 13.155ms, the GTX 1070 at 13.617ms, and the GTX 970 SC at 21.07ms.

In the overclocked results at 1440p, the Geforce GTX 1080 Ti scores 13.743ms, followed by the GTX 1080 at 13.124ms, the GTX 1070 at 13.524ms, and the GTX 970 SC at 21.07ms.

Results – 1920x1080p

In the default clock results at 1080p, the Geforce GTX 1080 Ti scores 13.693ms, followed by the GTX 1080 at 13.198ms, the GTX 1070 at 13.484ms, and the GTX 970 SC at 18.077ms.

In the overclocked results at 1080p, the Geforce GTX 1080 Ti scores 14.922ms, followed by the GTX 1080 at 15.245ms, the GTX 1070 at 13.214ms, and the GTX 970 SC at 18.077ms. The results for the two fastest cards are a bit slower than at default clocks, signaling some slight variability with the game engine.

Just Cause 3

Back in 2006, Avalanche Studios developed an action-adventure game called Just Cause featuring a story of multiple factions fighting for control of a small nation. The player can take on a variety of side missions to liberate villages and take over leadership of drug cartels, and the complexity of this feature was much expanded in the second release, Just Cause 2. In the third installment, players now have a variety of new tools to use in the large open-world environment, including a wingsuit to glide between locations with ease and a more functional grappling hook, along with a wide range of weapons and vehicles including shotguns, RPGs, fighter aircraft, planes, ships, and exotic cars.

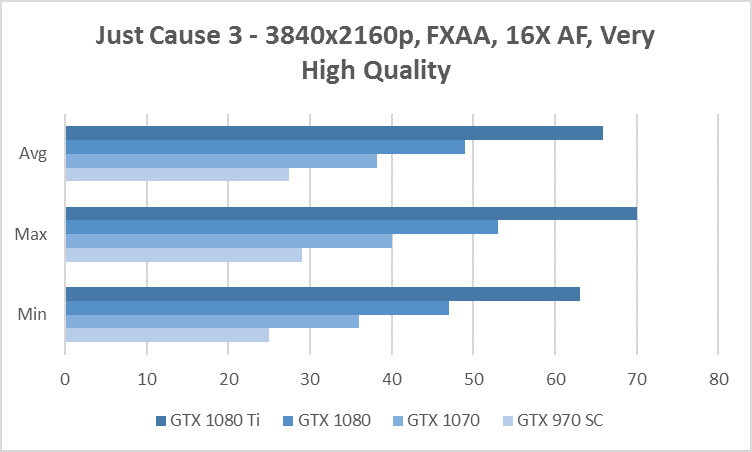

Results – 3840x2160p

In the default clock results at 4K, the Geforce GTX 1080 Ti gets an average of 67.4fps, followed by the GTX 1080 at 49fps, the GTX 1070 at 38.2fps, and the GTX 970 SC at 27.5fps.

In the overclocked results at 4K, the Geforce GTX 1080 Ti gets an average of 67.4fps, followed by the GTX 1080 at 43fps, the GTX 1070 at 41.6fps, and the GTX 970 SC at 27.5fps.

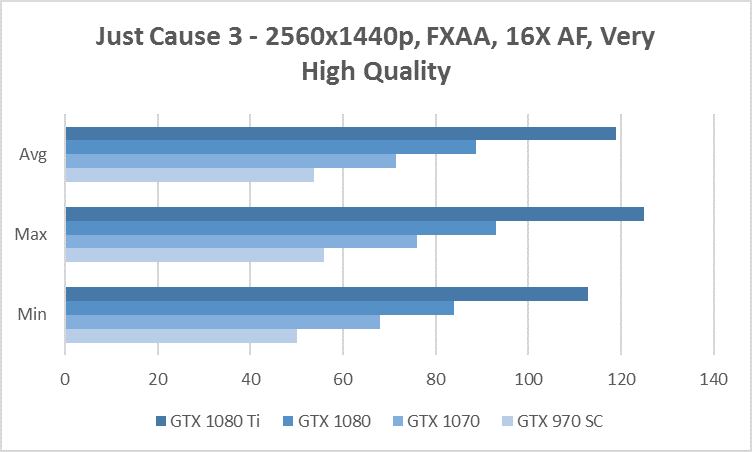

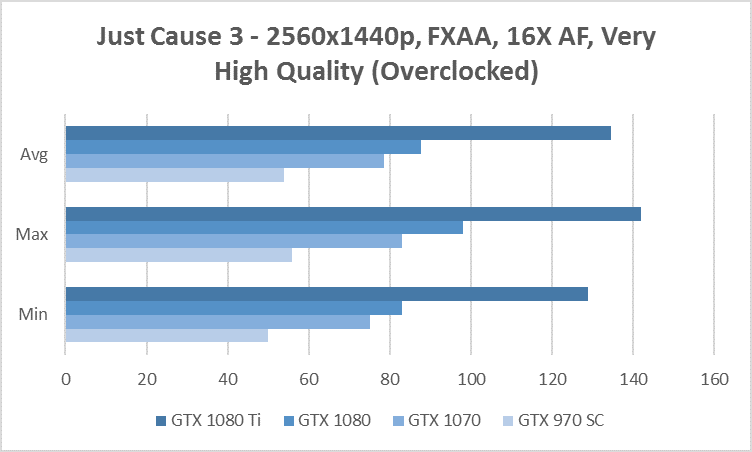

Results – 2560x1440p

In the default clock results at 1440p, the Geforce GTX 1080 Ti gets an average of 119fps, followed by the GTX 1080 at 88.2fps, the GTX 1070 at 71.6fps, and the GTX 970 SC at 53.8fps.

In the overclocked results at 1440p, the Geforce GTX 1080 Ti gets an average of 134.7fps, followed by the GTX 1080 at 87.6fps, the GTX 1070 at 78.6fps, and the GTX 970 SC at 53.8fps.

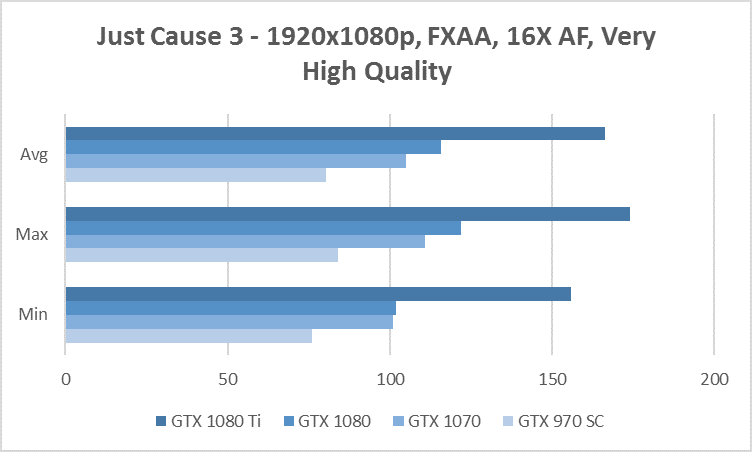

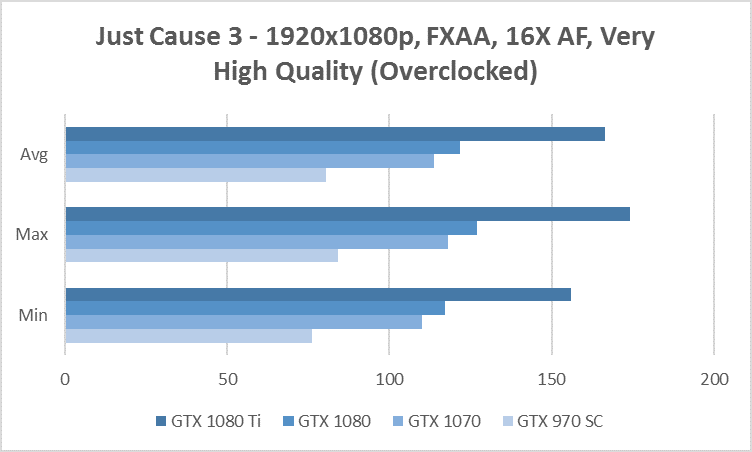

Results – 1920x1080p

In the default clock results at 1080p, the Geforce GTX 1080 Ti gets an average of 159.4fps, followed by the GTX 1080 at 116fps, the GTX 1070 at 105fps, and the GTX 970 SC at 80.4fps.

In the overclocked results at 1080p, the Geforce GTX 1080 Ti gets an average of 166.4fps, followed by the GTX 1080 at 121.7fps, the GTX 1070 at 113.8fps, and the GTX 970 SC at 80.4fps.

No Man’s Sky

When No Man’s Sky made its debut in August 2016, it received mixed reception from critics who both praised the technical elements of its procedurally-generated universe, and were concerned that some of its advertised features were not available in the final game. Some have considered it to have a similar impact on the game industry as Minecraft did several years back, though others noted that it was burdened with “expectations” from the start. Regardless of a critic’s opinion of the met and missed expectations of a highly explorational, universe-sized sandbox, the game consumes a sizable amount of system resources and is nevertheless a great reference point for benchmarking the latest graphics cards using the OpenGL API.

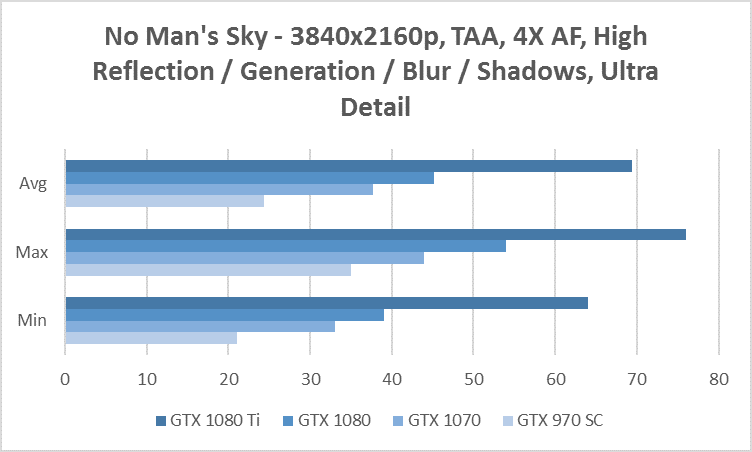

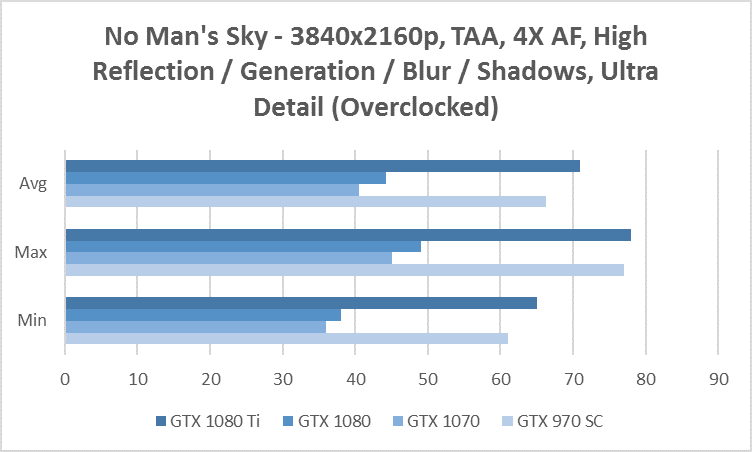

Results – 3840x2160p

In the default clock results at 4K, the Geforce GTX 1080 Ti gets an average of 69.4fps, followed by the GTX 1080 at 45.2fps, the GTX 1070 at 37.7fps, and the GTX 970 SC at 24.4fps.

In the overclocked results at 4K, the Geforce GTX 1080 Ti gets an average of 70.9fps, followed by the GTX 1080 at 44.3fps, the GTX 1070 at 40.5fps, and the GTX 970 SC at 24.4fps. Not much of a difference here between the two clockspeed sets.

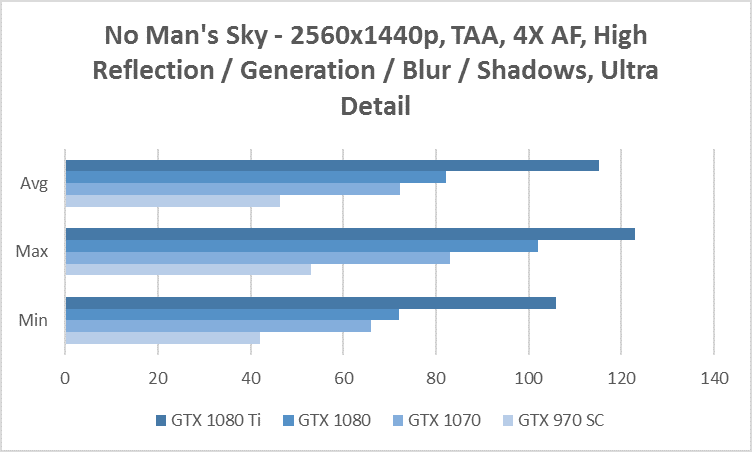

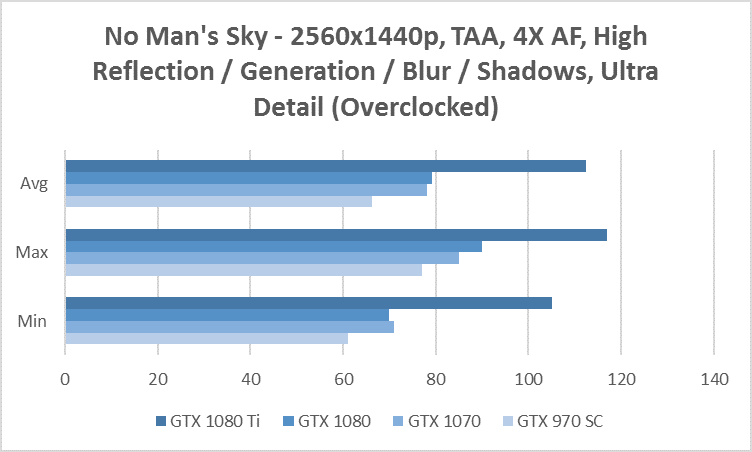

Results – 2560x1440p

In the default clock results at 1440p, the Geforce GTX 1080 Ti gets an average of 115.2fps, followed by the GTX 1080 at 82.1fps, the GTX 1070 at 72.2fps, and the GTX 970 SC at 46.3fps.

In the overclocked results at 1440p, the Geforce GTX 1080 Ti gets an average of 112.4fps, followed by the GTX 1080 at 79.1fps, the GTX 1070 at 78.1fps, and the GTX 970 SC at 46.3fps. Only the GTX 1070 sees a difference in this run, though possibly due to some procedurally-generated variability.

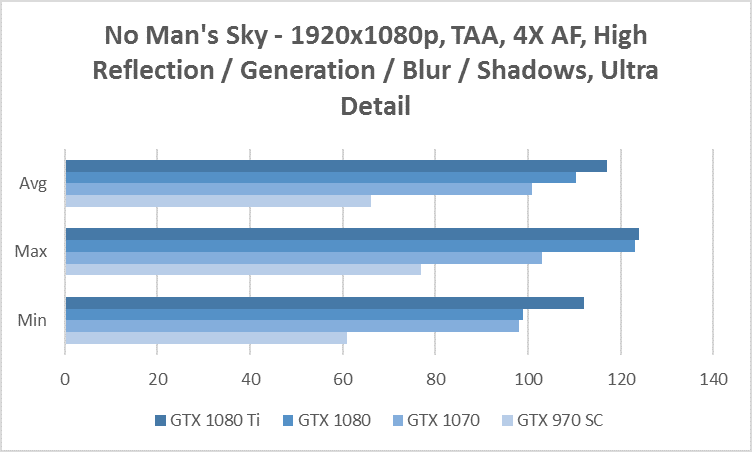

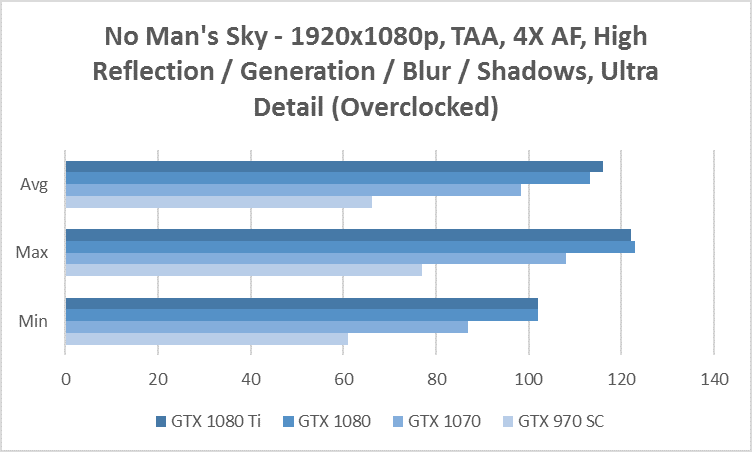

Results – 1920x1080p

In the default clock results at 1080p, the Geforce GTX 1080 Ti gets an average of 117.1fps, followed by the GTX 1080 at 110.4fps, the GTX 1070 at 100.9fps, and the GTX 970 SC at 66.2fps.

In the overclocked results at 1080p, the Geforce GTX 1080 Ti gets an average of 115.9fps, followed by the GTX 1080 at 113.3fps, the GTX 1070 at 102.2fps, and the GTX 970 SC at 66.2fps. Again, there is not much of a difference to be made when overclocking, at least for this game.

Far Cry 4

The Far Cry franchise has been around since 2004, when Crytek developed the commercially successful first game in the series, leading to the franchise’s acquisition by Ubisoft and the eventual development of three sequels and six spin-offs, all developed by Ubisoft Montreal. The latest installment in the series is another open world, action adventure first-person shooter that takes the player through the mountains and forests of the fictional Himalayan country Kyrat to battle some seriously dangerous wildlife, and occasional enemy soldiers along the way. The game is based on Dunia Engine 2 and runs using the DirectX 11 API, making use of new ambient occlusion methods along with a wide range of complex geometry in rock formations and woodlands, foliage and fur shaders.

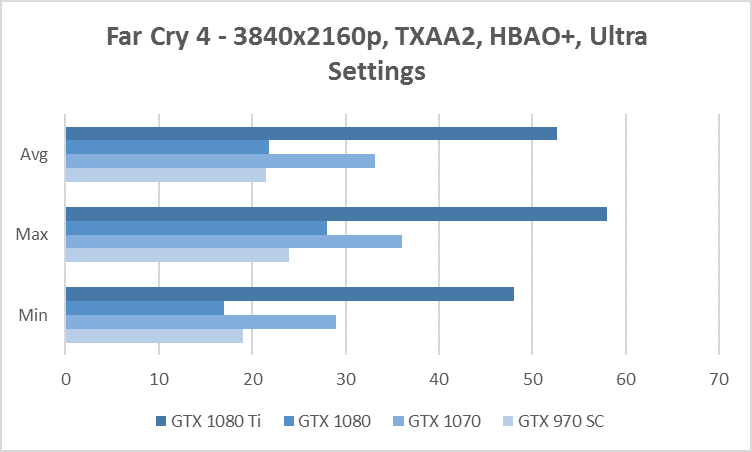

Results – 3840x2160p

In the default clock results at 4K, the Geforce GTX 1080 Ti gets an average of 33.2fps, followed by the GTX 1070 at 33.2fps, the GTX 970 SC at 21.5fps, and the GTX 1080 at 21.2fps. In this test, the GTX 1080 seems to perform lower than the GTX 1070, likely due to some temperature throttling as a result of a very warm radiator unit.

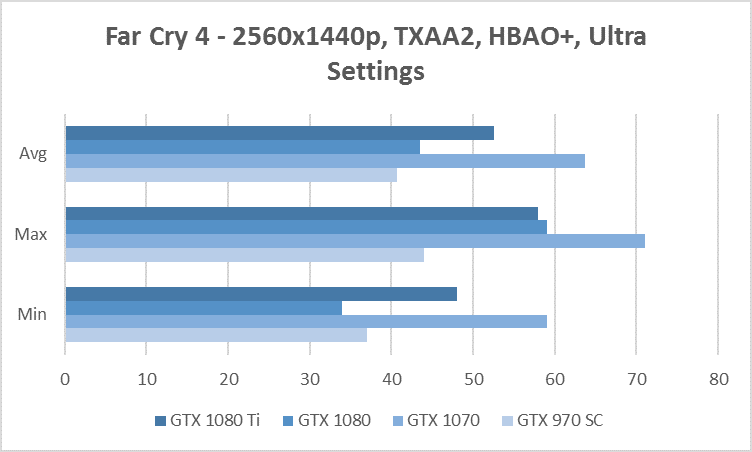

Results – 2560x1440p

In the default clock results at 1440p, the Geforce GTX 1080 Ti gets an average of 94.3fps, followed by the GTX 1070 at 63.7fps, the GTX 1080 at 43.8fps, and the GTX 970 SC at 40.7fps. Once again, the GTX 1080 has trailed behind the 1070, and the only reasonable explanation we can offer is that the card was running exceptionally hot and had to be thermally throttled down.

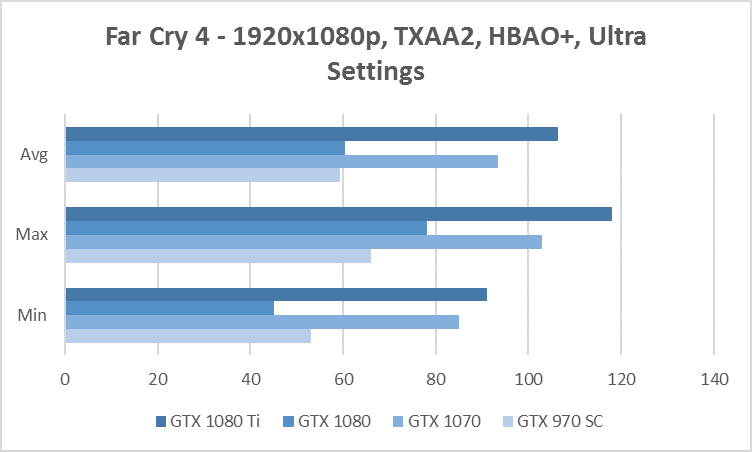

Results – 1920x1080p

In the default clock results at 1080p, the Geforce GTX 1080 Ti gets an average of 106.4fps, followed by the GTX 1070 at 93.5fps, the GTX 1080 at 60.3fps and the GTX 970 SC at 59.4fps. It appears the GTX 1080 was throttled down once again due to high temperatures. Next time we will make sure to give the FTW Hybrid a proper mounting using the included fan rather than a Corsair case fan.

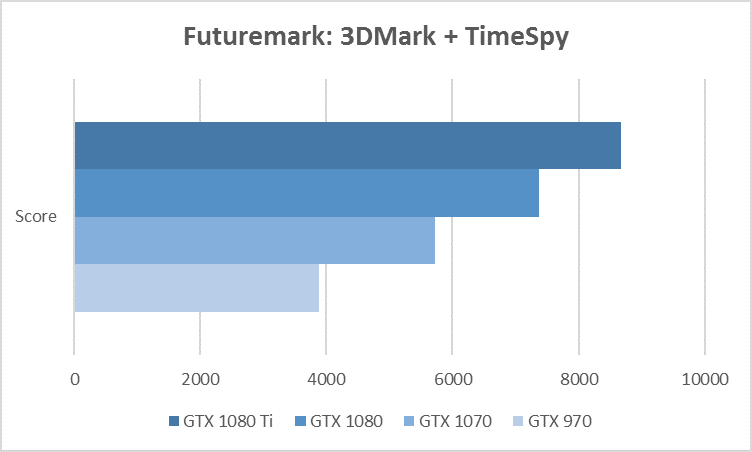

Results – 3DMark + Time Spy

Futuremark has been at the forefront of PC benchmarking since 1998, when it released one of the first 3D benchmarks aimed directly at the 3D gaming community. The company has since developed its software releases based on successive releases of the Microsoft DirectX API, allowing it to bring a variety of more complex geometric and shading renderers into the mix with each successive release. Now with the latest version of 3DMark, the company has added a number of DirectX 11 feature tests to benchmark 4K-ready gaming PCs, along with a new DirectX 12 feature test called Time Spy with support for asynchronous compute, explicit multi-adapter, and multi-threading.

In our Time Spy benchmark results at the default 1440p resolution, the Geforce GTX 1080 Ti gets a score of 8,678, followed by the GTX 1080 at 7,454, the GTX 1070 at 5,724, and the GTX 970 SC at 3,880. The results show a near-linear progression between each card, though the increase is just a bit smaller between the two top-end cards. We expect the Titan X to be put in a close second place in this test.

Of course, the results contained the usual validation warnings, saying that the card is not recognized and the driver (378.78) is not yet approved. The driver Nvidia sent us for testing was not WHQL certified, though it is expected to be the same as the final release.

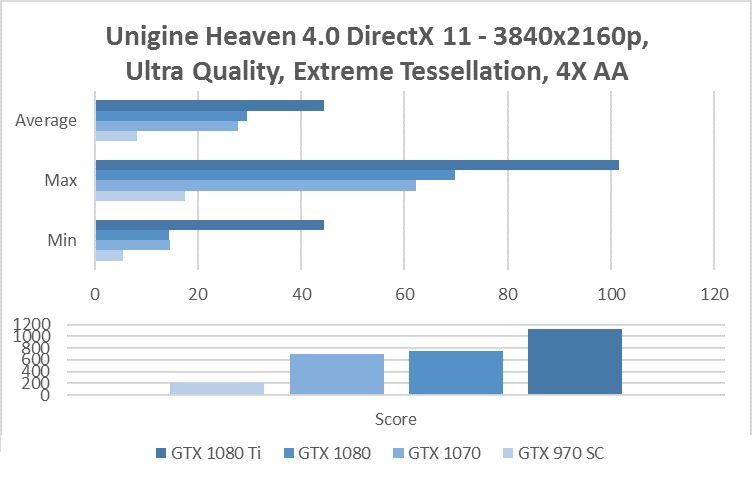

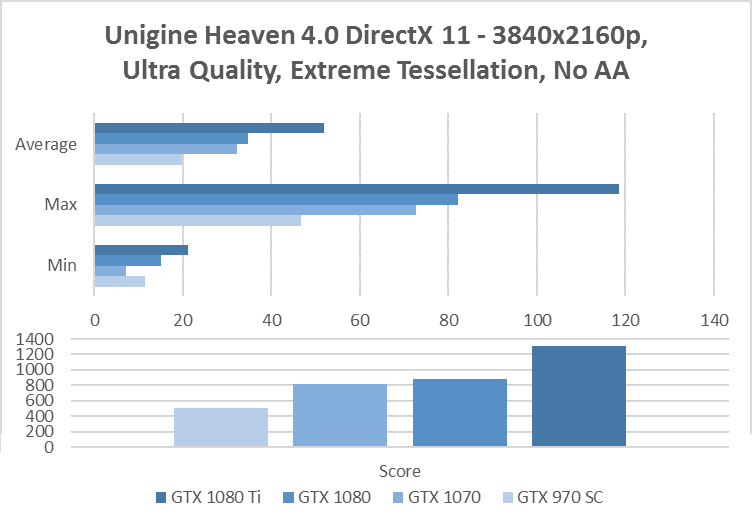

Results – Unigine Heaven 4.0 Benchmark

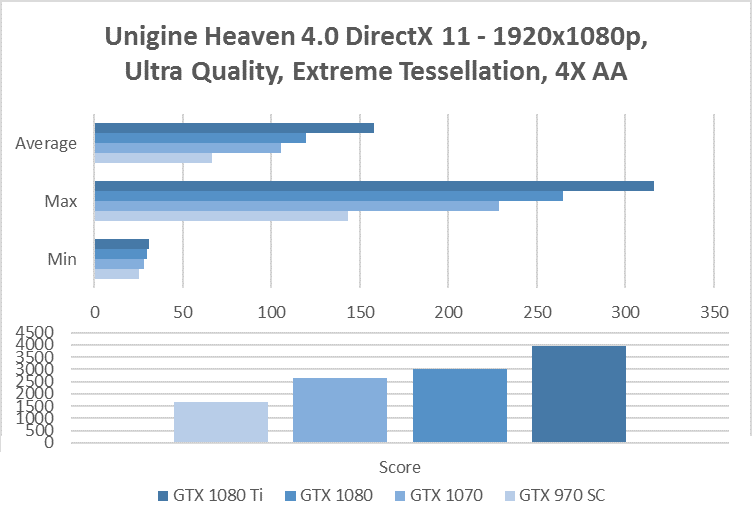

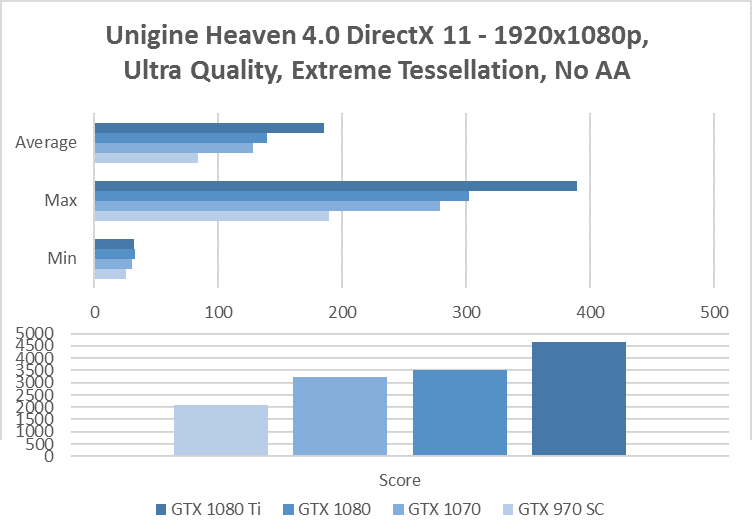

The Heaven Benchmark by Unigine is a tessellation-heavy benchmark that uses DirectX 11 to make many parallel draw calls that still push modern graphics cards to their limits. Features include a dynamic sky with volumetric clouds, real-time global illumination, a cinematic camera flythrough in benchmark mode, and several customizable presets for a convenient comparison of results.

For this test, we are benchmarking one pass with 4X AA enabled and another with AA off.

Results – 3840x2160p

In the 4K test with 4X AA, the Geforce GTX 1080 Ti gets an average of 44.5fps and a score of 1,122, followed by the GTX 1080 at 29.6fps and a score of 746, the GTX 1070 at 27.8fps and a score of 700, and the GTX 970 SC at 8.3fps and a score of 208.

In the 4K test without AA, the Geforce GTX 1080 Ti gets an average of 51.9fps and a score of 1,307, followed by the GTX 1080 at 34.8fps and a score of 875, the GTX 1070 at 32.3fps and a score of 815, and the GTX 970 SC at 19.9fps and a score of 502.

Results – 1920x1080p

In the 1080p test with 4X AA, the Geforce GTX 1080 Ti gets an average of 157.8fps and a score of 3,974, followed by the GTX 1080 at 119.7fps and a score of 3,016, the GTX 1070 at 105.2fps and a score of 2,651, and the GTX 970 SC at 66.4fps and a score of 1,672.

In the 1080p test without AA, the Geforce GTX 1080 Ti gets an average of 185.2fps and a score of 4,665, followed by the GTX 1080 at 139.3fps and a score of 3,509, the GTX 1070 at 128.3fps and a score of 3,233, and the GTX 970 SC at 83.2fps and a score of 2,102.

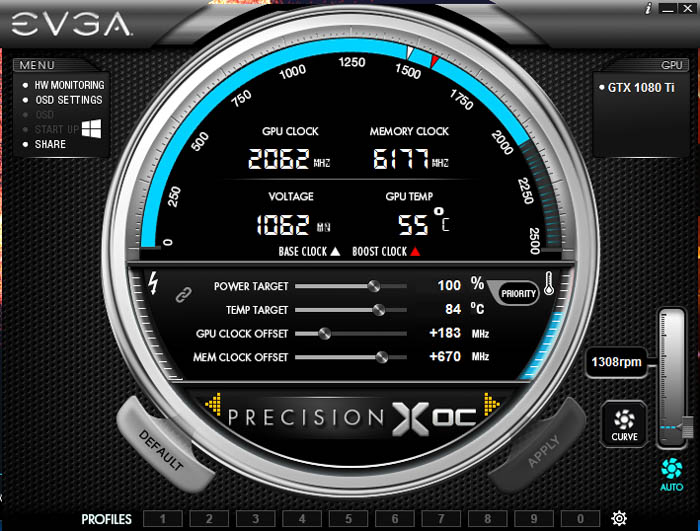

Overclocking

Nvidia’s Pascal cards have shown some neat overclocking capabilities since the GTX 1070 and GTX 1080 first appeared on the scene last summer. Those cards are capable of achieving 400 to 500MHz base clock increases right out of the box, and it appears the GTX 1080 Ti is no exception.

Using EVGA Precision XOC 6.0.9, we were able to get the base clock offset up to between 180 and 190MHz, and the memory offset up to 675MHz, before seeing any screen crashes or lock-ups. This was using stock voltages and keeping original fan speed curves. We suspect the company’s retail partners will be able to clock their units around the same or even higher depending on the type of cooling systems involved.

Conclusion – The most advanced consumer GPU gets over a 40 percent price cut

Nvidia has been able to give developers a remarkable tool for maintaining GPU-accelerated workflows and developing applications since CUDA was first released back in 2007. From Fermi to Pascal, consumers have witnessed about a fivefold increase in frames-per-second performance with a single card in modern PC games. Now that 4K gaming has been building momentum for the past two years, it only seemed appropriate to release a high-performance Geforce product at a lower barrier to entry for those who need the highest pixel and texel fill rates for their displays.

Two years ago, the Maxwell-based Titan X basically became a niche product for hardcore enthusiasts who were eager to meet even the basic memory demands of 4K and higher resolutions, and aside from developers, these customers were few and far between. Today’s introduction of the Geforce GTX 1080 Ti at a price point of $699, along with the GTX 1080’s price reduction to $499, will ensure that more middle-income folk will be able to experience the latest generation of “The Way It’s Meant to be Played” at considerably higher resolutions without being limited by larger framebuffer requirements.

In the race up to HBM memory for consumer graphics, which often comes at a very high price, Nvidia has been able to provide a more efficient alternative in the form of second-generation GDDR5X paired with “tiled caching,” which improves cache locality and reduces memory traffic. With the GTX 1080 Ti, the benefit is a 2.25x increase in effective memory bandwidth from color compression, and roughly another third on top of this by combining compression with tiled caching.

Last year with TSMC’s 16nm process, it became cheaper to manufacture the GP104 powering the GTX 1080 than the GM200 that powered the Maxwell-based Geforce GTX 980 Ti and Geforce GTX Titan X. With improvements from a redesigned GDDR5X memory architecture, along with further reductions in power, temperature and voltage (PVT) loss, Nvidia can now deliver a card that is not only more cost-efficient to produce, but more power-friendly for consumers to run in their PCs at home.

Now that the Geforce GTX 1080 Ti has undercut the Titan X Pascal on price and surpassed it in benchmarks and compute performance, it is possible that the company will announce a second revision of the Titan X during GTC at a lower price point, albeit using a fully-enabled P100 with 60 SMs (3,840 cores) versus the current 56 SMs and 3,584 cores.

Necessary for 3840x2160p above typical monitor refresh rates

For gamers who want the absolute best-in-class performance without sacrificing quality or framerates, or spending more on a graphics card than on the rest of the PC, the Geforce GTX 1080 Ti is capable of providing an average of 65 to 70fps in some of the most demanding titles at Ultra HD resolutions. In Just Cause 3 at 3840x2160p resolution, we are talking about the difference between 67.4fps on the GTX 1080 Ti and 49fps with the standard GTX 1080. In No Man’s Sky at the same resolution, the difference is 69.4fps versus 45.2fps with the GTX 1080. In synthetic benchmarks like Unigine Heaven, the difference between the Geforce GTX 1080 Ti and the standard GTX 1080 can be as much as 51.9fps versus 34.8fps. For users driving displays with higher pixel counts, these are much-needed performance gains. Take advantage of the pricing Nvidia is offering before a new Titan series card is announced, or wait for the competition to announce the full range of details on its upcoming Vega series lineup. The choice is yours.
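For those who prefer to see the gaps above as relative gains, here is a short sketch that computes the GTX 1080 Ti's percentage lead over the GTX 1080 from the 4K averages quoted in this conclusion. The function and variable names are ours.

```python
def percent_gain(new_fps: float, old_fps: float) -> float:
    """Frame rate gain of `new_fps` over `old_fps`, in percent."""
    return (new_fps - old_fps) / old_fps * 100.0


# (GTX 1080 Ti fps, GTX 1080 fps) at 3840x2160, default clocks, from this review.
results_4k = {
    "Just Cause 3": (67.4, 49.0),
    "No Man's Sky": (69.4, 45.2),
    "Unigine Heaven (no AA)": (51.9, 34.8),
}

gains_4k = {
    title: percent_gain(ti_fps, gtx_1080_fps)
    for title, (ti_fps, gtx_1080_fps) in results_4k.items()
}

for title, gain in gains_4k.items():
    print(f"{title:24s} +{gain:.1f}%")
```

Across these three tests the lead ranges from the high 30s to the low 50s in percentage terms, which is a useful way to weigh the $200 price difference between the two cards.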