Nvidia GTC 19 Keynote failed to impress

AI is the elephant in the room

First of all, I want to apologise to all the hard-working people who spent so many hours on the production and material of the keynote; this is not about you. Everyone did a great job, but the content chosen was mostly just utterly boring.

IBM gets neural networks to conceptually think

MIT-IBM Watson AI Lab find GANs a powerful tool

Boffins working at the MIT-IBM Watson AI Lab have been playing around with GANs, or generative adversarial networks, because these networks are providing clues about how neural networks learn and reason.

Xilinx acquired DeePhi Tech for AI and ML

Accelerate data center and intelligent edge

Xilinx is getting big on intelligence and adaptive computing, and the company has just announced that it has acquired DeePhi Technology Co., Ltd (DeePhi Tech), a company that specializes in machine learning, deep compression, pruning, and system-level optimization for neural networks. Let’s just sum this up and say that it is good at AI and ML.

Xilinx helped AMD with HBM 2

High bandwidth is the key

There is an interesting story, very little known to the public, about the cooperation between AMD and Xilinx on HBM memory.

AMD shows Vega 7nm 32GB HBM2

RayTrace render demo

None other than Lisa Su, the fearless leader of AMD, has announced the 7nm Vega, and just as Fudzilla reported a few months back, it is a Radeon Instinct-only part that won’t make it to a gaming card. David Wang, SVP of Engineering at the Radeon Technologies Group, went into a few more details.

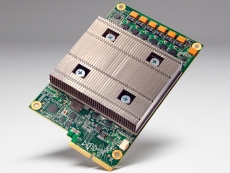

Google makes major leap forward with new Tensor Processing Unit

Accelerator will move Moore’s Law forward by seven years

On Wednesday, at Google’s annual I/O developer conference in Mountain View, California, the company announced a revolutionary new accelerator for machine learning that it expects to move the recently slowing Moore’s Law forward by at least three chip generations, or seven years.