The deputy commissioner, Stephen Bonner, said it was the first time the regulator had issued a blanket warning on the ineffectiveness of a new technology, but one that is justified by the harm that could be caused if companies made meaningful decisions based on meaningless data.

Bonner said none of this tech was backed by science, and the watchdog was worried that a few organisations were looking into these technologies as possible ways to make pretty important decisions.

While using emotional analysis technology isn’t in itself a problem, it should not be seen as anything more than entertainment.

“There are plenty of uses that are fine, mild edge cases … if you’ve got a Halloween party and you want to measure who’s the most scared at the party, this is a fun interesting technology. It’s an expensive random number generator, but that can still be fun.

“But if you’re using this to make important decisions about people – to decide whether they’re entitled to an opportunity, or some kind of benefit, or to select who gets a level of harm or investigation, any of those kinds of mechanisms … We’re going to be paying very close attention to organisations that do that. What we’re calling out here is much more fundamental than a data protection issue. The fact that they might also breach people’s rights and break our laws is certainly why we’re paying attention to them, but they just don’t work.

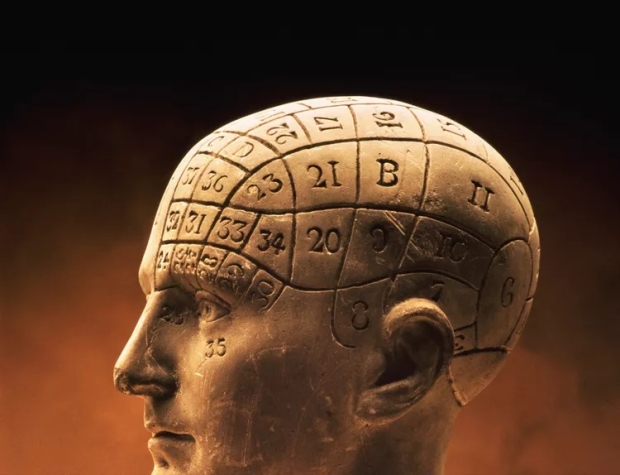

“There is quite a range of ways scientists close to this dismiss it. I think we’ve heard ‘hokum’, we’ve heard ‘half-baked’, we’ve heard ‘fake science’. It’s a tempting possibility: if we could see into the heads of others. But when people make extraordinary claims with little or no evidence, we can call attention to that.”

Other concerns are more fundamental: the regulator has warned that it is difficult to apply data protection law when technology such as gaze tracking or fingerprint recognition “could be deployed by a camera at a distance to gather verifiable data on a person without physical contact with any system being required”. Gathering consent from, say, every single passenger passing through a station would be all but impossible.

In spring 2023, the regulator will be publishing guidance on how to use biometric technologies, including facial, fingerprint and voice recognition. The area is particularly sensitive, since “biometric data is unique to an individual and is difficult or impossible to change should it ever be lost, stolen or inappropriately used”.