As noted by Nvidia, the GH200-powered systems join more than 400 system configurations powered by different combinations of Nvidia's latest CPU, GPU, and DPU architectures, including Nvidia Grace, Hopper, Ada Lovelace, and BlueField.

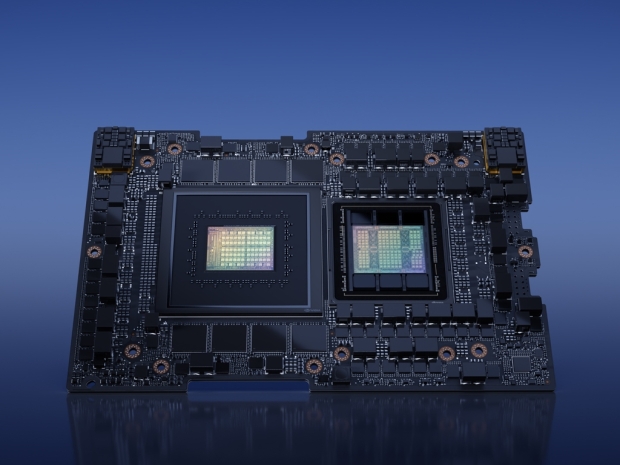

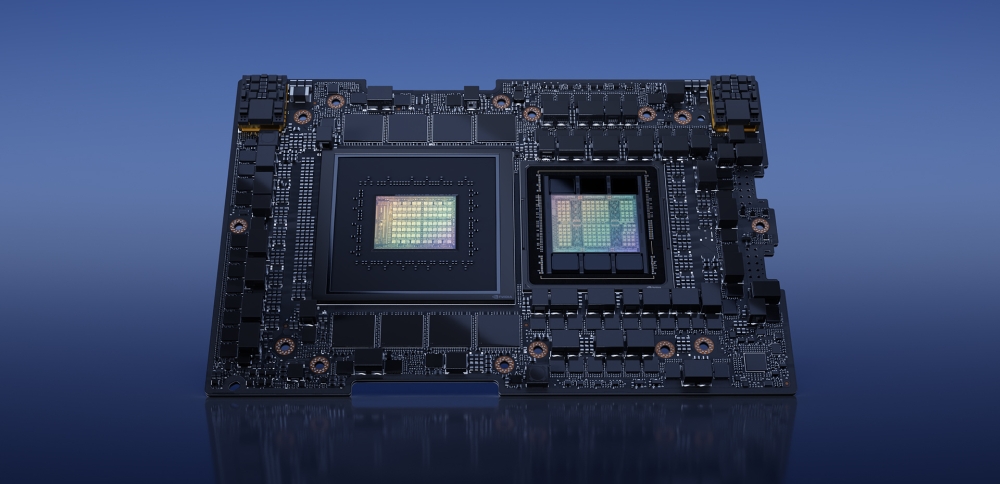

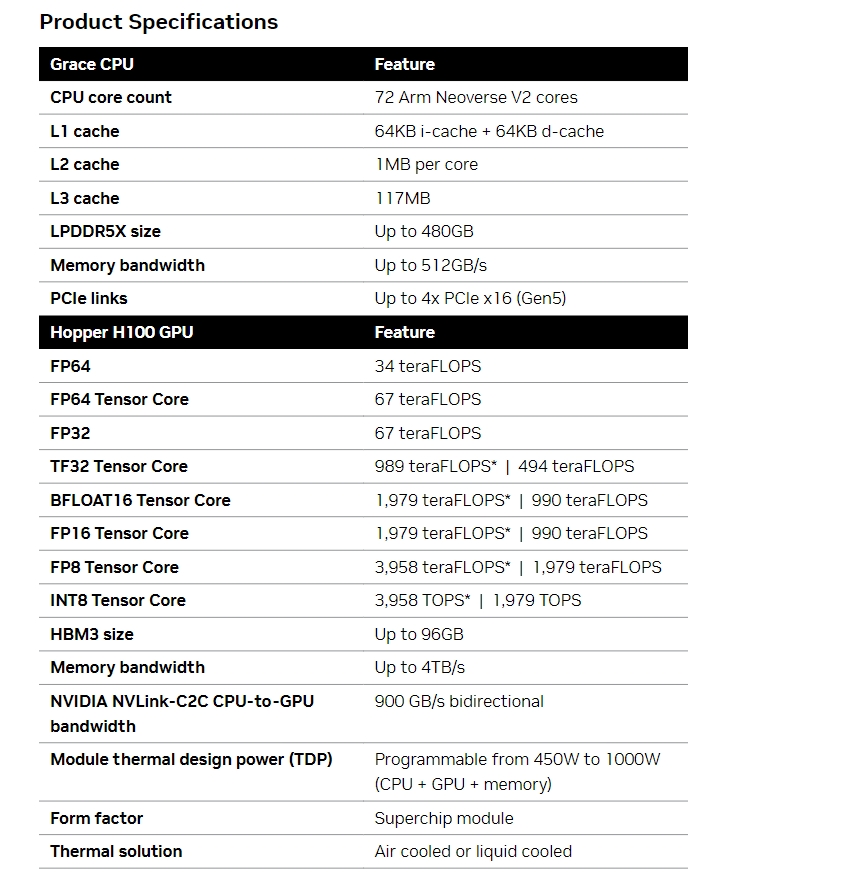

The GH200 Grace Hopper Superchip combines the Arm-based Nvidia Grace CPU and the Hopper GPU architecture in a single module, connecting them with the Nvidia NVLink-C2C interconnect, which delivers up to 900GB/s of total bandwidth.

“Generative AI is rapidly transforming businesses, unlocking new opportunities and accelerating discovery in healthcare, finance, business services and many more industries,” said Ian Buck, vice president of accelerated computing at NVIDIA. “With Grace Hopper Superchips in full production, manufacturers worldwide will soon provide the accelerated infrastructure enterprises need to build and deploy generative AI applications that leverage their unique proprietary data.”

Nvidia was keen to note that global hyperscalers and supercomputing centers in Europe and the U.S. are among several customers that will have access to GH200-powered systems. Partners mentioned at the Nvidia event include AAEON, Advantech, Aetina, ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, Pegatron, QCT, Tyan, Wistron and Wiwynn. Global server manufacturers such as Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo, Supermicro and Eviden, an Atos company, are also on the list, as are cloud partners for the Nvidia H100, which include Amazon Web Services (AWS), Cirrascale, CoreWeave, Google Cloud, Lambda, Microsoft Azure, Oracle Cloud Infrastructure, Paperspace and Vultr.

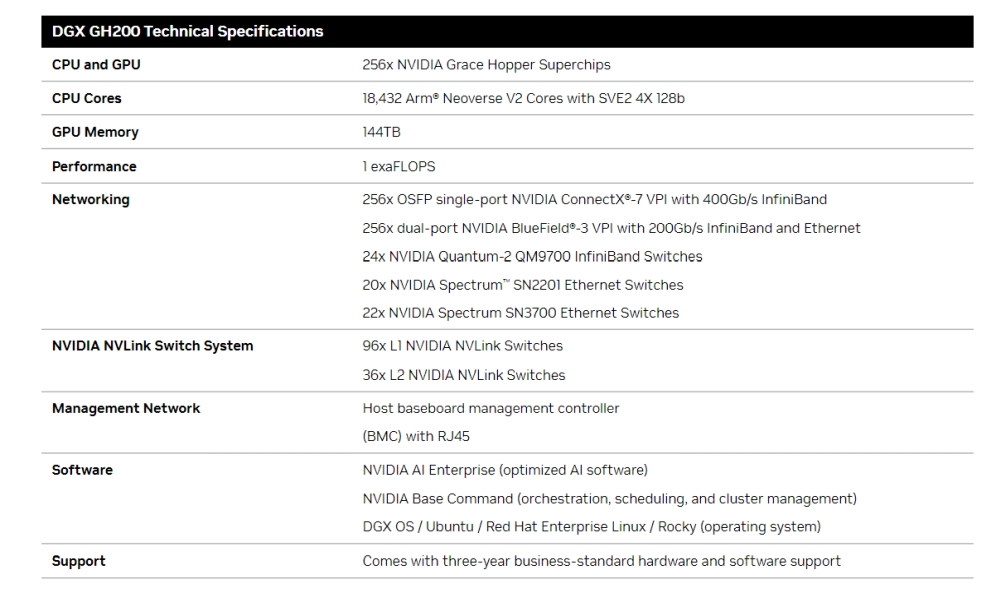

Nvidia also announced the DGX GH200 AI Supercomputer, a "new class of AI supercomputer" that connects 256 GH200 Grace Hopper Superchips into a massive 1-exaflop, 144TB GPU supercomputer for generative AI workloads.

Beyond the 256 Nvidia Grace Hopper Superchips and 144TB of GPU memory, the system packs 18,432 Arm Neoverse V2 cores, 96 L1 and 36 L2 Nvidia NVLink Switches, and an assortment of network adapters and switches.
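To put those totals in per-chip terms, here is a quick back-of-the-envelope calculation in Python. It uses only the figures quoted above and rests on two assumptions that do not come from Nvidia's announcement: that cores and memory are spread evenly across the 256 superchips, and that a terabyte is counted as 1,024GB.

```python
# Figures quoted in the article for a single DGX GH200 system.
SUPERCHIPS = 256
TOTAL_CPU_CORES = 18_432
TOTAL_GPU_MEMORY_TB = 144

# Assumption (not from the announcement): resources are divided evenly
# across superchips, and 1 TB is counted as 1024 GB.
GB_PER_TB = 1024

cores_per_superchip = TOTAL_CPU_CORES // SUPERCHIPS
memory_per_superchip_gb = TOTAL_GPU_MEMORY_TB * GB_PER_TB // SUPERCHIPS

print(f"CPU cores per superchip: {cores_per_superchip}")                 # 72
print(f"GPU-addressable memory per superchip: {memory_per_superchip_gb} GB")  # 576 GB
```

Under those assumptions, each Grace Hopper Superchip contributes 72 Arm Neoverse V2 cores and 576GB of GPU-addressable memory to the system.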

“Generative AI, large language models and recommender systems are the digital engines of the modern economy,” said Jensen Huang, founder and CEO of NVIDIA. “DGX GH200 AI supercomputers integrate NVIDIA’s most advanced accelerated computing and networking technologies to expand the frontier of AI.”

Google Cloud, Meta, and Microsoft will be the first to gain access to the DGX GH200, and Nvidia is also building its own DGX GH200-based AI supercomputer. Named Nvidia Helios, it will comprise four DGX GH200 systems interconnected with Nvidia Quantum-2 InfiniBand networking, for a total of 1,024 Grace Hopper Superchips. Nvidia expects it to come online by the end of this year.

Systems built on Nvidia GH200 Grace Hopper Superchips and the DGX GH200 supercomputers are both expected to be available later this year.