Companies will be able to use both of these technologies in on-premises AI implementations, and the service may well also be offered through IBM's cloud offerings.

Implementing AI is not a walk in the park. Organizations face challenges first when experimenting with a "proof of concept" and later when moving to production and scaling across the enterprise. Building an enterprise AI solution involves a team that includes data engineers, data scientists, business analysts, developers to deploy the models, app developers to build on them and operations (DevOps) staff to monitor the whole thing.

Choosing the right hardware, installing Linux or another operating system, and installing and compiling frameworks and the necessary software is far too complicated for most enterprises. Enterprises need something much easier, almost a flip-the-switch solution.

When it comes to AI infrastructure, the stack is also complex. The top layer consists of applications and microservices specific to the industry segment. The second layer is the AI APIs, such as Watson or in-house APIs and custom models that accelerate speech, vision, NLP or sentiment analysis.

The third layer involves machine and deep learning libraries and frameworks such as TensorFlow, Caffe, SparkML or H2O. The fourth layer is distributed computing, which involves Spark or MPI. Finally, you get to the "data lake" and data storage layer, which involves Hadoop HDFS and NoSQL databases used to transform and prepare your data.

Of course, you also need accelerated servers and storage as part of the accelerated infrastructure. Convincing your business manager that this is a good idea is another level of complexity in its own right, as it will take quite some time before you get meaningful results that you can include in your enterprise workflow and that actually save money.

PowerAI - an integrated platform that just works

IBM's answer to these problems for enterprise customers is called PowerAI Enterprise, and, together with an on-premises AI infrastructure reference architecture, it will help organizations jump-start AI and deep learning projects. The new set of tools will help remove the obstacles to moving from experimentation to production and ultimately to enterprise-scale AI.

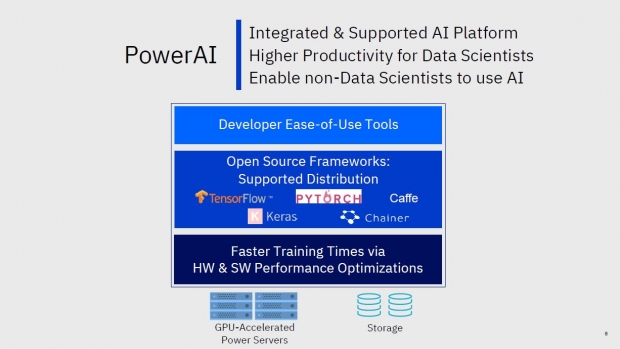

PowerAI will be a set of easy-to-use developer tools sitting on top of open source frameworks including TensorFlow, PyTorch, Caffe, Keras and Chainer. Hardware and software performance optimizations will allow faster training times. IBM claims it can offer CPU- as well as GPU-accelerated Power servers, along with the necessary storage solutions. IBM has an AC922 platform with six water-cooled Volta V100 32 GB cards and 2x16 Power9 cores, which we described here.

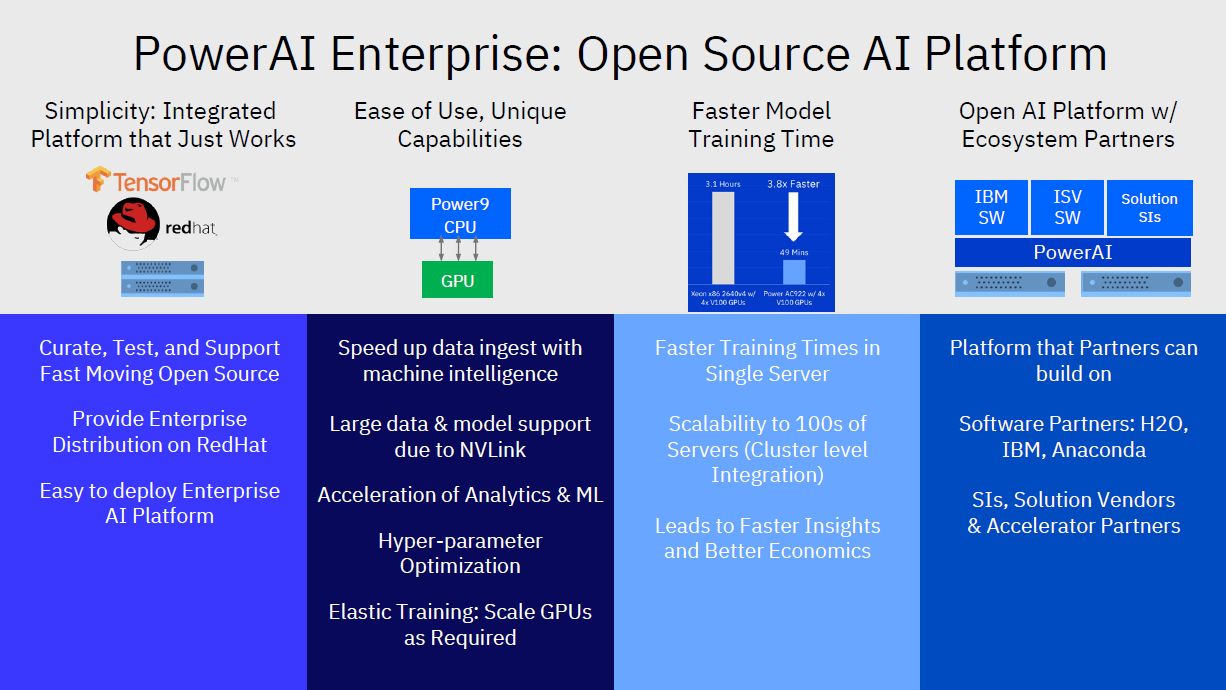

PowerAI Enterprise is an open source AI platform that emphasizes simplicity, delivered as an integrated platform that IBM claims just works. The combination of the TensorFlow framework and the Red Hat Enterprise Linux distribution will help customers curate, test, support and move faster to an open source AI environment, said Big Blue.

The combination of Power9 CPUs and Nvidia GPUs in Power9 machines can speed up data ingestion for machine intelligence, support large data sets and models through super-fast interconnects such as NVLink, and accelerate analytics and machine learning. The platform also supports hyperparameter optimization as well as "elastic" training across GPUs.

PowerAI Enterprise is an open source AI platform with fast model training times on a single server, says the firm, and this can easily be scaled up to 100 servers at the cluster level. This will lead to faster insights and better economics, said the company.

The open AI platform, together with its "ecosystem" partners, will offer a mix of IBM software, products from independent software vendors (ISVs) and system integrator solutions, all running on Power9 hardware.

Experimentation - Production - Expansion

The benefit, said IBM, is that this is a platform that partners can build on, and its software partners include H2O and Anaconda.

The reference architecture has been designed for real-world problems and AI workloads, using IBM Spectrum Computing and IBM Storage to improve AI performance, throughput and user productivity and to unify data management across the AI workflow.

Clients like Wells Fargo are already using it for financial risk modeling. The automotive industry is using it for IoT sensor data, and the top five global banks intend to use it to build better client profiles using Spark and ML. The CORAL national lab supercomputer, said to be the most powerful and fastest supercomputer in the world, is powered by Big Blue's gear and is tailored for AI workloads.

The bottom line, said Big Blue, is that PowerAI Enterprise will get enterprise clients up to speed faster with a complete AI-ready platform covering both hardware and software, so they can quickly go from the AI experimentation phase to a production phase that helps them save or make money. After that, the ultimate goal is an expansion phase, as IBM PowerAI Enterprise is built to scale. IBM tells us that additional details can be found on the company's blog.