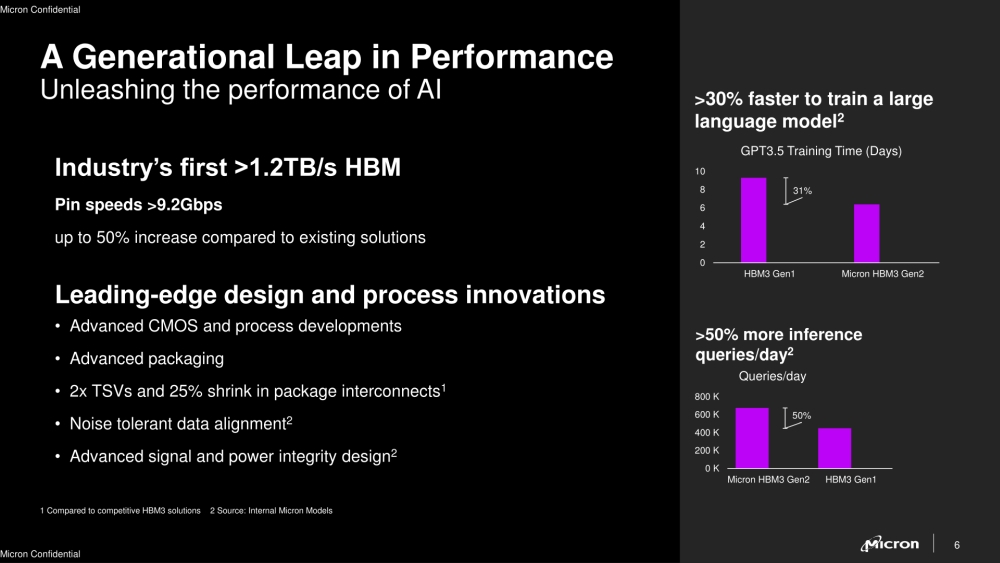

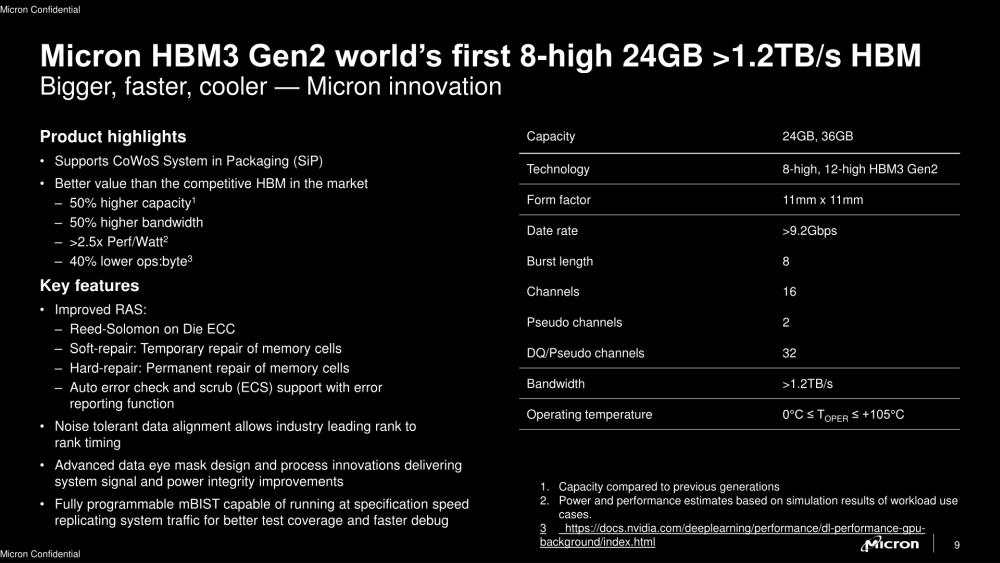

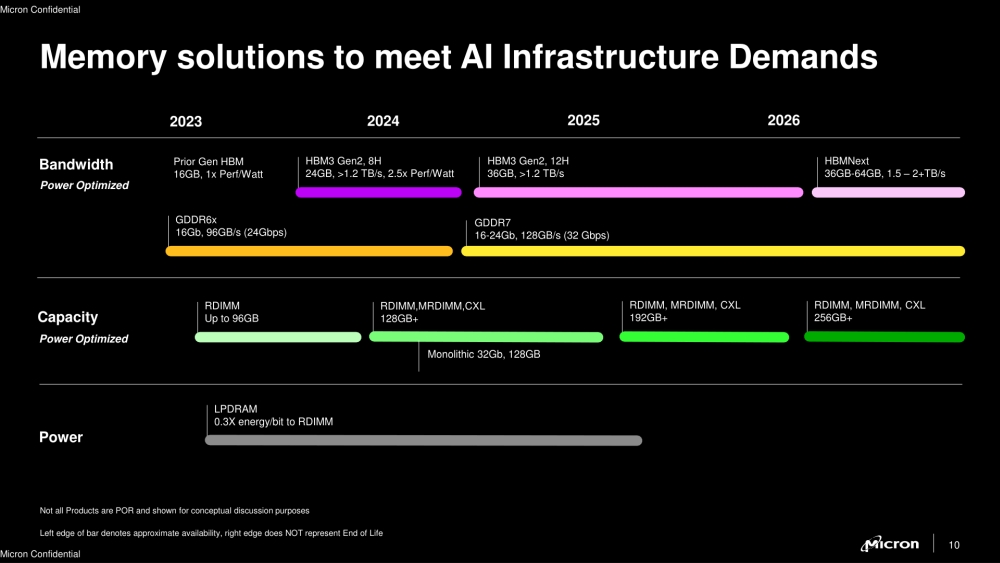

Micron was keen to note that it is all built on its own industry-leading 1β (1-beta) DRAM process node, which allows it to pack 24Gb DRAM dies into an 8-high cube with 24GB of capacity. Micron also plans to bring a 12-high stack with 36GB capacity in Q1 2024. Compared to currently available HBM3, the new HBM3 Gen2 offers a 50 percent improvement in bandwidth, a pin speed of over 9.2Gb/s, and 2.5 times the performance per watt of previous generations.
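The capacity figures follow directly from die density and stack height, and the pin speed gives a rough idea of per-stack bandwidth. The short Python sketch below works through that arithmetic. The function names are ours, and the 1024-bit interface width is an assumption based on the HBM3 standard rather than something stated in Micron's announcement, so treat the bandwidth result as a back-of-the-envelope estimate.

```python
def stack_capacity_gb(die_density_gbit: float, stack_height: int) -> float:
    """Capacity of one HBM cube in gigabytes (8 bits per byte)."""
    return die_density_gbit * stack_height / 8


def peak_bandwidth_gb_s(pin_speed_gbit_s: float,
                        interface_width_bits: int = 1024) -> float:
    """Rough peak bandwidth of one stack in GB/s.

    interface_width_bits defaults to 1024, which is an assumption taken
    from the HBM3 standard, not a figure from the article.
    """
    return pin_speed_gbit_s * interface_width_bits / 8


# 24Gb dies in an 8-high cube and in the planned 12-high stack.
print(stack_capacity_gb(24, 8))    # 24.0 (GB)
print(stack_capacity_gb(24, 12))   # 36.0 (GB)

# Estimated bandwidth at the quoted 9.2Gb/s pin speed (a lower bound,
# since the article says "over 9.2Gb/s").
print(peak_bandwidth_gb_s(9.2))
```

Under that assumed interface width, 9.2Gb/s per pin works out to a little under 1.2TB/s per stack.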

According to Micron, these HBM advancements, driven by the artificial intelligence (AI) data center market and its constant push for higher performance, capacity, and power efficiency, reduce training times for large language models and provide a superior total cost of ownership (TCO).

“Micron’s HBM3 Gen2 technology was developed with a focus on unleashing superior AI and high-performance computing solutions for our customers and the industry,” said Praveen Vaidyanathan, vice president and general manager of Micron’s Compute Products Group. “One important criterion for us has been the ease of integrating our HBM3 Gen2 product into our customers’ platforms. A fully programmable Memory Built-In Self Test (MBIST) that can run at the full specification pin speed positions us for improved testing capability with our customers, creates more efficient collaboration and delivers a faster time to market.”

"At the core of generative AI is accelerated computing, which benefits from HBM high bandwidth with energy efficiency,” said Ian Buck, vice president of Hyperscale and HPC Computing at NVIDIA. “We have a long history of collaborating with Micron across a wide range of products and are eager to be working with them on HBM3 Gen2 to supercharge AI innovation.”

Previously, Micron announced 96GB DDR5 modules based on its 1α (1-alpha) 24Gb monolithic DRAM die for capacity-hungry server solutions, and today it introduced the 24GB HBM3 offering based on its 1β 24Gb die. It plans to bring 128GB DDR5 modules based on its 1β 32Gb monolithic DRAM die in the first half of calendar 2024.