If you have been following Nvidia closely, you will know that last year it managed to ship P100-based cards and systems only in late September. Even then, an Nvidia DGX-1 system packed with eight GPUs, at a steep price of $100,000, was hard to get. This year's system, packed with eight Volta GPUs, is even more expensive.

Nvidia's secret sauce is artificial intelligence (AI). Nvidia realized that there are many tasks where specialized accelerators based on a GPU architecture can do a much better job than a standard multitasking CPU.

Nvidia's stock has skyrocketed to its current $162.51, as Jensen and his capable team have convinced investors and Wall Street that AI is the sexiest date in town. AI is also what will teach cars to drive themselves, and we can thank visionaries such as Elon "never meets the deadline" Musk for the fact that Wall Street now thinks it gets it.

So there is quite a lot of demand for Volta-based cards and compute systems, and the reason is rather simple: a new GPU lets you train AI and machine learning systems much faster.

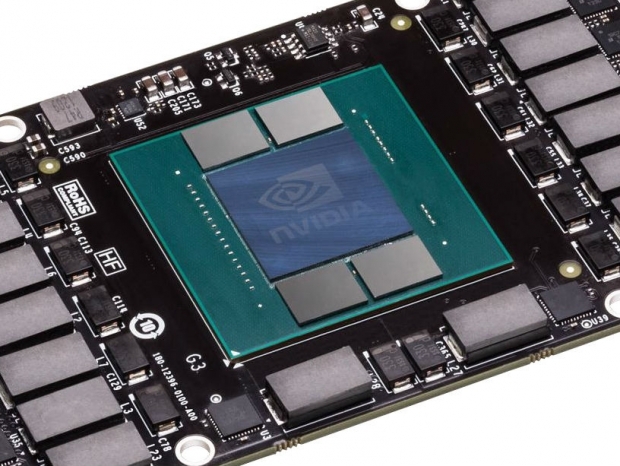

The new Volta V100 GPU has 640 dedicated Tensor Cores, units built for matrix math and meant specifically to speed up AI training tasks. This is why Volta should be more efficient than both the previous P100 generation of AI accelerators and the competition.
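For readers wondering what "matrix math" means here: according to Nvidia's Volta material, each Tensor Core performs a 4×4 matrix multiply-accumulate, D = A × B + C, taking FP16 inputs and accumulating in FP16 or FP32. The Python sketch below only emulates that arithmetic on a CPU to show the idea; it is not how the hardware is programmed (that goes through CUDA or libraries such as cuBLAS and cuDNN). The function names `to_fp16` and `tensor_core_mma` are hypothetical, and Python's double-precision float is an assumed stand-in for the FP32 accumulator.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    # struct's 'e' format packs a binary16 (half-precision) value, Python 3.6+.
    return struct.unpack("<e", struct.pack("<e", x))[0]


def tensor_core_mma(a, b, c):
    """Hypothetical emulation of one 4x4 multiply-accumulate, D = A x B + C.

    A and B are rounded to FP16 to mimic the half-precision inputs.
    Products and sums stay in Python floats (double precision), used here
    only as a stand-in for the FP32 accumulator. Also counts multiply-adds.
    """
    n = 4
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [[float(c[i][j]) for j in range(n)] for i in range(n)]
    fma_count = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                d[i][j] += a16[i][k] * b16[k][j]  # one fused multiply-add
                fma_count += 1
    return d, fma_count


# Usage: multiply a small matrix by the identity, starting from C = 0.
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
a = [[0.1 * (i + j) for j in range(4)] for i in range(4)]
zeros = [[0.0] * 4 for _ in range(4)]
d, fmas = tensor_core_mma(a, identity, zeros)
print(fmas)  # 64
```

The 4 × 4 × 4 = 64 multiply-adds per call line up with Nvidia's figure of 64 FMA operations per Tensor Core per clock. The FP16 rounding step is where speed is traded for precision: `d` comes back as the half-precision version of `a`, not `a` itself.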

Volta V100 is not a high-volume product, as its task is very specific and narrow. Many researchers will definitely want some, and an Nvidia cloud-based business model for AI should gain some traction. The big question is how much more training horsepower we are going to need, and when demand will start to decrease.

It looks like AI is in a very early phase and that there is a lot of room for growth, at least in the near future. Today we had a chance to see the DGX Station in a live demo of accelerated ray tracing running in real time, so even months after the announcement, Nvidia has found a way to deploy it for real-life workloads.