AMD's new CDNA 2 architecture is a direct evolution of the original CDNA architecture, which traces its roots to the original GCN, the same lineage that also gave us the RDNA architecture for consumer GPUs.

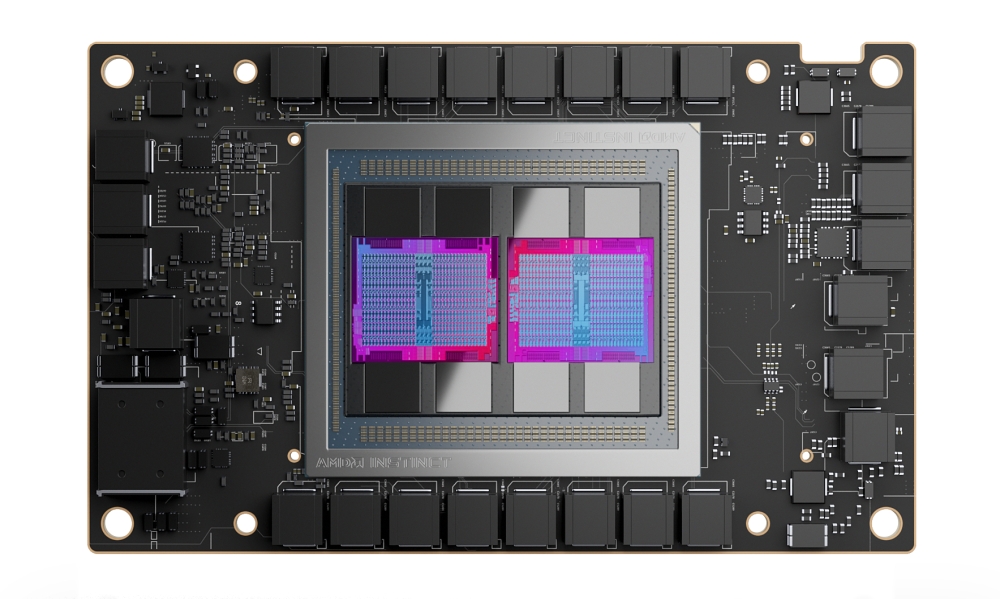

The new CDNA 2 die is built on TSMC's N6 (6nm) manufacturing process and packs a total of 29 billion transistors, at least from what we can gather from AMD's Instinct MI200 announcement. Unfortunately, there are no official details about the die size, but it appears that the GPU has 112 Compute Units (CUs), organized into four Compute Engines, and paired with eight Infinity Fabric links and four HBM2E memory controllers.

AMD Instinct MI200 series, world's most advanced data center accelerator

The AMD Instinct MI200 series has a lot to brag about, including the new CDNA 2 workload-optimized compute architecture, 3rd gen Infinity architecture support, and being the first multi-die GPU.

The AMD Instinct MI200 series will include three accelerators: the flagship MI250X and the MI250, both of which are shipping today, and the PCIe-based MI210, which is coming soon.

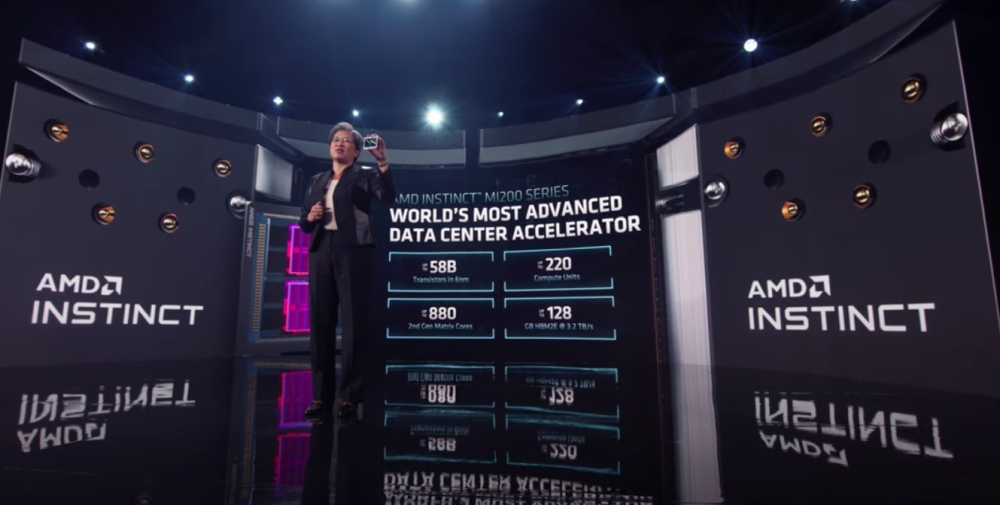

The MI250X packs two CDNA 2 GPUs with 220 CUs (110 per GPU), 880 2nd gen Matrix Cores (440 per GPU), and a total of 128GB of HBM2E at 3.2TB/s. The two GPUs are linked by four of AMD's Infinity Fabric links, offering up to 100GB/s of bi-directional bandwidth between GPUs.
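As a quick sanity check on the MI250X figures quoted above, the per-package totals can be derived from the per-GPU numbers. This is only a minimal sketch: the function name and constants are made up for illustration, and the Matrix Cores-per-CU ratio is inferred from the quoted counts rather than stated by AMD here.

```python
# Per-GPU figures as quoted in the article for the MI250X.
GPUS_PER_PACKAGE = 2
CUS_PER_GPU = 110
MATRIX_CORES_PER_GPU = 440


def package_totals(gpus, cus_per_gpu, matrix_cores_per_gpu):
    """Return (total CUs, total Matrix Cores, Matrix Cores per CU).

    The per-CU ratio is an inference from the quoted counts,
    not an officially stated figure.
    """
    total_cus = gpus * cus_per_gpu
    total_matrix_cores = gpus * matrix_cores_per_gpu
    cores_per_cu = matrix_cores_per_gpu // cus_per_gpu
    return total_cus, total_matrix_cores, cores_per_cu


totals = package_totals(GPUS_PER_PACKAGE, CUS_PER_GPU, MATRIX_CORES_PER_GPU)
print(totals)  # (220, 880, 4)
```

The totals line up with the 220 CUs and 880 Matrix Cores quoted for the full package, and suggest four Matrix Cores per CU.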

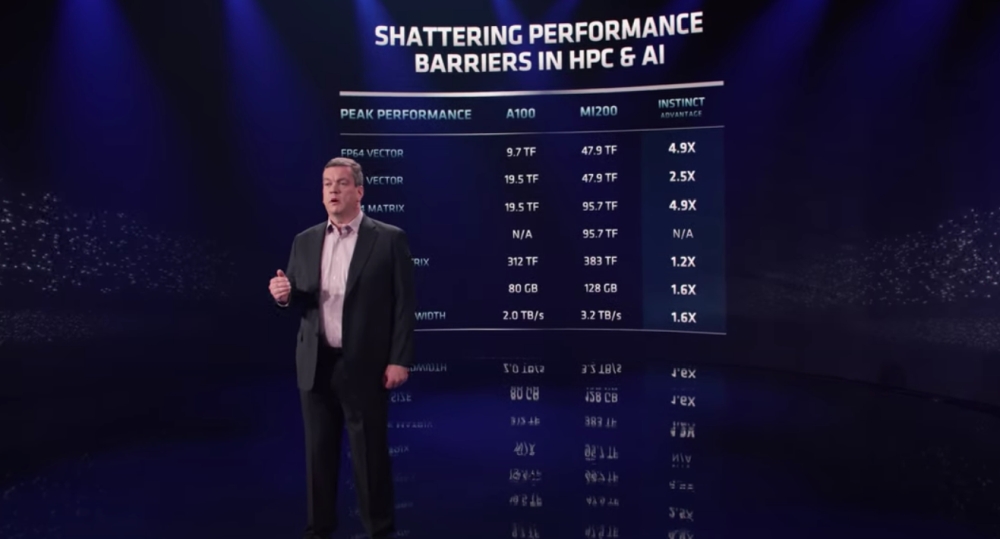

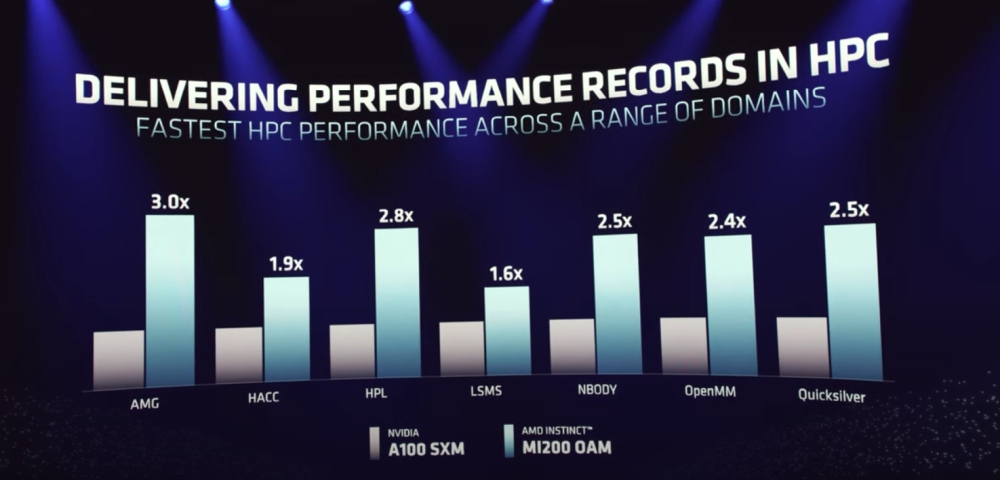

All of this is enough for 47.9 TFLOPS of FP64 Vector compute performance and 95.7 TFLOPS of FP64 Matrix compute performance. AMD was keen to compare it to the Nvidia A100, claiming a 4.9x advantage in both FP64 Vector and FP64 Matrix/Tensor performance, as well as 1.6x the memory capacity and bandwidth.
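To put those multipliers in context, the comparison can be worked backwards to see roughly what baseline AMD's ratios imply. The sketch below uses only the MI250X figures and ratios quoted above; the dictionary keys and function name are illustrative, and since the ratios are rounded, the results are approximations rather than official A100 specifications.

```python
# MI250X figures and AMD's claimed advantage ratios, as quoted in the article.
mi250x = {
    "fp64_vector_tflops": 47.9,
    "fp64_matrix_tflops": 95.7,
    "memory_gb": 128,
    "bandwidth_tbs": 3.2,
}
claimed_advantage = {
    "fp64_vector_tflops": 4.9,
    "fp64_matrix_tflops": 4.9,
    "memory_gb": 1.6,
    "bandwidth_tbs": 1.6,
}


def implied_baseline(figures, advantage):
    """Divide each figure by its claimed ratio to estimate the baseline.

    The ratios are rounded, so the output is approximate.
    """
    return {key: round(figures[key] / advantage[key], 1) for key in figures}


baseline = implied_baseline(mi250x, claimed_advantage)
for name, value in baseline.items():
    print(f"{name}: ~{value}")
```

By this estimate, the comparison point sits at roughly 9.8 TFLOPS of FP64 Vector, 19.5 TFLOPS of FP64 Matrix, 80GB of memory, and 2.0TB/s of bandwidth.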

The Instinct MI250 is a somewhat cut-down version, offering 104 CUs per GPU, but it retains 128GB of 3.2TB/s HBM2E memory, at least as far as we could gather from

Both the MI250X and the MI250 are based on the Open Compute Project Accelerator Module (OAM) form factor, and AMD has shared some details about both the mechanical and thermal design of the module. Since four Infinity Fabric 3.0 links are used between GPUs, this leaves four of those 25GB/s links (100GB/s of bi-directional bandwidth) for linking with other hosts or accelerators.
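The link arithmetic above can be laid out as a small budget calculation. This is a rough sketch using the eight-link, four-link, and 25GB/s figures as quoted in this article; the function name and dictionary keys are hypothetical, and how the per-link figure is counted (per direction or combined) is not spelled out in the source.

```python
def link_budget(total_links, inter_gpu_links, gb_per_s_per_link):
    """Split Infinity Fabric links between GPU-to-GPU and external use.

    All inputs are the figures quoted in the article; the per-link
    bandwidth is taken at face value.
    """
    external_links = total_links - inter_gpu_links
    return {
        "inter_gpu_gbs": inter_gpu_links * gb_per_s_per_link,
        "external_links": external_links,
        "external_gbs": external_links * gb_per_s_per_link,
    }


budget = link_budget(total_links=8, inter_gpu_links=4, gb_per_s_per_link=25)
print(budget)
# {'inter_gpu_gbs': 100, 'external_links': 4, 'external_gbs': 100}
```

With four links reserved for the GPU-to-GPU connection, the remaining four add up to the same 100GB/s for hosts or other accelerators.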

Shipping to Frontier supercomputer, coming in Q1 2022 for everyone else

AMD was keen to note that the Instinct MI200 series is already shipping, but only to the Frontier supercomputer, a system AMD is working on with the US Department of Energy.

As for the rest of the customers, the MI200 will be available in Q1 2022, and all the usual partners are on the list.