Powered by a Xilinx automotive platform consisting of system-on-a-chip (SoC) devices and AI acceleration software, the scalable solution will deliver high performance, low latency and best power efficiency for embedded AI in automotive applications today.

There are not many additional details about what exactly the collaboration will cover; it is apparently too early for that. Daimler AG recognized Xilinx's advancements and the fact that the company has shipped a cumulative 40 million automotive units to automakers and Tier 1 suppliers over the last twelve years. Some of its solutions, such as FPGA-based silicon where both hardware and software can be updated over the air, are definitely unique compared to its competitors. You can simply add features to the hardware, something you cannot do with an ASIC/SoC approach.

We spoke with Willard Tu, senior director, Automotive, at Xilinx, who went into detail about how the low latency and high throughput of FPGAs give them a big advantage over GPU-based solutions.

Low latency with consistent compute efficiency

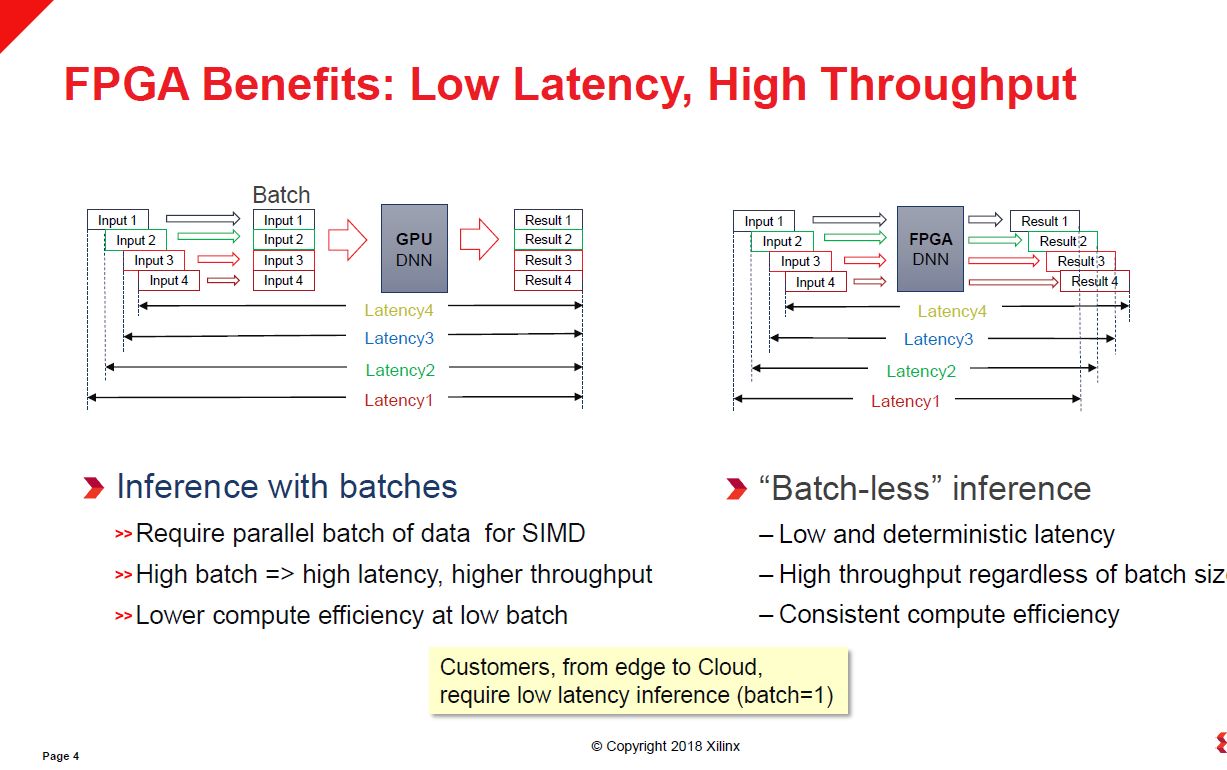

With a GPU-based system that runs inference in batches, you end up with serious latency constraints and batching issues.

A GPU needs batches of data to keep its parallel SIMD units busy. The trade-off is that a large batch brings higher throughput but also higher latency.

With small batches, GPU-based solutions end up with lower compute efficiency.

Batching can be described as waiting in line or, as the Brits like to say, in a queue. The system waits until it has a batch of, say, four data points and only then releases it to the GPU. The processing is parallel, but you have to wait for the data to arrive. The batched data from different inputs can be picture frames from the front camera or any of the surrounding cameras. A delay in this data is dangerous, as it could cause the system to fail to recognize an object in time and result in an otherwise preventable accident.
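The waiting-in-line effect can be put into numbers with a minimal sketch. It assumes frames arrive one at a time at a fixed interval and that a batch is released only once it is full; the function name `batch_wait_ms`, the batch size of four and the 33 ms interval (roughly 30 frames per second) are illustrative assumptions, not figures from Xilinx or Daimler.

```python
def batch_wait_ms(batch_size, frame_interval_ms):
    """Return how long each frame waits in the queue before its batch is full.

    Assumption: frame i (0-based) arrives at i * frame_interval_ms and the
    batch is released the moment the last frame arrives.
    """
    release_time = (batch_size - 1) * frame_interval_ms
    return [release_time - i * frame_interval_ms for i in range(batch_size)]


# Illustrative numbers only: four frames per batch, one frame every 33 ms.
waits = batch_wait_ms(batch_size=4, frame_interval_ms=33.0)
print(waits)       # [99.0, 66.0, 33.0, 0.0]
print(max(waits))  # 99.0
```

Under these assumptions the first frame in the batch sits idle for three frame intervals before any compute starts, which is the delay the queue analogy describes.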

FPGA: little or no latency

With an FPGA solution you get batchless inference with low and deterministic latency. Programmers like this, especially in mission-critical systems. You don't want latency to be the reason an on-board computer fails to recognize something as crucial as an unusual-looking pedestrian on the street, let's say a mascot. Remember the recent fatal Uber accident in which a pedestrian was walking with her bike: the system recognized the object but classified it as a false positive. This is something that should be preventable.

An FPGA can run batchless inference, meaning every input sees almost the same latency as the first one; let's call it batch 1. On a GPU the latency grows as more inputs are gathered into a batch, while the FPGA always stays at batch 1 and pushes the data through the pipeline with little or no added latency.

High throughput can be achieved regardless of batch size. This is not the case with a GPU approach, where massive input from, let's say, 12 cameras, a few lidars and a few radars, with huge amounts of data, could cause significant latency.

What you get with the FPGA is low latency and batchless inference with high throughput. More importantly, vendors are after consistent compute efficiency.
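As a rough illustration of why staying at batch 1 gives deterministic latency, the toy model below adds a fixed compute time to the time a frame spends waiting for its batch to fill. Everything in it is an assumption made for the sake of the example: the helper names, the 33 ms frame interval, the 10 ms compute time, and the simplification that compute time does not change with batch size. It is not a measurement of any GPU or FPGA.

```python
def frame_latencies_ms(batch_size, frame_interval_ms, compute_ms):
    """End-to-end latency of each frame in one batch: queue wait plus compute.

    Assumptions: frames arrive at a fixed interval, the batch is released when
    full, and compute_ms is the same for every batch size.
    """
    release_time = (batch_size - 1) * frame_interval_ms
    return [release_time - i * frame_interval_ms + compute_ms
            for i in range(batch_size)]


def jitter_ms(latencies):
    """Spread between the slowest and fastest frame in the batch."""
    return max(latencies) - min(latencies)


# Hypothetical numbers: compare batch 1 with larger batches.
for batch_size in (1, 4, 8):
    latencies = frame_latencies_ms(batch_size, frame_interval_ms=33.0, compute_ms=10.0)
    print(batch_size, max(latencies), jitter_ms(latencies))
```

In this model a batch of one has zero jitter, so every frame sees the same latency, while larger batches raise both the worst-case latency and the spread between frames.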

“We are accelerating our product development using AI technology by engaging our global development centers with Xilinx experts. Through this strategic collaboration, Xilinx is providing technology that will enable us to deliver very low latency and power-efficient solutions for vehicle systems which must operate in thermally constrained environments. We have been very impressed with Xilinx’s heritage and selected the company as a trusted partner for our future products”, said Georges Massing, director user interaction & software, Daimler AG.

As part of the strategic collaboration, deep learning experts from the Mercedes-Benz Research and Development centers in Sindelfingen, Germany and Bangalore, India are implementing their AI algorithms on a highly adaptable automotive platform from Xilinx. Mercedes-Benz will productize Xilinx’s AI processor technology, enabling the most efficient execution of their neural networks.

“We are proud to announce this collaboration with Daimler on advanced AI applications. Our adaptable acceleration platform for automotive offers industry leaders like Daimler a high level of flexibility for innovation in deploying neural networks for intelligent vehicle systems”, said Willard Tu, senior director, Automotive, Xilinx.