However, this week Nvidia blogged that Google's numbers fail to take into account how wonderful its new boards are.

Google compared its board to the older, Kepler-based, dual-GPU K80 rather than the newer Pascal-based GPUs.

Nvidia moaned that Google's team released technical information about the benefits of TPUs this past week but did not compare the TPU to the current-generation, Pascal-based P40.

The claim that the TPU has 13x the performance of the K80 is provisionally true, but there's a snag: that 13x figure is the geometric mean across all the various workloads combined, not a speedup that every individual workload sees.
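To show why a geometric mean can hide the spread between workloads, here is a minimal Python sketch. The workload names and speedup numbers are made up for illustration and are not Google's TPU results; the point is only that one summary figure can sit far from both the best and the worst individual result.

```python
import math

# Hypothetical per-workload speedups over a baseline chip.
# Made-up numbers for illustration only; these are NOT Google's TPU results.
speedups = {"workload_a": 16.0, "workload_b": 8.0, "workload_c": 2.0, "workload_d": 1.0}


def geometric_mean(values):
    """Return the n-th root of the product of values, computed via logs."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))


def arithmetic_mean(values):
    """Return the ordinary average of values."""
    values = list(values)
    return sum(values) / len(values)


print(f"geometric mean:  {geometric_mean(speedups.values()):.2f}x")
print(f"arithmetic mean: {arithmetic_mean(speedups.values()):.2f}x")
print(f"range: {min(speedups.values()):.0f}x to {max(speedups.values()):.0f}x")
```

With these hypothetical inputs the geometric mean is 4x, while individual workloads range from 1x to 16x. The geometric mean is the usual choice for averaging ratios, but it still compresses that whole range into one number.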

Nvidia's argument is that Pascal has much higher memory bandwidth and far more resources for inference than the K80. As a result, Nvidia says, the P40 offers 26x more inference performance than one die of a K80.

As ExtremeTech points out, there are all sorts of things that are "unclear" about Nvidia's claims.

For example, it is unclear whether Nvidia's claim takes Google's tight latency caps into account. At the small batch sizes Google requires to stay under its 8 ms latency threshold, K80 utilization is just 37 percent of maximum theoretical performance. The vagueness of the claims makes it difficult to evaluate their accuracy.
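The 37 percent figure matters because the baseline a speedup is measured against changes the headline number. Below is a minimal sketch, assuming an arbitrary hypothetical peak value for the K80. Only the 37 percent utilization comes from Google's data; the function name and peak figure are illustrative.

```python
# Sketch: throughput a chip actually delivers when a latency cap forces small batches.
# Only the 37% utilization comes from the article; the peak value is a hypothetical placeholder.


def delivered_throughput(peak: float, utilization: float) -> float:
    """Return peak throughput scaled by the fraction of peak actually used."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak * utilization


K80_PEAK = 100.0             # hypothetical peak, arbitrary units
K80_UTIL_SMALL_BATCH = 0.37  # K80 utilization at batch sizes that meet the 8 ms cap

delivered = delivered_throughput(K80_PEAK, K80_UTIL_SMALL_BATCH)

# A speedup quoted against the K80's theoretical peak and one quoted against its
# latency-capped throughput differ by this factor (1 / 0.37).
baseline_gap = K80_PEAK / delivered

print(f"K80 delivered: {delivered:.1f} of {K80_PEAK:.0f} units")
print(f"baseline gap:  {baseline_gap:.2f}x")
```

Whatever peak you plug in, the gap stays at roughly 2.7x, which is why it matters whether a quoted speedup assumes the K80's theoretical peak or its latency-capped throughput.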

Google's enormous lead in incremental performance per watt will also be difficult to overcome. Google said its boffins modelled the expected performance of a TPU using GDDR5 instead of DDR3 memory, which would give it more memory bandwidth.

Scaling memory bandwidth up by 4x would improve overall performance by 3x, at the cost of about 10 percent more die area. In other words, Google is saying it can boost the TPU side of the equation too.
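The modelled figures imply a large gain in performance per unit of silicon. This sketch just does the arithmetic on the numbers quoted above, treating "about 10 percent" as exactly 10 percent. The derived ratios are our own calculation, not figures Google published.

```python
# Sketch: what "4x bandwidth -> 3x performance for ~10% more die" implies.
# Inputs are the figures quoted from Google's modelling (10% treated as exact);
# the derived ratios are simple arithmetic, not numbers Google published.

BANDWIDTH_GAIN = 4.0  # modelled memory bandwidth scaling
PERF_GAIN = 3.0       # modelled overall performance gain
AREA_GAIN = 1.10      # roughly 10% more die area

# Performance delivered per unit of die area, relative to the original TPU.
perf_per_area_gain = PERF_GAIN / AREA_GAIN

# How much of a perfectly linear bandwidth-to-performance scaling is achieved.
scaling_efficiency = PERF_GAIN / BANDWIDTH_GAIN

print(f"performance per unit die area: {perf_per_area_gain:.2f}x")
print(f"share of linear bandwidth scaling: {scaling_efficiency:.0%}")
```

This works out to roughly 2.7x more performance per unit of die area, with performance tracking about 75 percent of a linear bandwidth increase.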

No one is saying that the P40 is slower than the K80, but Google's data shows a huge performance-per-watt advantage for the TPU compared with GPUs, particularly once host server power is subtracted from the equation.
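Subtracting host power widens the gap because the host's draw is an overhead both setups pay. Below is a minimal sketch, assuming "total" means accelerator plus host power and "incremental" means the accelerator alone, as the article describes. The two accelerators and all throughput and power figures are hypothetical and are not measurements of the TPU, K80 or P40.

```python
from dataclasses import dataclass


@dataclass
class System:
    """A hypothetical accelerator installed in a host server."""
    name: str
    perf: float         # hypothetical throughput, arbitrary units
    accel_watts: float  # hypothetical accelerator power draw
    host_watts: float   # hypothetical host server power draw

    def total_perf_per_watt(self) -> float:
        # Host server power counted alongside the accelerator.
        return self.perf / (self.accel_watts + self.host_watts)

    def incremental_perf_per_watt(self) -> float:
        # Host server power subtracted: only the accelerator's own draw counts.
        return self.perf / self.accel_watts


a = System("accelerator A", perf=100.0, accel_watts=100.0, host_watts=200.0)
b = System("accelerator B", perf=100.0, accel_watts=300.0, host_watts=200.0)

total_ratio = a.total_perf_per_watt() / b.total_perf_per_watt()
incr_ratio = a.incremental_perf_per_watt() / b.incremental_perf_per_watt()

print(f"A/B total perf/W:       {total_ratio:.2f}x")
print(f"A/B incremental perf/W: {incr_ratio:.2f}x")
```

With these made-up numbers, A's advantage is about 1.67x when host power is included and 3x once it is subtracted. The same throughput difference looks much larger when the shared overhead is taken out.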

Basically, a GPU carries lots of hardware that a chip like Google's TPU simply doesn't need.


Nvidia claims it can beat Google’s tensor processing unit

Google compared its TPU against the wrong Nvidia card

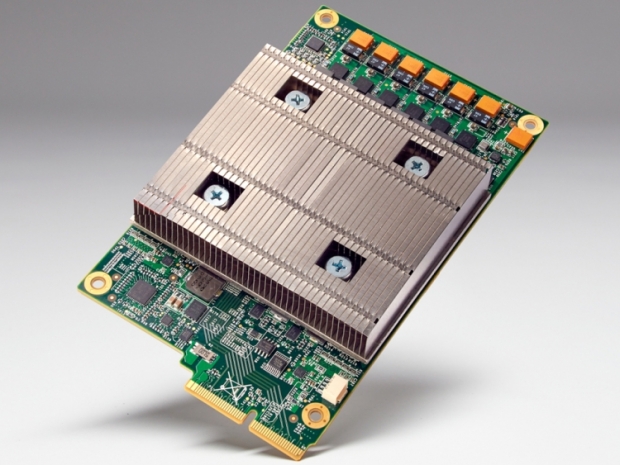

Google's internal benchmarks of its own TPU, or tensor processing unit, indicated that its purpose-built AI board cleaned Nvidia's clock when it came to number crunching and power consumption.