According to a research paper published in Computing and Software for Big Science, a team of boffins at CERN has proposed a novel way to process the enormous data flow from the LHCb particle detector.

The big idea is to provide the collaboration with a robust, efficient and flexible solution that can deal with the increased data flow expected during the upcoming data-taking period. The solution is not only much cheaper, but will also help reduce the cluster size and process data at speeds of up to 40 Tbit/s.

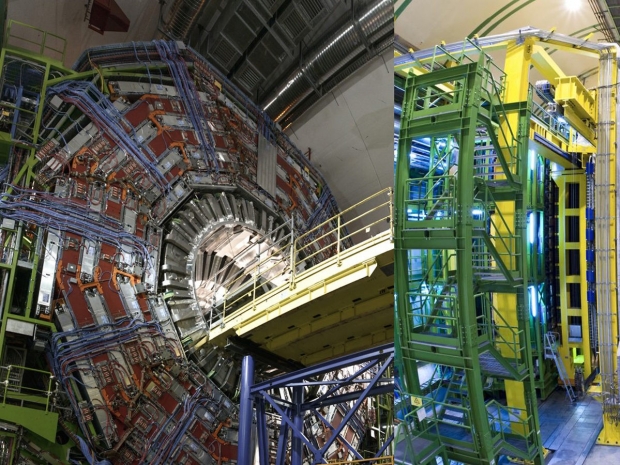

The LHC, and the LHCb experiment in particular, were built to search for "new physics", something beyond the Standard Model. While the research has achieved moderate success, hopes of finding completely new particles, such as WIMPs, have so far not been fulfilled.

Many physicists believe that achieving new results will require considerably more statistics on particle collisions at the LHC. That calls not only for new accelerating equipment (upgrades are currently underway and due to be completed by 2021-2022) but also for brand-new systems to process the collision data. For an event at LHCb to count as correctly registered, the reconstructed track must match the one modeled by the algorithm. If there is no match, the data are excluded. About 70 percent of all collisions in the LHC are excluded this way, which means that this preliminary analysis alone requires substantial computing capacity.
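The match-or-exclude step described above can be pictured with a toy filter. This is only an illustrative sketch, not the LHCb selection: the event layout, the `track_matches` and `select_events` helpers, and the 0.5 tolerance are all assumptions made up for the example.

```python
from typing import Dict, List, Sequence


def track_matches(reconstructed: Sequence[float],
                  modeled: Sequence[float],
                  tolerance: float = 0.5) -> bool:
    """Return True if every reconstructed point lies within `tolerance`
    of the corresponding modeled point (hypothetical matching rule)."""
    if len(reconstructed) != len(modeled):
        return False
    return all(abs(r - m) <= tolerance for r, m in zip(reconstructed, modeled))


def select_events(events: List[Dict], tolerance: float = 0.5) -> List[Dict]:
    """Keep only events whose reconstructed track matches the model."""
    return [
        e for e in events
        if track_matches(e["reconstructed"], e["modeled"], tolerance)
    ]


# Made-up toy events: one coordinate per detector layer.
events = [
    {"id": 1, "reconstructed": [0.0, 1.1, 2.0], "modeled": [0.0, 1.0, 2.1]},
    {"id": 2, "reconstructed": [0.0, 3.0, 7.5], "modeled": [0.0, 1.0, 2.0]},
    {"id": 3, "reconstructed": [0.2, 0.9, 2.3], "modeled": [0.0, 1.0, 2.0]},
]

kept = select_events(events)
print([e["id"] for e in kept])  # event 2 is excluded: its track does not match
```

Every event has to be checked before it can be thrown away, which is why a filter that discards most of its input still needs a lot of compute.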

A group of researchers, including Andrey Ustyuzhanin, Mikhail Belous and Sergei Popov from HSE University, proposed using a farm of GPUs as the first high-level trigger (HLT1) for event registration and detection on the LHCb detector. The concept has been named Allen, after Frances Allen, a researcher in computational system theory and the first woman to have received the Turing Award.

Unlike previous triggers, the new system moves the data from CPUs to GPUs, whether professional Tesla cards or ordinary gaming GPUs from Nvidia or AMD. The Allen trigger does not depend on a single equipment vendor, which makes it easier to build and reduces costs. With the highest-performance systems, the trigger can process data at up to 40 Tbit/s.

In the standard scheme, information on all events goes from the detector to a level-zero (L0) trigger built from field-programmable gate arrays (FPGAs), which perform a basic first selection. In the new scheme, there will be no L0 trigger. The data go straight to the farm, where each of the 300 GPUs simultaneously processes millions of events per second.
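To get a feel for these numbers, here is a back-of-envelope calculation of how much data each GPU would have to absorb. It is a rough sketch that assumes the quoted peak rate of 40 Tbit/s is spread evenly over the 300 GPUs and uses decimal units; the real load balancing is not described here.

```python
TOTAL_RATE_TBIT_S = 40   # peak aggregate rate quoted in the article
NUM_GPUS = 300           # farm size quoted in the article


def per_gpu_rate_gbyte_s(total_tbit_s: float, num_gpus: int) -> float:
    """Per-GPU share of the aggregate rate in gigabytes per second,
    assuming an even split (an assumption, not a stated design fact)."""
    total_gbit_s = total_tbit_s * 1000   # 1 Tbit = 1000 Gbit (decimal units)
    total_gbyte_s = total_gbit_s / 8     # 8 bits per byte
    return total_gbyte_s / num_gpus


share = per_gpu_rate_gbyte_s(TOTAL_RATE_TBIT_S, NUM_GPUS)
print(f"{share:.1f} GB/s per GPU")  # prints "16.7 GB/s per GPU"
```

Under these assumptions, 40 Tbit/s is 5,000 GB/s in total, or just under 17 GB/s for every card in the farm.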

After initial event registration and detection, only the selected data containing valuable physical information go on to the ordinary x86 processors of the second-level trigger (HLT2). This means that the main computational load related to event classification is handled at the farm, exclusively by GPUs.

This framework will help solve the event analysis and selection tasks more effectively, because GPUs were designed from the outset as multi-channel systems with many cores. While CPUs are geared towards sequential information processing, GPUs are built for massive simultaneous calculations. In addition, they have a more specific and limited set of tasks, which adds to performance.

Allen will cost significantly less than a comparable CPU-based system. It will also be simpler than previous event registration systems at accelerators.